AIOS

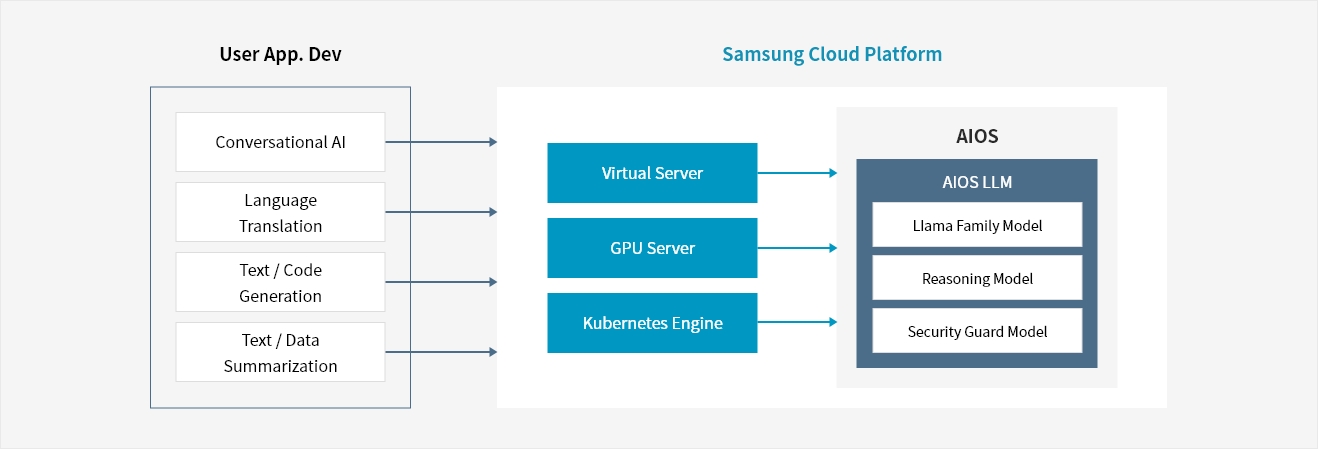

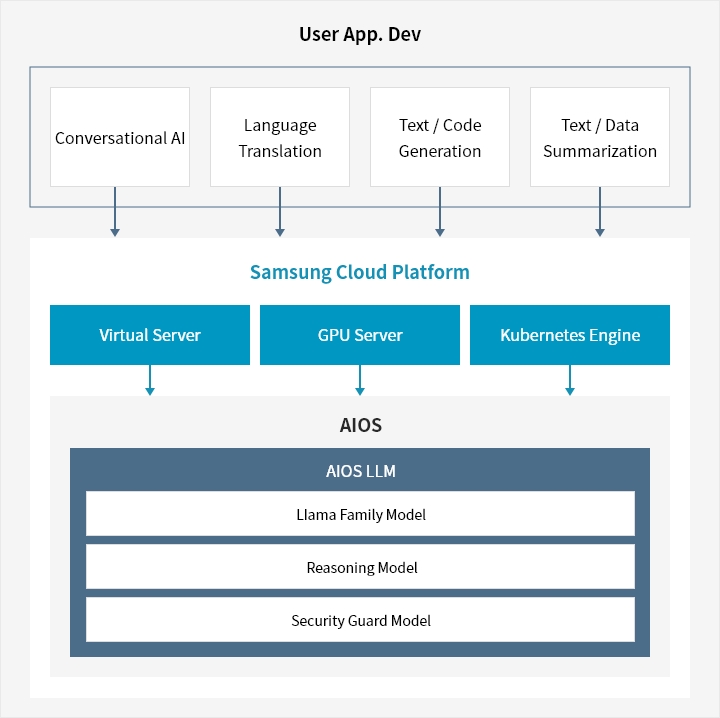

LLM Services Available Directly from Created Resources

Overview

- Convenient Use of LLM

  AIOS provides LLM endpoints to Virtual Servers, GPU Servers, and Kubernetes Engine nodes created in Samsung Cloud Platform. With the GPU-based AIOS LLM, users can use LLM services immediately after deploying a resource.

- Improving AI Development Productivity

  AIOS offers developer-friendly interfaces, including Python-based APIs and SDKs and a REST API, which help AI developers build AI applications faster and more productively.

- Providing Various AIOS Models

  AIOS provides a range of models for LLM response generation. They cover natural language processing tasks (text generation, question answering, and summarization), safety checks on generated text, text similarity calculation and information retrieval, and re-ranking of search results or recommended items.

- Efficient Cost Management

  With AIOS, users can optimize cloud costs by using LLM resources only when they are needed.
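As a minimal sketch of calling an AIOS LLM endpoint over REST from a created resource, the following builds a chat request using only the Python standard library. It assumes the endpoint accepts OpenAI-style chat completion requests. The endpoint URL, the `/v1/chat/completions` path, and the model name are placeholders for illustration, not values taken from AIOS documentation.

```python
import json
import urllib.request

# Hypothetical values: replace with the endpoint shown for your resource
# and a model name that AIOS actually serves.
AIOS_ENDPOINT = "http://aios.example.internal"
MODEL_NAME = "example-llm"


def build_chat_request(endpoint: str, model: str, prompt: str) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completion request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=endpoint.rstrip("/") + "/v1/chat/completions",  # assumed path
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send(request: urllib.request.Request, timeout: float = 30.0) -> dict:
    """Send the request and decode the JSON response body."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))


if __name__ == "__main__":
    req = build_chat_request(AIOS_ENDPOINT, MODEL_NAME, "Summarize AIOS in one sentence.")
    print(req.full_url)
    # reply = send(req)  # only works from a resource that can reach the endpoint
```

Building the request separately from sending it makes it possible to inspect the URL and payload before running the call from a Virtual Server, GPU Server, or Kubernetes Engine node.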

Service Architecture

- Virtual Server → AIOS

- GPU Server → AIOS

- Kubernetes Engine → AIOS

Key Features

- Provide AIOS LLM endpoint

  - Automatically provides a private LLM endpoint on the detail page of each created Virtual Server, GPU Server, and Kubernetes Engine resource

- Provide visualized reports

  - Check the number of calls and token usage by service type, resource, and model

  - View overall call statistics for each LLM model

- AIOS compatibility

  - Compatible with the OpenAI and LangChain SDKs, allowing easy integration with existing development environments and frameworks
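The OpenAI SDK compatibility mentioned above might be used roughly as follows. This is a sketch under assumptions: the `/v1` path prefix, the `AIOS_API_KEY` environment variable, and the `"EMPTY"` fallback key are hypothetical, and the endpoint and model name must come from your own AIOS resource. Only the `openai` package's `OpenAI` client and its `chat.completions.create` call are real library APIs here.

```python
import os


def aios_client_settings(endpoint: str) -> dict:
    """Return keyword arguments for an OpenAI-compatible client."""
    return {
        "base_url": endpoint.rstrip("/") + "/v1",  # assumed path prefix
        # Hypothetical variable name; whether AIOS needs a key is an assumption.
        "api_key": os.environ.get("AIOS_API_KEY", "EMPTY"),
    }


def ask(endpoint: str, model: str, prompt: str) -> str:
    """Send one chat message through the OpenAI Python SDK."""
    from openai import OpenAI  # third-party: pip install openai

    client = OpenAI(**aios_client_settings(endpoint))
    completion = client.chat.completions.create(
        model=model,
        messages=[{"role": "user", "content": prompt}],
    )
    return completion.choices[0].message.content
```

Because only the base URL and key differ from a stock OpenAI setup, existing code written against the OpenAI SDK should need little more than these two settings changed. LangChain's OpenAI chat integration can typically be pointed at the same base URL in a similar way.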
