Multi-node GPU Cluster

Multiple GPUs for Large-Scale, High-Performance AI Computing

Overview

Easy-to-use GPU Architecture

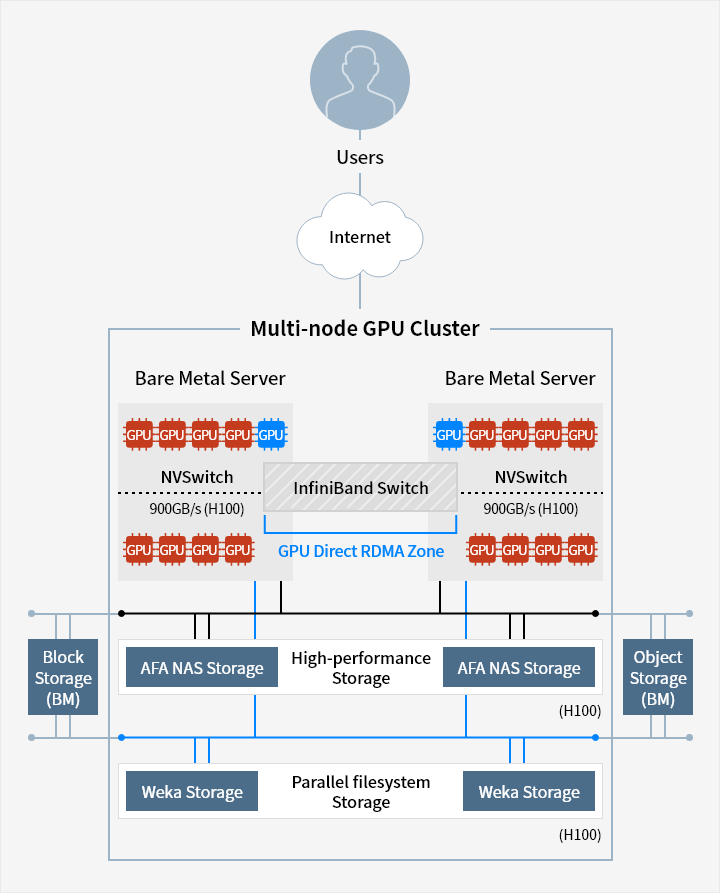

Multi-node GPU Cluster runs on Bare Metal Servers that incorporate the high-performance NVIDIA SuperPOD architecture. Its GPUs handle multiple concurrent user jobs as well as high-performance distributed workloads such as large-scale AI model training.

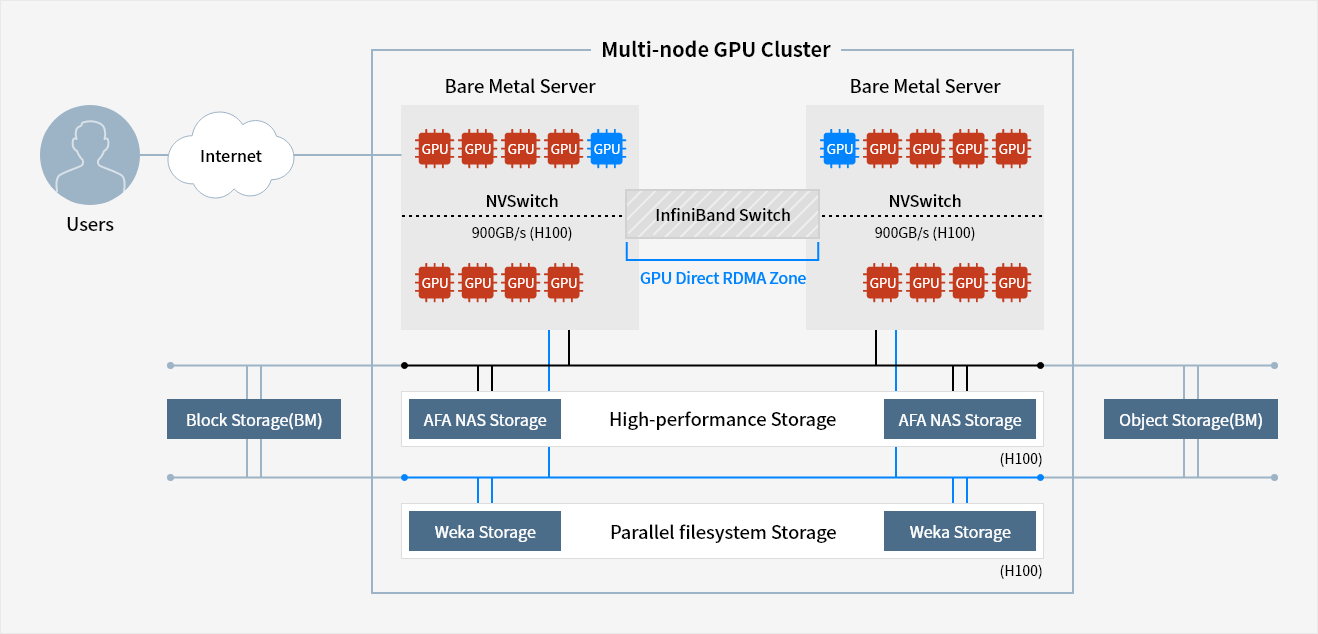

Integration with High-Performance Network

Through integration with the network resources of Samsung Cloud Platform, Multi-node GPU Cluster can handle high-performance AI jobs. GPUDirect RDMA (Remote Direct Memory Access), configured over an InfiniBand switch, moves data directly between GPU memories, enabling high-speed AI/machine learning computation.
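As a rough illustration of what a GPUDirect RDMA setup over InfiniBand can look like from the job side, the sketch below builds the environment variables that NCCL-based training frameworks commonly read. It assumes the job uses NCCL, which the service description does not state. The helper `nccl_ib_env`, the device names `mlx5_0`/`mlx5_1`, and the interface name `eth0` are hypothetical placeholders, not values provided by the platform.

```python
def nccl_ib_env(ib_devices, socket_ifname, debug=False):
    """Build NCCL environment variables for InfiniBand with GPUDirect RDMA.

    ib_devices and socket_ifname are illustrative assumptions; the real
    names depend on the hardware and OS image of the actual nodes.
    """
    env = {
        # Keep the InfiniBand transport enabled ("1" would disable it).
        "NCCL_IB_DISABLE": "0",
        # Restrict NCCL to the listed InfiniBand host channel adapters.
        "NCCL_IB_HCA": ",".join(ib_devices),
        # Maximum GPU-to-NIC topology distance at which NCCL uses GPUDirect RDMA.
        "NCCL_NET_GDR_LEVEL": "PHB",
        # Network interface used for NCCL's out-of-band bootstrap traffic.
        "NCCL_SOCKET_IFNAME": socket_ifname,
    }
    if debug:
        # Ask NCCL to log which transport it actually selected.
        env["NCCL_DEBUG"] = "INFO"
    return env


if __name__ == "__main__":
    for key, value in nccl_ib_env(["mlx5_0", "mlx5_1"], "eth0", debug=True).items():
        print(f"export {key}={value}")
```

Running the script prints `export` lines that could be sourced before launching a distributed job. With `NCCL_DEBUG=INFO` set, the NCCL log is the usual place to confirm whether the InfiniBand path was actually chosen.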

Integration with High-Performance Storage

Multi-node GPU Cluster supports integration with various storage resources on the Samsung Cloud Platform.

Either high-performance SSD NAS File Storage attached directly to the high-speed network or NVMe parallel filesystem storage is available, and Block Storage and Object Storage can also be integrated.

Service Architecture

Block Storage(BM) - Multi-node GPU Cluster [AFA NAS Storage - High-Performance Storage - AFA NAS Storage(H100)] - Object Storage(BM)

Block Storage(BM) - Multi-node GPU Cluster [Weka Storage - High-Performance Storage - Weka Storage(H100)] - Object Storage(BM)

[Diagram: Multi-node GPU Cluster, showing GPUs interconnected through NVSwitch at 900GB/s (H100)]
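To give a feel for what a 900GB/s NVSwitch figure means in practice, the sketch below estimates an idealized lower bound on the time for a ring all-reduce among the GPUs of one server. It rests on several assumptions: the ring algorithm (the library in use may pick a different one), zero latency, and the full 900GB/s being usable by each GPU for this traffic. The function name `ring_allreduce_seconds` is hypothetical.

```python
def ring_allreduce_seconds(message_bytes, num_gpus, link_bytes_per_s):
    """Idealized ring all-reduce time, ignoring latency and protocol overhead.

    In a ring all-reduce each GPU sends (and receives) 2 * (N - 1) / N times
    the message size, so the time is that traffic divided by the bandwidth.
    """
    if num_gpus < 2:
        return 0.0  # a single GPU has nothing to exchange
    traffic_bytes = 2 * (num_gpus - 1) / num_gpus * message_bytes
    return traffic_bytes / link_bytes_per_s


if __name__ == "__main__":
    # Assumed inputs: 1 GB of gradients, 8 GPUs, 900 GB/s taken from the diagram.
    seconds = ring_allreduce_seconds(1e9, 8, 900e9)
    print(f"estimated lower bound: {seconds * 1e3:.2f} ms")
```

With these inputs each GPU moves 1.75 GB, which gives a bound of roughly 1.94 ms. Measured times will be higher, so the number is best read as a sanity check rather than a performance prediction.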

Key Features

Create/manage GPU Bare Metal Server

- Standard GPU Bare Metal Server with 8 NVIDIA GPUs

※ Internal NVMe disk, NVIDIA NVSwitch and NVIDIA NVLink

- Provide a standard OS image with the RDMA SW stack (OS: Ubuntu)
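Because each standard server carries 8 GPUs, a distributed job usually needs to translate between a global worker index and a (server, GPU) pair. The sketch below shows that arithmetic. The names `world_size` and `locate` are hypothetical helpers, and the contiguous rank layout is an assumption (it matches the common convention of launchers, not something the service specifies).

```python
GPUS_PER_NODE = 8  # from the service description: 8 NVIDIA GPUs per server


def world_size(num_nodes):
    """Total number of GPU workers across the cluster."""
    return num_nodes * GPUS_PER_NODE


def locate(global_rank):
    """Map a global rank to (node_index, local_gpu_index).

    Assumes ranks are assigned contiguously, node by node.
    """
    return divmod(global_rank, GPUS_PER_NODE)


if __name__ == "__main__":
    nodes = 4  # assumed cluster size, for illustration only
    print("world size:", world_size(nodes))
    print("rank 19 runs on (node, gpu):", locate(19))
```

For a 4-server cluster this yields 32 workers, and global rank 19 maps to GPU 3 on the third server (node index 2).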

High performance processing

- Configure a GPUDirect RDMA environment using an InfiniBand switch

- Provide high-performance SSD File Storage (H100)

- Provide NVMe parallel filesystem storage (H100)

Storage and network integration

- Provide additional storage (Block, Object, File) and network connections on top of the OS disk

- Integration setting for subnet/IP and VPC Firewall
