Did a Java App Suddenly Shut Down?

It's because of NPE

– Samsung SDS's Technology for Automatically Detecting Null Pointer Exceptions

This paper introduces NpeTest, a unit test generation technique designed specifically to find Null Pointer Exceptions (NPEs), one of the most prevalent and critical errors in Java applications. Existing automatic unit test generation tools such as Randoop and EvoSuite focus on improving code coverage and are not sufficiently effective at catching NPEs. NpeTest combines static and dynamic analysis to guide the test case generator toward scenarios likely to trigger NPEs. In experiments on 108 NPE benchmarks collected from 96 real-world projects, NpeTest significantly improved the NPE reproduction rate, reaching 78.9%, a 38.7% relative increase over EvoSuite's 56.9%. Furthermore, NpeTest detected 89 previously unknown NPEs in an industry project.

Effective Unit Test Generation for Java Null Pointer Exceptions

👉 See the Publication

NPE, Java Developer’s Nightmare

Null Pointer Exceptions (NPEs) are among the most common and critical errors in Java applications. An NPE is a critical software defect because dereferencing a null reference throws an exception that, if uncaught, crashes the program and can disrupt the behavior of the entire system. According to recent industry reports, NPEs account for the largest share of reported crashes in Java applications, which makes software testing mandatory for reducing the risk of NPEs during software development.

The Complexity of Unit Testing and the Difficulty of Detecting NPEs

Unit testing is one of the most widely used software testing techniques for object-oriented programming languages such as Java. With well-designed test cases, unit testing validates that each unit of software performs as expected and identifies bugs. However, finding bug-triggering unit tests is a complex and time-consuming task, and it becomes harder as the size and complexity of the software system grow.

To reduce the burden on developers of designing unit tests, automatic test case generation techniques have been proposed, following two major approaches: random testing and search-based software testing. Both approaches generate test cases by automatically synthesizing method call sequences and other elements for the target unit.

However, we observed that automatic unit test generation tools such as Randoop and EvoSuite are not sufficiently effective at catching NPEs. These tools primarily strive for high code coverage, but higher code coverage does not necessarily lead to better NPE detection. This is because software bugs, especially NPEs, usually occur only under specific conditions.

Test Case Generation Failures Through NPE Example

We analyze the problem using an NPE found in the Apache Qpid Proton-J project.

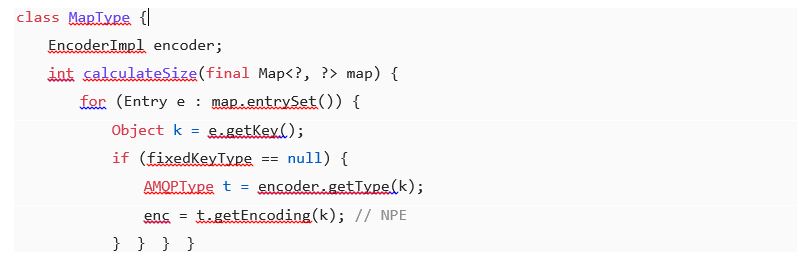

// MapType.java

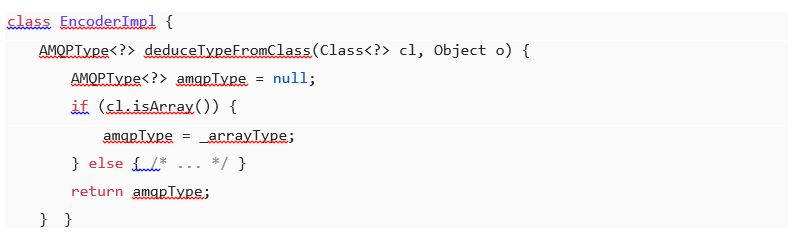

// EncoderImpl.java

The root cause of this NPE is the null literal assigned to the variable amqpType in the deduceTypeFromClass method, which is returned without refinement. The NPE occurs in the calculateSize method during the call to t.getEncoding(k), where the getType method internally calls deduceTypeFromClass and returns the result directly.

However, the conditions under which amqpType is left unrefined during execution are not trivial. For this to occur, the type of the first argument must be set appropriately, and that type is determined by the argument of the calculateSize method. Additionally, the input map of the calculateSize method must contain at least one element.

To generate test cases that trigger this NPE, unit test generation tools must mutate the generic type parameters of Map across various types until they find one that bypasses the branch conditions in deduceTypeFromClass, so that the value of amqpType is never refined. However, tools such as EvoSuite and Randoop failed to generate such test cases because the space of test cases and statements to mutate is too large.
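The shape of this bug can be illustrated with a deliberately simplified sketch. This is not the Qpid Proton-J source: the class `TypeRegistry`, the interface `TypeHandler`, and the registered types are hypothetical stand-ins. They only mirror the pattern described above, where a lookup starts from a null literal, refines it on some branches only, and the caller dereferences the result once per map entry.

```java
import java.util.HashMap;
import java.util.Map;

/** Hypothetical stand-in for an encoder that deduces a type handler from a class. */
final class TypeRegistry {
    interface TypeHandler { int encodedSize(Object value); }

    private final Map<Class<?>, TypeHandler> handlers = new HashMap<>();

    TypeRegistry() {
        handlers.put(String.class, v -> ((String) v).length());
        handlers.put(Integer.class, v -> 4);
    }

    /** Starts from null and refines the value only when a branch matches. */
    TypeHandler deduceTypeFromClass(Class<?> clazz) {
        TypeHandler handler = null;                 // the null literal
        if (handlers.containsKey(clazz)) {
            handler = handlers.get(clazz);          // refined for a registered type
        } else if (clazz.isArray()) {
            handler = v -> 8;                       // refined for arrays
        }
        return handler;                             // may still be null here
    }

    /** Dereferences the deduced handler once per key, so an empty map never fails. */
    int calculateSize(Map<?, ?> map) {
        int size = 0;
        for (Object key : map.keySet()) {
            TypeHandler t = deduceTypeFromClass(key.getClass());
            size += t.encodedSize(key);             // NPE if no branch refined t
        }
        return size;
    }
}
```

In this sketch, a map with `String` keys is sized normally and an empty map returns 0, while a non-empty map with `Double` keys throws a `NullPointerException`. A generator therefore has to find both the right key type and a non-empty map, which is the kind of combined condition the text describes.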

Samsung SDS's Solution: "NpeTest" for Generating Test Cases to Detect NPEs More Effectively

NpeTest employs both static and dynamic analysis to generate test cases for better NPE detection.

Search-Based Software Testing

NpeTest relies on EvoSuite, a search-based software testing (SBST) tool. The simplified test case generation process of EvoSuite is as follows:

- Identifies coverage goals

- Builds an initial parent population

- Generates offspring population from the parent population

- Computes fitness values for all test cases

- Selects the next parent test cases

- Updates coverage goals

- Repeats until the time budget is exhausted

- Returns the set of test cases as the final solution
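The loop above can be sketched in a few lines. This is a toy illustration of a generic search-based loop, not EvoSuite's implementation: a "test case" is reduced to a single integer input, a "goal" to an integer id, and the class `ToySearch`, the `execute` function, and the fixed generation count (used in place of a time budget so the run is deterministic) are all assumptions made for the example.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.HashSet;
import java.util.List;
import java.util.Random;
import java.util.Set;
import java.util.function.Function;

/** Toy search loop: a "test case" is an int input, a "goal" is an int id. */
final class ToySearch {
    static Set<Integer> search(Set<Integer> goals,
                               Function<Integer, Set<Integer>> execute,
                               int generations, long seed) {
        Random rnd = new Random(seed);
        Set<Integer> uncovered = new HashSet<>(goals);   // coverage goals
        Set<Integer> solution = new HashSet<>();         // inputs kept as the final suite
        List<Integer> parents = new ArrayList<>();
        for (int i = 0; i < 10; i++) parents.add(rnd.nextInt(100)); // initial population

        for (int g = 0; g < generations && !uncovered.isEmpty(); g++) {
            // Offspring: mutate each parent by a small random offset.
            List<Integer> pool = new ArrayList<>(parents);
            for (int p : parents) pool.add(p + rnd.nextInt(21) - 10);

            // Fitness: number of still-uncovered goals an input reaches.
            Function<Integer, Integer> fitness = t -> {
                Set<Integer> hit = new HashSet<>(execute.apply(t));
                hit.retainAll(uncovered);
                return hit.size();
            };

            // Selection: keep the ten fittest inputs as the next parents.
            pool.sort(Comparator.comparing(fitness).reversed());
            parents = new ArrayList<>(pool.subList(0, 10));

            // Update goals: archive inputs that cover something new.
            for (int t : parents) {
                Set<Integer> hit = new HashSet<>(execute.apply(t));
                hit.retainAll(uncovered);
                if (!hit.isEmpty()) {
                    solution.add(t);
                    uncovered.removeAll(hit);
                }
            }
        }
        return solution;
    }
}
```

Because an input is archived only when it covers at least one new goal, the returned suite never contains more inputs than there are goals.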

The workflow of NpeTest is as follows:

- Performs static analysis on the given class

- Computes NPE goals

- Collects NPE functions

- Builds an initial population

- Generates NPE test cases

- Updates the population based on the NPE detection coverage goals

- Refines the methods

- Computes test case scores

- Repeats until the time budget is exhausted

- Returns the set of test cases as the final solution

Static Analysis

The static analyzer (1) identifies all NPE-prone regions and methods in a class under test (CUT) and (2) prioritizes the statements to be mutated in a given test case.

Path Construction. We first construct a control flow graph (CFG) of each method and compute a set of target expressions for each method based on the CFG. Using these sets of target expressions, we classify whether a method is NPE-safe.

Nullable Path Identification. We analyze whether the target expression can be null on a given path. If the expression cannot be null on any path, we can conclude that dereferencing it never raises an NPE.
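A minimal sketch of this idea, assuming an acyclic CFG and a single tracked variable, is shown below. It is far simpler than a real static analyzer: the class `NullablePaths`, the `Effect` enum, and the integer node ids are hypothetical, and the variable is conservatively treated as nullable at method entry.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

/** Toy path-based nullability check over an acyclic control flow graph. */
final class NullablePaths {
    enum Effect { NONE, ASSIGN_NULL, ASSIGN_NON_NULL }

    private final Map<Integer, Effect> effects = new HashMap<>();
    private final Map<Integer, List<Integer>> successors = new HashMap<>();

    /** Registers a CFG node, its effect on the tracked variable, and its successors. */
    void node(int id, Effect effect, int... next) {
        effects.put(id, effect);
        List<Integer> out = new ArrayList<>();
        for (int n : next) out.add(n);
        successors.put(id, out);
    }

    /** True if some path from entry reaches target while the variable may be null. */
    boolean mayBeNullAt(int entry, int target) {
        return walk(entry, target, true); // assumption: nullable on entry
    }

    private boolean walk(int node, int target, boolean nullable) {
        if (node == target) return nullable;          // state just before the dereference
        Effect e = effects.get(node);
        if (e == Effect.ASSIGN_NULL) nullable = true;
        if (e == Effect.ASSIGN_NON_NULL) nullable = false;
        for (int next : successors.getOrDefault(node, new ArrayList<>())) {
            if (walk(next, target, nullable)) return true;
        }
        return false;
    }
}
```

For example, a graph where node 0 assigns null, node 1 branches to node 2 (assigns a non-null value) or node 3 (no assignment), and both reach a dereference at node 4 is reported as nullable at node 4. If node 3 also assigned a non-null value, every path would be safe and the dereference could be skipped as a target.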

NPE-likely Score Computation. We compute the NPE-likely score for the given method. This score is later used for test case selection during mutation.

Mutation Target Selection. When given a test case to mutate, NpeTest selects statements and variables that can trigger NPEs instead of randomly selecting statements to be mutated.
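One way to picture this preference is the sketch below. It is an assumption-laden simplification, not NpeTest's actual selection logic: a test statement is reduced to its text and the variable it defines, the set of NPE-relevant variables is taken as given, and the names `MutationTargets` and `Statement` are hypothetical. The test case is assumed to be non-empty.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Random;
import java.util.Set;

/** Toy mutation-target picker: prefer statements that define NPE-relevant variables. */
final class MutationTargets {
    /** A test statement, reduced to its text and the variable it defines. */
    static final class Statement {
        final String text;
        final String definedVar;
        Statement(String text, String definedVar) {
            this.text = text;
            this.definedVar = definedVar;
        }
    }

    static Statement pick(List<Statement> testCase, Set<String> npeRelevantVars, Random rnd) {
        List<Statement> preferred = new ArrayList<>();
        for (Statement s : testCase) {
            if (npeRelevantVars.contains(s.definedVar)) preferred.add(s);
        }
        // Fall back to a uniform random choice when nothing is flagged.
        List<Statement> pool = preferred.isEmpty() ? testCase : preferred;
        return pool.get(rnd.nextInt(pool.size()));
    }
}
```

When exactly one statement defines a flagged variable, that statement is always chosen; when none is flagged, the picker behaves like plain random mutation.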

Dynamic Analysis

The goal of dynamic analysis is to guide the mutation generation process to actively explore NPE-prone areas by monitoring the execution results of test cases.

Method Under Test Refinement. NpeTest dynamically refines the set of methods under test using information from runtime exceptions. If an NPE occurs during test case execution, NpeTest gathers the method and the location where the NPE was triggered, and removes the corresponding target expression.
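The bookkeeping behind this refinement might look like the following sketch. The class `NpeTargets` and its use of a method name plus line number to identify a target expression are assumptions made for illustration; the source does not describe NpeTest's internal data structures.

```java
import java.util.HashMap;
import java.util.HashSet;
import java.util.Map;
import java.util.Set;

/** Toy bookkeeping of the remaining NPE targets for each method under test. */
final class NpeTargets {
    private final Map<String, Set<Integer>> remaining = new HashMap<>();

    /** Registers a target expression, identified here by method name and line. */
    void addTarget(String method, int line) {
        remaining.computeIfAbsent(method, m -> new HashSet<>()).add(line);
    }

    /** Called when a generated test throws an NPE at the given location. */
    void onNpe(String method, int line) {
        Set<Integer> targets = remaining.get(method);
        if (targets == null) return;
        targets.remove(line);
        if (targets.isEmpty()) remaining.remove(method); // nothing left to find here
    }

    /** Methods that still have undetected NPE targets. */
    Set<String> methodsUnderTest() {
        return new HashSet<>(remaining.keySet());
    }
}
```

In practice, the location could be read from the top frame of the caught exception via `Throwable.getStackTrace()`, using `StackTraceElement.getMethodName()` and `getLineNumber()`. Once a method has no targets left, it drops out of the set, which shrinks the search space for later mutations.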

Testcase-level NPE-likely Score Computation. NpeTest calculates and maintains a testcase-level NPE-likely score using the sequence of method calls executed by each test case. All test cases are annotated with the computed score, and NpeTest performs weighted sampling based on the score to select the test case to be mutated from the population.
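Weighted sampling of this kind is commonly implemented as roulette-wheel selection. The sketch below shows that general technique under the assumption that each test case carries a non-negative score; the class `WeightedSampler` is hypothetical and the way NpeTest computes the scores is not reproduced here.

```java
import java.util.Random;

/** Roulette-wheel selection over non-negative scores. */
final class WeightedSampler {
    /** Returns index i with probability scores[i] / sum(scores). */
    static int sample(double[] scores, Random rnd) {
        double total = 0;
        for (double s : scores) total += s;
        if (total <= 0) return rnd.nextInt(scores.length); // no signal: uniform choice

        double r = rnd.nextDouble() * total;
        double cumulative = 0;
        int lastPositive = 0;
        for (int i = 0; i < scores.length; i++) {
            if (scores[i] > 0) lastPositive = i;
            cumulative += scores[i];
            if (r < cumulative) return i;
        }
        return lastPositive; // guard against floating-point rounding
    }
}
```

A test case with score 0 is never selected while any other test case has a positive score, so mutation effort concentrates on test cases that already exercise NPE-prone calls.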

Experimental Results and Applicability

Evaluation Setting

We implemented NpeTest on top of the latest version of EvoSuite, which was last updated on GitHub in February 2024. For performance comparison, we selected EvoSuite and Randoop as baselines. We ran each tool 25 times on the benchmark classes, with a time budget of 5 minutes per run.

We collected real-world NPE benchmarks from the literature, resulting in a total of 96 buggy projects with 108 known NPEs.

Effectiveness of NpeTest

Averaged over 25 trials, NpeTest generated test cases that trigger the known NPEs with reproduction rates 45.2% and 22.4% higher than those of Randoop and EvoSuite, respectively. Counting the NPEs detected in at least one of the 25 trials, NpeTest found 73 NPEs, while Randoop and EvoSuite detected 25 and 59, respectively.

Correlation between Code Coverage and NPE Detection

To observe the correlation between code coverage and NPE detection ability, we evaluated EvoSuite with different options. The fine-tuned options significantly improved code coverage: EvoSuite achieved line coverage of 77.8% on average, compared to 64.5% with default options, a 20.8% improvement. However, even with the fine-tuned options, the reproduction rate of NPE detection improved only minimally, rising from 55.7% to 56.9%, an increase of just 2.2%.
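As a quick check on the last figure, assuming the 2.2% is a relative increase computed from the rounded rates quoted above:

$$
\frac{56.9 - 55.7}{55.7} = \frac{1.2}{55.7} \approx 0.0215 \approx 2.2\%
$$

In absolute terms, this is a gain of 1.2 percentage points.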

Interestingly, NpeTest demonstrated the best performance on NPE detection, as shown in Table 2, but achieved lower code coverage than both EvoSuite and EvoSuite with default options. The reason for the lower line coverage is that NpeTest has a smaller search space (i.e., fewer methods under test) than EvoSuite.

Industrial Case Study

To assess practical feasibility, we conducted a case study on a proprietary cryptographic library used within an IT company. This library consists of 84 public classes and 13,669 lines of code, with 76% line coverage achieved through manually written unit tests.

Surprisingly, the tools revealed a total of 91 previously unknown NPEs, all of which were confirmed as true positives by the library development team. NpeTest found 89 NPEs, including 9 that EvoSuite missed and 37 that Randoop missed. EvoSuite and Randoop detected 82 and 52 NPEs, respectively, and EvoSuite found only 2 NPEs that NpeTest did not detect.

Significance of the Research and Conclusion

Lessons Learned

The current unit test generators are not sufficient for NPE detection. EvoSuite failed to detect NPEs that could easily be detected by Randoop, and detected only 59 of the 108 NPEs. In contrast, NpeTest detected 73 unique NPEs.

Achieving high code coverage is not necessary to improve NPE detection capability. While the fine-tuned options of EvoSuite increased line coverage by 20.8%, they improved the reproduction rate of NPE detection by only 2.2%. In contrast, NpeTest achieved 18.8% less line coverage than EvoSuite but detected 15 unique NPEs that EvoSuite failed to find, and showed a 22.4% higher reproduction rate on average.

Adopting an integrated approach to detect NPEs in industrial software development is important. The case study emphasizes the importance of adopting a comprehensive approach to detecting NPEs in industrial software development. Despite the rigorous testing and development process in place, the three subject tools were able to detect a significant number of previously unknown NPEs.

Limitation

NpeTest has inherent limitations in detecting bugs other than NPEs. Based on its static and dynamic analysis, NpeTest intentionally skips methods that are free of NPEs and methods whose NPEs have all been detected during testing, so it may miss bugs that exist in the skipped methods.

Threats to Validity

To evaluate the best performance of EvoSuite, we used a set of fine-tuned options from SBST'22. However, these option values may not yield the best performance of EvoSuite on some of our benchmarks.

We excluded the programs that we failed to build in our experimental setting. The results might differ from what we observed if those programs were built successfully and included in the evaluation.

We ran our experiments for 5 minutes on each benchmark with 25 trials. This time budget may not be sufficient for either EvoSuite or NpeTest to achieve its best performance.

Conclusion

In this paper, we shared our experience in enhancing automatic unit test generation to find Java null pointer exceptions (NPEs) more effectively. NPEs are among the most common and critical errors in Java applications; however, existing unit test generation tools such as Randoop and EvoSuite are not sufficiently effective at catching NPEs.

Their primary strategy of achieving high code coverage does not necessarily result in triggering diverse NPEs in practice. In this paper, we detailed our observations on the limitations of current state-of-the-art unit testing tools in terms of NPE detection and introduced a new strategy to improve their effectiveness.

Our strategy utilizes both static and dynamic analysis to guide the test case generator to focus specifically on scenarios that are likely to trigger NPEs. We implemented this strategy on top of EvoSuite and evaluated our tool, NpeTest, on 108 NPE benchmarks collected from 96 real-world projects.

The results showed that our NPE-guidance strategy can increase EvoSuite's reproduction rate of the NPEs from 56.9% to 78.9%, a 38.7% improvement. Furthermore, NpeTest successfully detected 89 previously unknown NPEs from an industrial project.
