The Secret to Future Data Centers That Can Handle Heavy Traffic Without a Hitch: ‘eNLB’

– Innovation in High-Performance Software Load Balancer Technology

Samsung SDS has developed 'eNLB,' a high-performance load balancer capable of processing millions of packets per second without kernel bottlenecks. The core innovation of eNLB lies in its eBPF-based kernel bypass technology and a dynamic load balancing algorithm that effectively resolves runtime traffic imbalances. This technology reduces network latency, significantly enhances resource utilization, and delivers transformative improvements in service stability and response speed.

Load Balancing, Why Is It Gaining Attention Again?

The widespread adoption of IT devices and the rise of diverse AI-based services have accelerated the explosive growth of network traffic. Services managing massive amounts of traffic often rely on server pools comprising tens to hundreds of servers. The technology responsible for distributing user traffic efficiently and stably across these servers is called load balancing. While hardware load balancers are widely used in data centers, there is a growing demand for high-performance software load balancers capable of maximizing packet processing throughput per unit of time.

Traditional software load balancers process packets within the kernel's network stack, so performance degrades because of interrupt handling and memory copying between kernel and user space. In addition, a load balancer that receives a large volume of packets must spread user requests evenly across servers so that no single server becomes overwhelmed.

Samsung SDS’s Solution: eBPF-Based ‘eNLB’

This study introduces the eBPF Network Load Balancer (eNLB). eNLB improves performance by handing incoming traffic to an extended Berkeley Packet Filter (eBPF) program at the eXpress Data Path (XDP) hook, before the kernel's network stack processes it. It also employs Dynamic Load Balancing to distribute packets uniformly across servers.

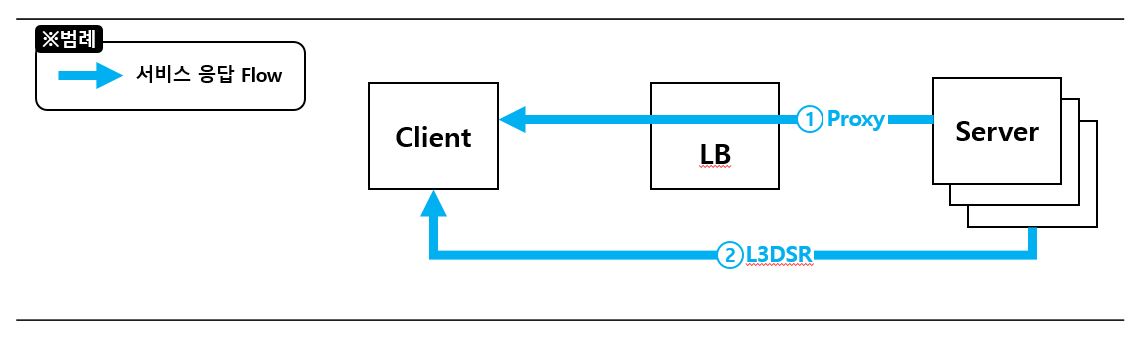

eNLB, an eBPF program operating in kernel space, supports two operating modes based on how the server responds after receiving service request packets* from the client through eNLB, as illustrated in Figure 1. The first mode is Proxy mode, where the server's response packets are routed through eNLB before being sent to the client. The second mode is L3DSR (Layer 3 Direct Server Return) mode, where the server sends its response packets directly to the client without passing through eNLB. These modes differ not only in how they handle the server's response packets but also in how client service request packets are ultimately delivered to the server via eNLB.

* Packet: The term denotes the data unit in the TCP protocol. In this document, 'packet' is also used consistently for the data unit of the UDP protocol, which is technically called a 'datagram.'

[Figure 1] eNLB Operation Modes Based on Server Response Methods

Proxy vs. L3DSR - Two Processing Methods

L3DSR load balancing uses IP tunneling between the load balancer and the server, which removes constraints imposed by physical circuits and location: the load balancer and the servers do not need to share the same Layer 2 network. Because tunneling encapsulates the original packet, this method reduces the effective MTU size. In this mode, eNLB uses IP tunneling to forward packets received from the client to the server. Once the server receives a packet, it sends the response directly back to the client without routing it through the load balancer. Since outbound traffic does not pass through the load balancer, the load balancer consumes fewer resources, bandwidth stays high, and latency stays low.
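The MTU reduction mentioned above can be made concrete with a small worked example. The article does not state which tunneling protocol eNLB uses, so this sketch assumes plain IPv4-in-IPv4 (IPIP) encapsulation on a 1500-byte Ethernet link; other tunnel types add more overhead. All names and constants are illustrative.

```python
# Illustrative arithmetic only. Assumptions (not from the article):
# IPv4-in-IPv4 tunneling, no IP or TCP options, 1500-byte link MTU.
LINK_MTU = 1500          # typical Ethernet MTU in bytes
OUTER_IPV4_HEADER = 20   # outer header added by the tunnel
INNER_IPV4_HEADER = 20   # header of the original (encapsulated) packet
TCP_HEADER = 20          # TCP header without options


def effective_mtu(link_mtu: int, encap_overhead: int) -> int:
    """Bytes left for the original packet after the tunnel header is added."""
    return link_mtu - encap_overhead


def max_tcp_payload(inner_mtu: int) -> int:
    """Largest TCP payload that fits in one inner packet."""
    return inner_mtu - INNER_IPV4_HEADER - TCP_HEADER


inner_mtu = effective_mtu(LINK_MTU, OUTER_IPV4_HEADER)
print(inner_mtu)                   # 1480
print(max_tcp_payload(inner_mtu))  # 1440
```

Under these assumptions, clients or servers that keep sending 1500-byte packets would trigger fragmentation or drops inside the tunnel, which is why deployments of this kind usually lower the MTU or MSS on the tunneled path.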

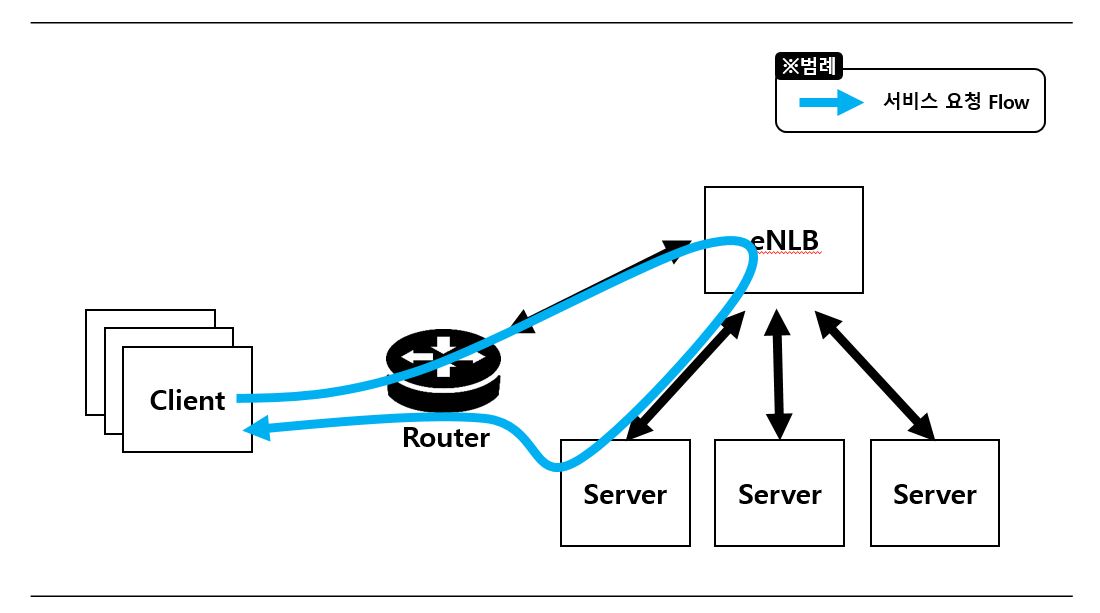

[Figure 2] Packet Flow Diagram in L3DSR Mode

In Proxy mode, load balancing operates as follows. As shown in Figure 3, eNLB first determines the server to which the service request packets will be forwarded. It then sends the packets to that server using NAT (Network Address Translation)*. When the server's response packets return to eNLB, NAT is performed again to forward them to the client.

When eNLB first receives service request packets sent by a client, it creates an Ingress NAT policy that forwards the packets to the designated server and registers this policy in the BPF Map. At the same time, eNLB generates and registers an Egress NAT policy that sends the response packets from that server back to the client. From this point onward, load balancing is performed by looking up the Ingress/Egress NAT policies registered in the BPF Map.

* NAT: A technology that rewrites the source/destination IP address and port number of IP packets to facilitate network traffic exchange
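The Ingress/Egress policy pair described above can be sketched in user-space Python, with a dictionary standing in for the BPF Map. This is not eNLB's implementation: the key layout, the choice to rewrite both source and destination (full NAT), and every name below are assumptions made for illustration.

```python
from typing import Dict, NamedTuple, Optional


class FiveTuple(NamedTuple):
    src_ip: str
    src_port: int
    dst_ip: str
    dst_port: int
    proto: str


class Rewrite(NamedTuple):
    src_ip: str
    src_port: int
    dst_ip: str
    dst_port: int


# Hypothetical stand-in for the BPF Map that holds NAT policies.
nat_map: Dict[FiveTuple, Rewrite] = {}


def register_flow(client_ip: str, client_port: int, vip: str, vip_port: int,
                  lb_ip: str, lb_port: int, server_ip: str, server_port: int,
                  proto: str = "udp") -> None:
    """On the first request packet, register both policies at once."""
    # Ingress: client -> VIP becomes LB -> server.
    nat_map[FiveTuple(client_ip, client_port, vip, vip_port, proto)] = \
        Rewrite(lb_ip, lb_port, server_ip, server_port)
    # Egress: server -> LB becomes VIP -> client.
    nat_map[FiveTuple(server_ip, server_port, lb_ip, lb_port, proto)] = \
        Rewrite(vip, vip_port, client_ip, client_port)


def translate(pkt: FiveTuple) -> Optional[FiveTuple]:
    """Look up the policy for a packet and return the rewritten header."""
    rule = nat_map.get(pkt)
    if rule is None:
        return None  # no policy yet: a new flow would need server selection
    return FiveTuple(rule.src_ip, rule.src_port,
                     rule.dst_ip, rule.dst_port, pkt.proto)


# Example addresses are documentation ranges, not real deployments.
register_flow("198.51.100.7", 40000, "203.0.113.10", 53,
              "10.0.0.1", 50001, "10.0.0.21", 53)
request = FiveTuple("198.51.100.7", 40000, "203.0.113.10", 53, "udp")
response = FiveTuple("10.0.0.21", 53, "10.0.0.1", 50001, "udp")
print(translate(request))
print(translate(response))
```

Registering both directions up front means every later packet of the flow needs only a single map lookup, in either direction.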

[Figure 3] Packet Flow Diagram in Proxy Mode

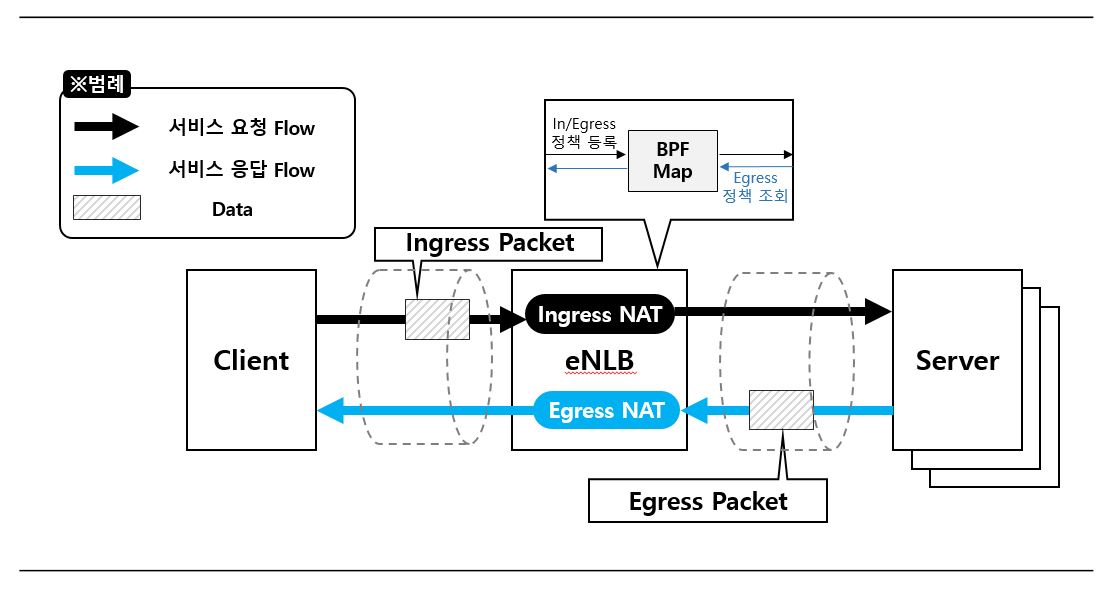

Intelligent Traffic Distribution: Dynamic Load Balancing

A load balancer must not only process incoming packets quickly but also distribute traffic uniformly across all servers. If packets are not evenly distributed and are concentrated on specific servers, service latency increases, and in severe cases, it may lead to server failures.

Maglev Hashing ensures that all servers are included in the lookup table at nearly equal ratios, guaranteeing uniform distribution of client packets across servers. However, it does not address runtime load imbalance issues, such as sudden increases in traffic directed toward specific servers.
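To illustrate why the lookup table ends up with nearly equal shares, here is a minimal sketch of Maglev-style table population as it is commonly described. It is not taken from eNLB: SHA-256 is used only as a convenient stand-in hash, the table size of 251 is an arbitrary small prime, and the function names are hypothetical.

```python
import hashlib
from typing import List


def _hash(name: str, seed: str) -> int:
    """Stand-in hash (assumption); a real data path would use a faster one."""
    digest = hashlib.sha256((seed + name).encode()).digest()
    return int.from_bytes(digest[:8], "big")


def build_maglev_table(backends: List[str], table_size: int = 251) -> List[int]:
    """Fill a lookup table with backend indices.

    table_size must be prime so that every backend's (offset, skip)
    sequence visits every slot.
    """
    if not backends:
        raise ValueError("at least one backend is required")
    offsets = [_hash(b, "offset") % table_size for b in backends]
    skips = [_hash(b, "skip") % (table_size - 1) + 1 for b in backends]
    next_pos = [0] * len(backends)   # position in each backend's preference list
    table = [-1] * table_size        # -1 marks an empty slot
    filled = 0
    while True:
        # Backends take turns claiming their next preferred empty slot.
        for i in range(len(backends)):
            slot = (offsets[i] + next_pos[i] * skips[i]) % table_size
            while table[slot] != -1:
                next_pos[i] += 1
                slot = (offsets[i] + next_pos[i] * skips[i]) % table_size
            table[slot] = i
            next_pos[i] += 1
            filled += 1
            if filled == table_size:
                return table


def pick_backend(table: List[int], backends: List[str], flow_key: str) -> str:
    """Map a flow identifier (for example, its 5-tuple as a string) to a backend."""
    return backends[table[_hash(flow_key, "flow") % len(table)]]


servers = ["server-a", "server-b", "server-c"]
table = build_maglev_table(servers)
print([table.count(i) for i in range(len(servers))])
print(pick_backend(table, servers, "198.51.100.7:40000->203.0.113.10:53/udp"))
```

Because the backends claim slots in turn, their slot counts differ by at most one. The table is static once built, however, so it cannot react when a few heavy flows happen to land on the same server, which is the runtime imbalance discussed next.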

L4 load balancers process tens of thousands to millions of packets per second in real time. Computationally intensive machine learning or AI models are a poor fit for balancing traffic at this rate: inference is too expensive on the packet path, and because user traffic changes dynamically, model-based predictions may lag behind the actual data. Therefore, eNLB measures load imbalance across servers at runtime and performs rebalancing based on these measurements.

To ensure uniform distribution of packets, eNLB derives weights for each server and performs load balancing, which improves fairness in the traffic received by each server.
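The article does not give the formula eNLB uses to derive weights. As a rough sketch of the idea only, the example below assumes an inverse-load rule: servers that received more traffic in the last measurement window get a smaller share of new traffic. The function name and the rule itself are assumptions, not eNLB's algorithm.

```python
from typing import Dict


def derive_weights(observed: Dict[str, int]) -> Dict[str, float]:
    """Return per-server weights that sum to 1.

    Assumed rule: weight is proportional to 1 / observed load, so a
    lightly loaded server is favored for new flows.
    """
    if not observed:
        return {}
    # max(load, 1) avoids division by zero for idle servers.
    raw = {server: 1.0 / max(load, 1) for server, load in observed.items()}
    total = sum(raw.values())
    return {server: value / total for server, value in raw.items()}


# Made-up packet counts for one measurement window.
weights = derive_weights({"server-a": 300, "server-b": 100, "server-c": 100})
for server, weight in weights.items():
    print(server, round(weight, 3))
```

In a design like this, the weights would then decide how many lookup-table slots (or new flows) each server receives in the next window, while existing flows keep their current server.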

[Figure 4] Packet Distribution Across Servers by Algorithm

Performance Experiment - How Fast and Efficient Is It?

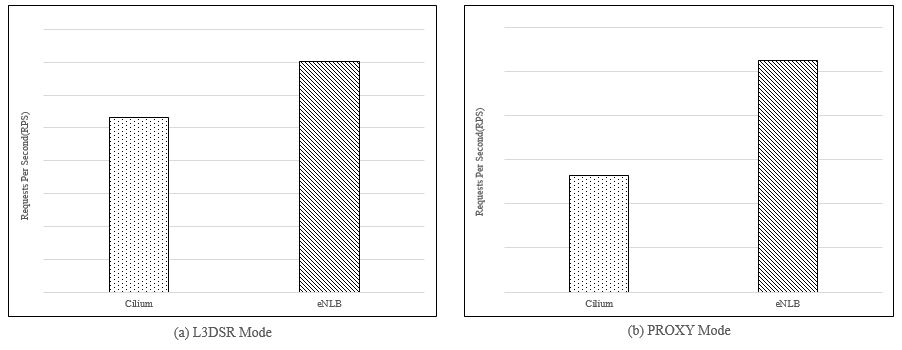

To compare the performance between load balancers, we generated load on eNLB and Cilium, measuring the number of user requests processed per unit time and latency. The benchmark results demonstrated that eNLB processed more packets per unit time than Cilium in both L3DSR and Proxy modes.

[Figure 5] Performance Comparison Between eNLB and Cilium (higher is better)

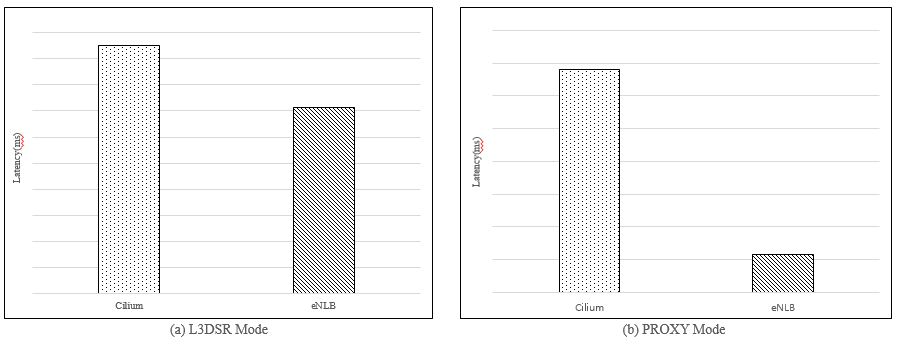

Additionally, we observed reduced average latency in both L3DSR and Proxy modes.

[Figure 6] Latency Comparison Between eNLB and Cilium (lower is better)

The significant performance improvement over Cilium was achieved by minimizing the number of BPF Map references during packet processing and by accessing packet data directly through pointers instead of calling kernel functions.

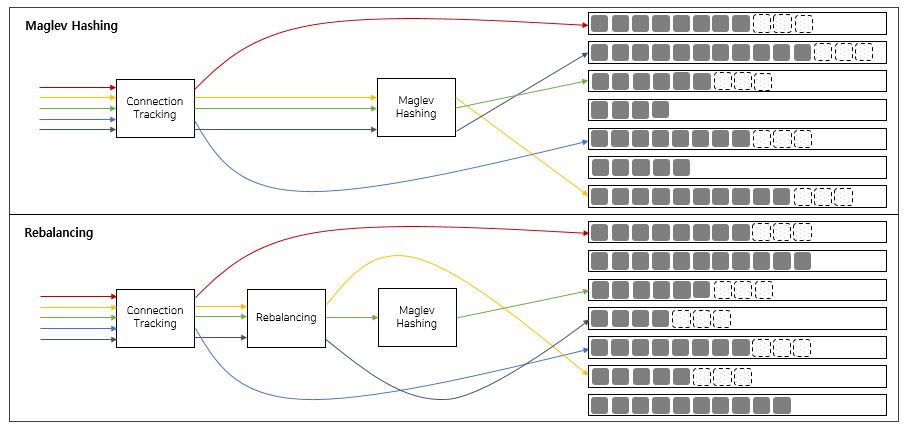

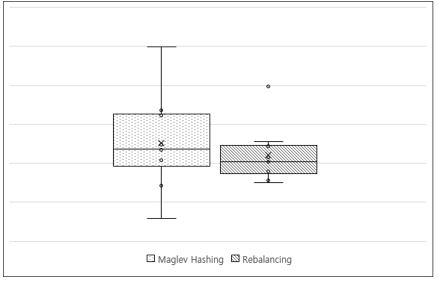

Second, to evaluate whether Dynamic Load Balancing keeps the load uniform across servers at runtime, we compared a control group that used only Maglev Hashing with an experimental group that also applied Rebalancing.

After generating traffic for a set period, we analyzed the amount of traffic received by each server. The results demonstrated that using Rebalancing in addition to Maglev Hashing allowed the load balancer to distribute packets more evenly compared to using Maglev Hashing alone.
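The article does not say which metric was used to judge evenness. One common choice is Jain's fairness index, which equals 1.0 for a perfectly even distribution and 1/n when a single one of n servers receives all traffic. The sketch below uses made-up counts for illustration; they are not the measurements from this experiment.

```python
from typing import Sequence


def jain_fairness(counts: Sequence[float]) -> float:
    """Jain's fairness index: (sum x)^2 / (n * sum x^2)."""
    n = len(counts)
    sum_sq = sum(c * c for c in counts)
    if n == 0 or sum_sq == 0:
        return 1.0  # no traffic at all is treated as trivially fair
    return sum(counts) ** 2 / (n * sum_sq)


# Hypothetical per-server request counts, not experimental results.
print(jain_fairness([100, 100, 100, 100]))  # 1.0
print(jain_fairness([400, 0, 0, 0]))        # 0.25
```

Computing such an index on the per-server totals of the control and experimental groups gives a single number for comparing how evenly each configuration spreads traffic.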

[Figure 7] The distribution of user requests across servers by algorithm

Significance of the Research

This study confirmed that the performance of a software load balancer can be enhanced by processing packets through eNLB, implemented with eBPF at the XDP layer, before they are handled by the kernel's network stack. Additionally, to overcome the limitations of Maglev Hashing, the study measured load imbalance at runtime and implemented a rebalancing mechanism that distributes load evenly across servers instead of letting it concentrate on specific ones. We hope that these findings will contribute to enhancing the performance of software load balancers.