Which One Is Real? Generating and Detecting Deepfakes

Let’s take a detailed look at what a deepfake is.

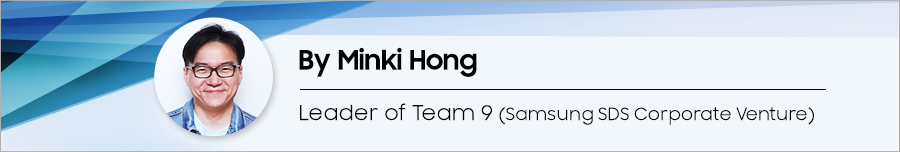

The word “deepfake” is said to have originated from the nickname of a user who uploaded a synthetic image to the US online community Reddit in 2017. However, the related technology first appeared in Ian Goodfellow’s paper* in 2014.

* Ian Goodfellow is an AI researcher who, at the time of writing, had been working at Apple since 2019 after stints at Google and OpenAI. His famous paper, ‘Generative Adversarial Nets’ (NIPS 2014), was published while Goodfellow was at the Université de Montréal (it had been cited 42,332 times as of the day this article was written).

Generative Adversarial Network (GAN), now a widely known concept in the industry, is an AI model for generating synthetic (fake) images of things that do not exist in the real world; GANs for images are commonly built from Convolutional Neural Networks (CNNs). Since its introduction, other researchers have developed a variety of related techniques, producing increasingly sophisticated synthetic images.

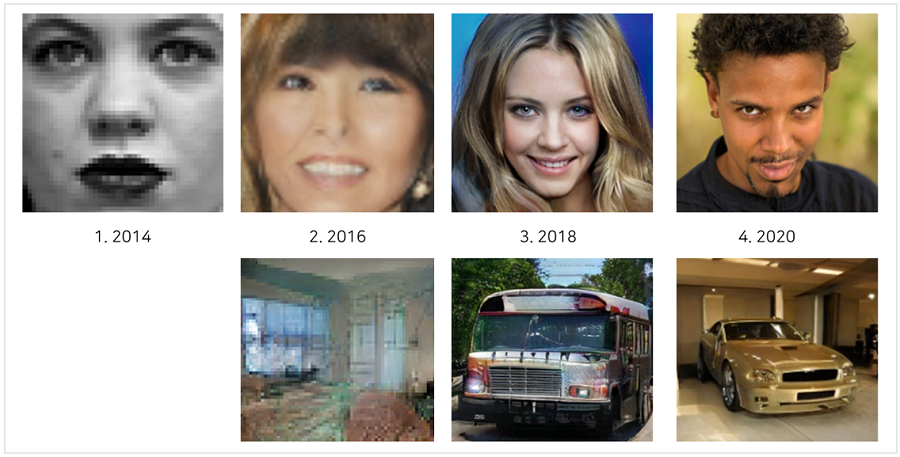

Machine learning is the first step in leveraging AI. Machine learning models learn from given datasets, identify their features, and carry out the required tasks on their own. Unlike conventional rule-based systems, which follow instructions written in advance, AI models find patterns across various features and adjust their behavior accordingly. For instance, a machine learning model can learn a lesson such as “I need to cut down to one cup of coffee a day in order to fall asleep before midnight.”

What’s intriguing is that the learning method varies with the difficulty and nature of each problem. For instance, AI models sometimes need supervision by a human teacher to learn, and at other times they can learn on their own. Accordingly, machine learning can be categorized into supervised, unsupervised, and reinforcement learning, depending on the case.

Machine learning: Supervised, Unsupervised, and Reinforcement Learning

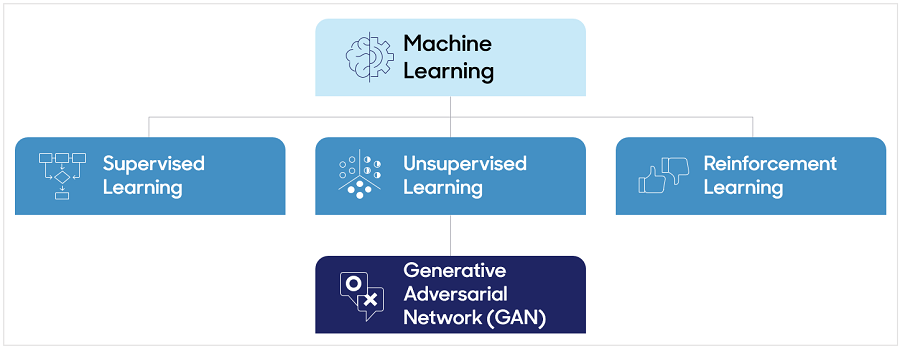

First, let’s look at supervised learning. It refers to algorithms that learn from labeled datasets. For instance, a model is given several images of cats and giraffes, along with labels indicating which animal appears in each image. After this training, the model can tell whether a new image shows a cat or a giraffe.
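The cat-versus-giraffe example can be made concrete with one of the simplest supervised methods, a nearest-centroid classifier. This is a minimal sketch under stated assumptions: instead of raw images, each animal is described by two hypothetical numeric features (neck length and spot coverage) with made-up values, and real image classifiers would typically learn features directly from pixels, for example with a CNN. The key point is that the training data carries labels.

```python
from math import dist

# Hypothetical labeled training data: (neck_length_m, spot_coverage) -> label.
# Feature names and values are illustrative assumptions, not real measurements.
TRAINING_DATA = [
    ((0.05, 0.10), "cat"),
    ((0.07, 0.05), "cat"),
    ((0.06, 0.20), "cat"),
    ((1.80, 0.70), "giraffe"),
    ((2.00, 0.65), "giraffe"),
    ((1.90, 0.75), "giraffe"),
]

def train_centroids(samples):
    """Learn one centroid (mean feature vector) per label from labeled samples."""
    sums, counts = {}, {}
    for features, label in samples:
        acc = sums.setdefault(label, [0.0] * len(features))
        for i, value in enumerate(features):
            acc[i] += value
        counts[label] = counts.get(label, 0) + 1
    return {label: tuple(v / counts[label] for v in acc) for label, acc in sums.items()}

def predict(centroids, features):
    """Return the label whose centroid is closest in Euclidean distance."""
    return min(centroids, key=lambda label: dist(centroids[label], features))

centroids = train_centroids(TRAINING_DATA)
print(predict(centroids, (1.75, 0.60)))  # giraffe
print(predict(centroids, (0.04, 0.15)))  # cat
```

Training here is just averaging the labeled examples of each class; prediction picks the closest class average.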

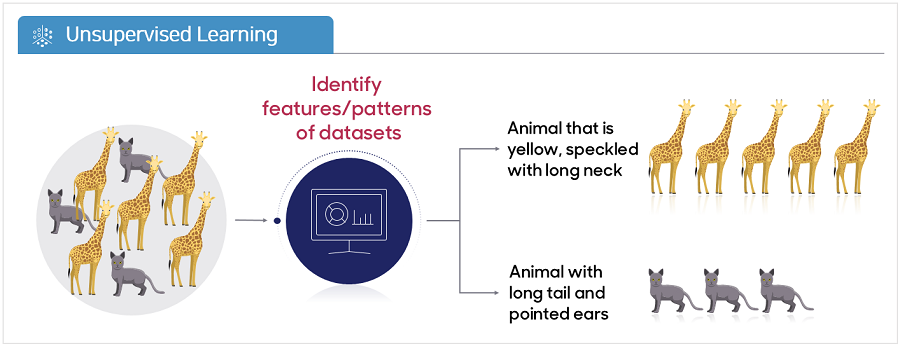

Next is unsupervised learning. Suppose you show photos of cats and giraffes to people who know little about these animals. Even without knowing the animals’ names, they can sort the images into two groups: long-necked animals with dark brown patches separated by cream-colored lines, and animals without those features. Likewise, unsupervised learning identifies features or patterns in datasets and groups them without labels. One of the best-known unsupervised learning techniques is GAN.
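The grouping described above is what a clustering algorithm does. As a minimal sketch (assuming the same kind of hypothetical two-number features as before, with no labels at all), plain k-means with two clusters separates the long-necked, spotted animals from the rest. The farthest-first initialization is an assumption made here to keep the result deterministic; k-means itself is a clustering method, not how GANs work.

```python
from math import dist

# Hypothetical unlabeled samples: (neck_length_m, spot_coverage). No animal names are given.
SAMPLES = [(0.05, 0.10), (0.07, 0.05), (0.06, 0.20), (1.80, 0.70), (2.00, 0.65), (1.90, 0.75)]

def farthest_first(points, k):
    """Deterministic initialization: start at the first point, then keep adding the farthest point."""
    centers = [points[0]]
    while len(centers) < k:
        centers.append(max(points, key=lambda p: min(dist(p, c) for c in centers)))
    return centers

def kmeans(points, k, iterations=20):
    """Plain k-means: assign points to the nearest center, then move centers to cluster means."""
    centers = farthest_first(points, k)
    for _ in range(iterations):
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda i: dist(p, centers[i]))
            clusters[nearest].append(p)
        new_centers = [
            tuple(sum(axis) / len(cluster) for axis in zip(*cluster)) if cluster else centers[i]
            for i, cluster in enumerate(clusters)
        ]
        if new_centers == centers:  # centers stopped moving, so assignments are stable
            break
        centers = new_centers
    labels = [min(range(k), key=lambda i: dist(p, centers[i])) for p in points]
    return centers, labels

centers, labels = kmeans(SAMPLES, k=2)
print(labels)  # [0, 0, 0, 1, 1, 1]
```

The cluster numbers 0 and 1 carry no meaning on their own; a person still has to look at each group to name it “cat” or “giraffe.”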

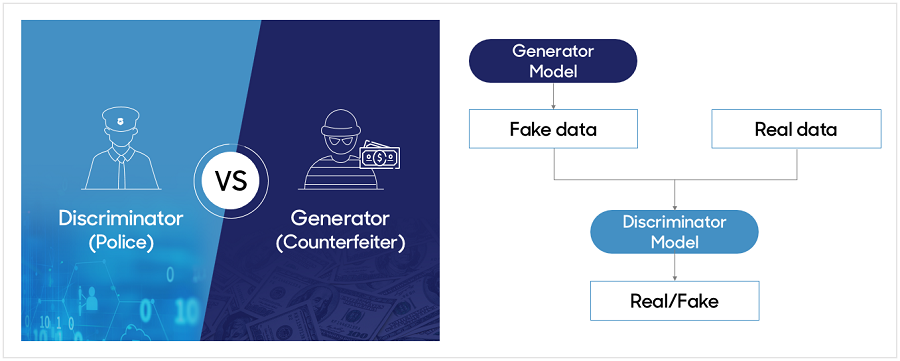

Goodfellow, who first proposed GAN, compared it to a game between police officers and counterfeiters*.

* Generative Adversarial Nets (Goodfellow et al., NIPS 2014)

Counterfeiters try to fool the police with realistic fake currency, while the police try to tell real money from fake in order to catch the criminals. As this competition continues, both sides improve, until the counterfeiters can make fake currency that the police can no longer distinguish from real currency.

In a GAN, the generator plays the role of the counterfeiters, and the discriminator plays the role of the police. The two models are trained adversarially: the generator tries to create fake data realistic enough to go undetected, while the discriminator tries to distinguish fake data from real data. The generator tries to maximize the probability that the discriminator makes a mistake, and the discriminator tries to minimize it, so each model’s progress forces the other to improve. Ideally, this competition continues until the generator produces data indistinguishable from real data and the discriminator can no longer tell the two apart. This adversarial pressure is what drives the rapid improvement in the quality of the generated data.
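The original GAN paper expresses this game as a minimax objective over the discriminator D and generator G, where x is real data and z is random noise fed to the generator:

$$\min_G \max_D V(D, G) = \mathbb{E}_{x \sim p_{\text{data}}}[\log D(x)] + \mathbb{E}_{z \sim p_z}[\log(1 - D(G(z)))]$$

The sketch below only computes the two losses from discriminator outputs (probabilities that a sample is real); it does not train any networks, and the function names are illustrative. The generator loss uses the “non-saturating” form suggested in the paper (maximize log D(G(z)) instead of minimizing log(1 − D(G(z)))).

```python
import math

def discriminator_loss(d_real, d_fake):
    """Binary cross-entropy for D: real samples should score 1, generated samples 0.

    d_real: D(x) for a batch of real samples.
    d_fake: D(G(z)) for a batch of generated samples.
    Minimizing this loss corresponds to maximizing V(D, G) over D.
    """
    real_term = sum(math.log(p) for p in d_real) / len(d_real)
    fake_term = sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return -(real_term + fake_term)

def generator_loss(d_fake):
    """Non-saturating generator loss: G wants D(G(z)) to be close to 1."""
    return -sum(math.log(p) for p in d_fake) / len(d_fake)

# A confident, correct discriminator has a low loss, while the generator's loss is high.
print(discriminator_loss([0.9, 0.8], [0.1, 0.2]))
print(generator_loss([0.1, 0.2]))
# At the equilibrium described above, D outputs 0.5 for everything.
print(discriminator_loss([0.5], [0.5]))  # log 4, about 1.386
```

When D can only output 0.5 (it cannot tell real from fake), the discriminator loss settles at log 4, which matches the paper’s result that the global optimum of the game is reached when the generated distribution equals the real one.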

Deepfake Detection Techniques

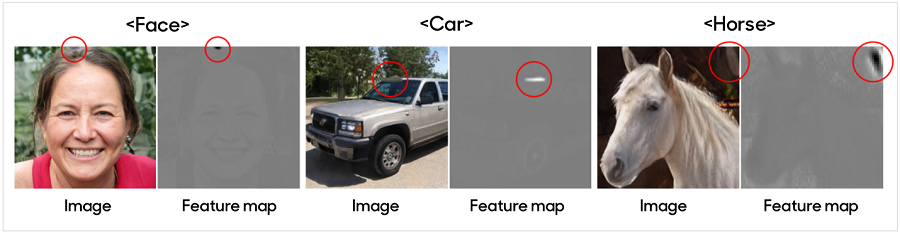

Next, let’s look at deepfake detection techniques. There are three main approaches. The first is image-based detection, which looks for traces left in the pixels themselves. In the images below, the spots on the woman’s head and on the car’s windshield and roof are pixel-level artifacts characteristic of the fake images.
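To make “pixel-level artifacts” more concrete, here is a minimal sketch of one hand-crafted cue: the average strength of a high-pass (Laplacian) residual, which strips away smooth image content and keeps fine pixel-to-pixel variation, where such artifacts can show up. The function name is hypothetical and the input is assumed to be a grayscale image given as a list of rows. This single number is not a detector by itself; practical image-based detectors usually learn these cues with a trained classifier such as a CNN.

```python
def laplacian_residual_energy(gray):
    """Mean absolute response of a 3x3 Laplacian high-pass filter over interior pixels.

    gray: 2D list of grayscale intensities (rows of equal length, at least 3x3).
    Flat regions and linear gradients give 0; sharp pixel-level patterns give large values.
    """
    h, w = len(gray), len(gray[0])
    total = 0.0
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            # Laplacian: 4 * center minus the four direct neighbors
            response = (4 * gray[y][x] - gray[y - 1][x] - gray[y + 1][x]
                        - gray[y][x - 1] - gray[y][x + 1])
            total += abs(response)
    return total / ((h - 2) * (w - 2))

smooth = [[10 * x for x in range(5)] for _ in range(5)]                     # gentle gradient
noisy = [[255 if (x + y) % 2 else 0 for x in range(5)] for y in range(5)]  # pixel-level pattern
print(laplacian_residual_energy(smooth))  # 0.0
print(laplacian_residual_energy(noisy))   # 1020.0
```

A detector built on this idea would compare such residual statistics between real and generated images, rather than rely on any fixed threshold.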

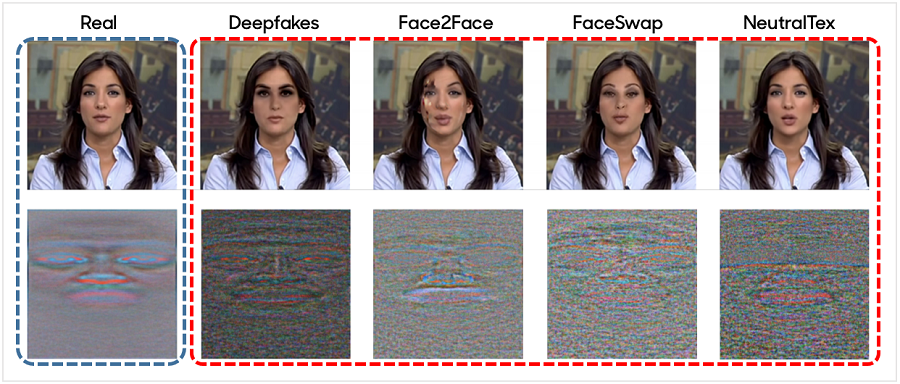

The second is physiological signal-based detection. This approach examines natural physiological cues on a person’s face, such as subtle changes in skin color, eye blinking, and shadows on the face. As the images below show, the computer finds differences in photoplethysmography (PPG) signals, the subtle periodic skin-color changes caused by blood flow, between a real person’s images and deepfake images.
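As a rough illustration of where a PPG signal comes from, the sketch below estimates a pulse rate from a video’s per-frame average green intensity over the face, a simplified form of remote PPG. Several assumptions apply: the face region has already been located and averaged per frame (that step is not shown), the green channel is used because it commonly carries the strongest pulse signal, and the 0.7 to 4.0 Hz search band (42 to 240 bpm) is an assumed plausible heart-rate range. The sketch only extracts the signal; a PPG-based detector would go further and check whether such signals look natural and consistent, which is where deepfakes can differ from real footage.

```python
import math

def estimate_pulse_bpm(green_means, fps, min_hz=0.7, max_hz=4.0):
    """Estimate pulse rate (beats per minute) from a per-frame mean green-channel trace.

    green_means: average green intensity of the face region in each frame (assumed precomputed).
    fps: video frame rate in frames per second.
    Returns the frequency with the most power inside [min_hz, max_hz], converted to bpm.
    """
    n = len(green_means)
    mean = sum(green_means) / n
    signal = [v - mean for v in green_means]  # remove the average brightness (DC component)
    best_freq, best_power = None, -1.0
    for k in range(1, n // 2 + 1):
        freq = k * fps / n  # frequency of DFT bin k, in Hz
        if not (min_hz <= freq <= max_hz):
            continue
        re = sum(s * math.cos(2 * math.pi * k * t / n) for t, s in enumerate(signal))
        im = -sum(s * math.sin(2 * math.pi * k * t / n) for t, s in enumerate(signal))
        power = re * re + im * im
        if power > best_power:
            best_freq, best_power = freq, power
    return None if best_freq is None else best_freq * 60.0

# Synthetic 10-second trace at 30 fps with a faint 1.2 Hz (72 bpm) pulse on top of the skin tone.
fps = 30
trace = [100 + 0.5 * math.sin(2 * math.pi * 1.2 * t / fps) for t in range(300)]
print(round(estimate_pulse_bpm(trace, fps)))  # 72
```

A plain DFT loop keeps the example dependency-free; on real video, the trace would be noisy and would usually be filtered before this step.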

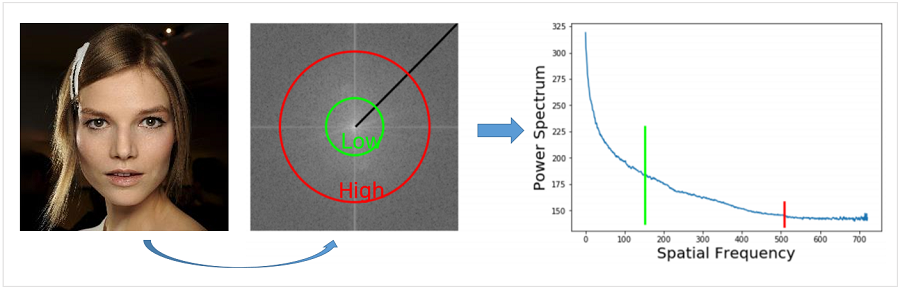

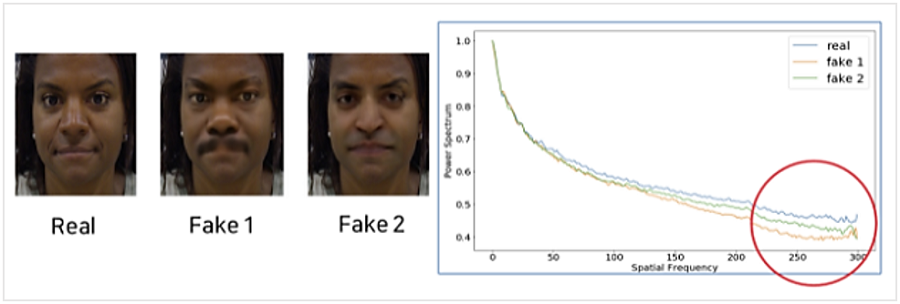

The third is frequency-based detection. In this approach, an image, which is a two-dimensional signal in the spatial domain, is converted into the frequency domain with the Fourier transform. The resulting two-dimensional spectrum is then reduced to a one-dimensional power spectrum so that real and deepfake images can be compared. Here, the word “domain” refers to a specific area or scope, not an Internet address.

If you compare the spectra of a real face and a deepfake, you can see a clear difference in the high-frequency range.
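The pipeline described above (2D Fourier transform, power spectrum, reduction to 1D) can be sketched as follows. The reduction step here averages the 2D power over rings of equal distance from the zero frequency (azimuthal averaging), which is one common way to obtain a 1D spectrum; treat that choice, the function names, and the grayscale list-of-rows input as assumptions. The naive DFT keeps the example self-contained but is only practical for tiny images; real code would use a fast FFT such as `numpy.fft.fft2`.

```python
import cmath
import math

def dft2(image):
    """Naive 2D discrete Fourier transform, O(N^4); fine only for tiny illustrative images."""
    h, w = len(image), len(image[0])
    return [
        [
            sum(
                image[y][x] * cmath.exp(-2j * math.pi * (u * y / h + v * x / w))
                for y in range(h)
                for x in range(w)
            )
            for v in range(w)
        ]
        for u in range(h)
    ]

def radial_power_spectrum(image):
    """Reduce the 2D power spectrum |F(u, v)|^2 to 1D by averaging over rings of equal radius.

    Index 0 is the zero frequency (overall brightness); larger indices are higher frequencies.
    """
    h, w = len(image), len(image[0])
    spectrum = dft2(image)
    sums, counts = {}, {}
    for u in range(h):
        for v in range(w):
            fu = u if u <= h // 2 else u - h  # map DFT index to a signed frequency
            fv = v if v <= w // 2 else v - w
            r = round(math.hypot(fu, fv))     # ring index: distance from zero frequency
            sums[r] = sums.get(r, 0.0) + abs(spectrum[u][v]) ** 2
            counts[r] = counts.get(r, 0) + 1
    return [sums[r] / counts[r] if r in counts else 0.0 for r in range(max(counts) + 1)]

flat = [[1.0] * 8 for _ in range(8)]                                      # no detail at all
checker = [[(-1.0) ** (x + y) for x in range(8)] for y in range(8)]       # finest possible detail
print([round(p, 3) for p in radial_power_spectrum(flat)])     # all power at index 0
print([round(p, 3) for p in radial_power_spectrum(checker)])  # all power at the last index
```

Comparing the tail (high indices) of such curves for real and generated faces is where the difference mentioned above would appear.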

The next article will explain cheapfakes, which are fake content created by humans rather than by AI models.

※ This article was written based on objective research findings and facts available as of the date of writing, and it may not represent the views of the company.