Foundational Dynamics for Innovation

Cloud computing has radically changed many industries and driven the development of new paradigms of software development and delivery. Enterprises and start-ups alike invest in cloud-native architecture to deliver more reliable, resilient services to their customers, reduce costs, and accelerate the pace of innovation.

Three fundamental dynamics drive that innovation:

- Removing the operational burden from development teams,

- Maximizing the operational agility of those teams through automation,

- Building communities of collaboration, which discover and develop new ways to extend existing platforms.

Combined, these establish an environment in which development teams can focus on the business value they produce and on delivering it faster. By minimizing the operational concerns of those teams, an enterprise can free up capacity to develop new solutions and new products, and to create value faster.

While these benefits are clear with public cloud, many enterprises struggle to transform private infrastructure into a private cloud with the same capabilities. The core of this transformation is a shift from cost-center management to value-center innovation. To “think like a cloud” is to adopt this value-center, service-organization paradigm, providing automatic infrastructure management to customers. By identifying and pursuing a few key principles that have made public cloud successful, private cloud initiatives can leverage existing datacenter investments to drive and accelerate innovation.

Pervasive Automation and Self-Service

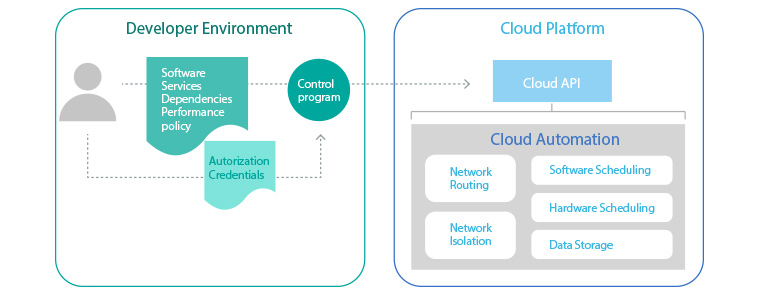

In the cloud, software teams deploy systems autonomously, on platforms that programs alone control, requiring no human interaction. This automation extends all the way up, to the creation of virtual database services, and down, to the creation of virtual networks.

This is done through APIs that govern capacity on a cloud infrastructure rather than specifying individual resources. Infrastructure capacity must be managed as a common pool of computing power, rather than as discretely assigned resources. When software teams deploy, the cloud provider API registers the demand to activate the software service and configures resources automatically.
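The idea of registering demand against a common pool, rather than assigning specific machines, can be illustrated with a minimal sketch. The `CapacityPool` class, its method names, and the abstract "CPU units" are assumptions made for illustration; they do not correspond to any real cloud provider API.

```python
from dataclasses import dataclass, field


@dataclass
class CapacityPool:
    """Hypothetical shared pool of compute, measured in abstract CPU units."""
    total_cpu: int
    allocations: dict = field(default_factory=dict)

    @property
    def free_cpu(self) -> int:
        # Capacity not yet committed to any service.
        return self.total_cpu - sum(self.allocations.values())

    def request(self, service: str, cpu: int) -> bool:
        """Register demand for a service; grant it only if the pool can cover it."""
        current = self.allocations.get(service, 0)
        if cpu - current > self.free_cpu:
            return False  # demand exceeds what the shared pool has left
        self.allocations[service] = cpu
        return True

    def release(self, service: str) -> None:
        """Return a service's capacity to the pool."""
        self.allocations.pop(service, None)


pool = CapacityPool(total_cpu=64)
pool.request("checkout", 16)
pool.request("search", 40)
print(pool.free_cpu)  # 8
```

The caller never names a machine: it states how much capacity a service needs, and the pool decides whether that demand can be met.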

Cloud provider APIs are fundamentally software services, which govern other software and hardware configuration. Software teams rely on APIs to declare how they want their software to run; the cloud provider’s software ensures reliable, resilient provision of that service. The “as a service” paradigm is implemented through these APIs, allowing developers to create all kinds of services, for example:

- automated virtual machine deployments (e.g. EC2 Auto Scaling groups)

- databases (e.g. Amazon Aurora on RDS)

- content delivery networks (e.g. CloudFront)

- data object storage (e.g. S3)

- stored-data queries (e.g. Amazon Athena)

- lightweight “serverless” functionality (e.g. AWS Lambda)

All of these services are extensions of fundamental infrastructure and software automation. Cloud platforms give developers API access to create and manage these services.

Demand by end-users drives the commitment of specific resources to delivering the software’s service; when demand spikes, more resources are automatically made available, and when demand declines, those resources are reclaimed. No human operator is involved: the process is governed by policy.

Software teams then focus on only two goals: improving their product with new features and bug fixes, and improving the quality of service by tuning the policy. That policy often declares specific goals: for example, fast response time of their service, and upper limits of operating cost. The cloud platform’s own automation plans the assignment of resources to deliver service based on that policy.
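A policy of this kind, with a response-time goal and a cost ceiling, could be sketched roughly as follows. The `ScalingPolicy` fields, the `plan_replicas` function, the prices, and the simple proportional scaling rule are all assumptions chosen for illustration, not the behavior of any particular cloud platform.

```python
import math
from dataclasses import dataclass


@dataclass
class ScalingPolicy:
    target_latency_ms: float   # desired response time of the service
    max_hourly_cost: float     # upper limit on operating cost
    cost_per_replica: float    # hypothetical hourly price of one replica
    min_replicas: int = 1


def plan_replicas(policy: ScalingPolicy, current_replicas: int,
                  observed_latency_ms: float) -> int:
    """Return the replica count the policy asks for, given observed latency."""
    # Assumed rule: scale in proportion to how far latency is from the target.
    desired = math.ceil(
        current_replicas * observed_latency_ms / policy.target_latency_ms
    )
    # The cost ceiling caps how many replicas the policy may commit.
    affordable = int(policy.max_hourly_cost // policy.cost_per_replica)
    return max(policy.min_replicas, min(desired, affordable))


policy = ScalingPolicy(target_latency_ms=200, max_hourly_cost=5.0,
                       cost_per_replica=0.5)
print(plan_replicas(policy, current_replicas=4, observed_latency_ms=400))   # 8
print(plan_replicas(policy, current_replicas=4, observed_latency_ms=1000))  # 10
print(plan_replicas(policy, current_replicas=4, observed_latency_ms=50))    # 1
```

In the second call the latency goal alone would ask for 20 replicas, but the cost limit holds the plan at 10. Tuning the policy means changing these two numbers, not managing machines.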

Cloud technology enables software teams to innovate by relaxing operational constraints. They develop and deploy faster because they spend less time thinking about deployment and operations. The cloud platform removes operational complexity by letting developers automate their desired outcomes, which eases the creation of new services and reduces the cost of more frequent deployments.

In a future report, we’ll outline the automation and infrastructure design that delivers this capability with Kubernetes.

Multi-tenancy as a Service Quality Enabler

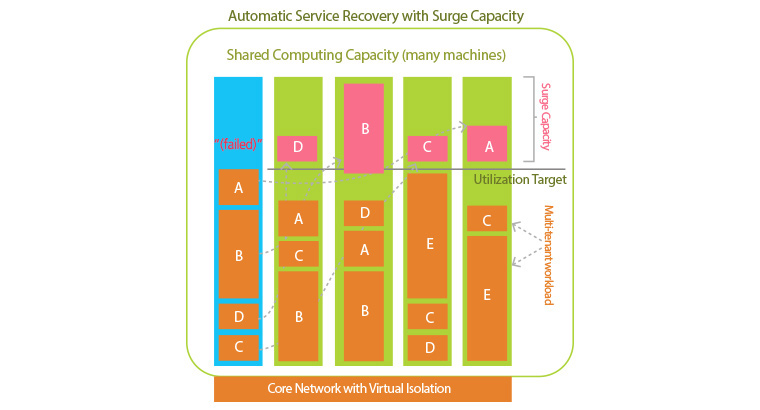

Amazon famously initiated the cloud revolution when they noticed that their under-utilized data-center investments would yield substantially more value if the infrastructure were shared with paying customers. The fundamental observation is that no two workloads have identical performance demands. Even the same software, used by two different customers, would exhibit different requirements based on time-of-day activity trends. Optimal utilization of a single, generic hardware platform requires balanced performance demands, which is typically achieved by sharing the capacity across multiple workloads: hence, multi-tenancy.
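A small worked example shows why workloads with different time-of-day trends share capacity well. The demand figures below are invented for illustration and are not measurements from any real system.

```python
# Hypothetical CPU demand for two tenants across six time slots in a day.
daytime_tenant = [8, 10, 12, 6, 2, 1]
nighttime_tenant = [1, 2, 3, 7, 11, 12]

# Single-tenant sizing: each tenant gets hardware for its own peak.
dedicated_capacity = max(daytime_tenant) + max(nighttime_tenant)

# Multi-tenant sizing: the shared platform only needs the combined peak.
combined_demand = [a + b for a, b in zip(daytime_tenant, nighttime_tenant)]
shared_capacity = max(combined_demand)

print(dedicated_capacity)  # 24
print(shared_capacity)     # 15
```

Because the two peaks fall at different times, the shared platform needs 15 units instead of 24 to serve the same demand.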

Cloud platforms also deliver optimal service for their customers through multi-tenancy. By adopting cloud-native software architectures, e.g. microservices, customer workloads can be distributed across many machines, reducing the impact of failure, and can scale up automatically to meet end-user demand. These microservices usually do not demand the entire capacity of a machine, so enforcing single-tenant allocation would be wasteful. While single-tenant applications can still be supported, they constrain utilization and prevent other workloads from sharing the same capacity.

Cloud platform automation and cloud-native software are both designed with failure in mind, so that both can recover automatically. When infrastructure failures occur, the cloud platform reschedules the software workload onto new equipment, and automatically re-configures networking and other resources to restore service operation. Cloud-native software teams design their products to self-heal, so that when their software is automatically moved to a new machine, it resumes normal operation.
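The rescheduling step can be sketched as a small function. The node names, the capacity figures, and the "most free capacity first" placement rule are assumptions for illustration; real schedulers weigh many more factors.

```python
def reschedule(placements, capacity, failed_node):
    """Move workloads off a failed node onto the healthy node with most free CPU.

    placements: {workload: (node, cpu)}; capacity: {node: total_cpu}.
    """
    healthy = {n: c for n, c in capacity.items() if n != failed_node}
    if not healthy:
        raise RuntimeError("no healthy nodes available")

    # Tally what the surviving workloads already use on each healthy node.
    used = {n: 0 for n in healthy}
    for node, cpu in placements.values():
        if node in healthy:
            used[node] += cpu

    new_placements = {}
    for workload, (node, cpu) in placements.items():
        if node != failed_node:
            new_placements[workload] = (node, cpu)
            continue
        # Assumed rule: pick the healthy node with the most free capacity.
        target = max(healthy, key=lambda n: healthy[n] - used[n])
        if healthy[target] - used[target] < cpu:
            raise RuntimeError(f"not enough capacity to reschedule {workload}")
        used[target] += cpu
        new_placements[workload] = (target, cpu)
    return new_placements


capacity = {"node-a": 8, "node-b": 8, "node-c": 8}
placements = {
    "api": ("node-a", 4),
    "worker": ("node-a", 2),
    "cache": ("node-b", 3),
}
print(reschedule(placements, capacity, failed_node="node-a"))
# {'api': ('node-c', 4), 'worker': ('node-b', 2), 'cache': ('node-b', 3)}
```

The software itself must still tolerate being restarted elsewhere; the sketch only covers the platform's side of the recovery.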

With multi-tenant workloads and suitable isolation, the infrastructure investment serves a more diverse mix of workloads at higher utilization, and customers benefit from better quality of service for their end-users. All of this is governed by the automation behind the APIs, so software teams can expect excellent performance while focusing their time on creating value in their software.

In a future report, we’ll describe several design patterns for cloud-native software, including Functions as a Service, the implementation of microservices in Kubernetes, and models for multi-tenant isolation.

Community Collaboration: a Fountain of Innovation

Cloud providers benefit greatly from their customers, who publish designs and working programs that extend the features of each platform. This knowledge and innovation is often freely shared in open-source and other community forums. When these communities form and discuss their ideas, cloud providers are often inspired to develop new service offerings, which create new value and establish new markets.

This collaborative innovation is only possible because the cloud platforms, each individually, have established uniform and ubiquitous APIs. These offer a lingua franca, which all developers can use to implement ideas in their own, derivative automation. Documenting and exposing these APIs enables the developer community to innovate on their own, and support new use-cases that the cloud provider has not yet imagined.

When applied to an enterprise private cloud, these APIs (the lingua franca) allow business units to extend a common platform for their own products and business processes. Publishing those extensions to the rest of the enterprise fosters a knowledge-sharing community. This enables new innovations to develop faster, and value to be delivered to customers sooner.

In a future report, we’ll outline the innovative power of open-source, and specific initiatives to foster this collaboration within SDS.

Kubernetes as the Foundation

The Cloud Native Computing Team and the HybridStack initiative are founded on these principles, and Kubernetes is the technological foundation to implement them.

- The Kubernetes community continues to innovate, fostered by several enterprises and many start-ups that rapidly develop open-source extensions and workflow tools.

- The Kubernetes API is built on a declarative model of the desired workload, allowing developers to specify their applications in a common language that is easily extended for new architectures and workflows.

- Kubernetes itself offers significant multi-tenant management capabilities, with certificate-based user-management and SSO integrations, and extensions to provide strong isolation of multi-tenant workloads.

- All major cloud providers have developed a Kubernetes platform, and the Kubernetes architecture is readily extended to integrate private data center infrastructure.
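The desired-state model mentioned in the list above can be illustrated with a toy reconciliation function: the developer describes what should be running, and automation works out the steps to get there. This is a simplified sketch with hypothetical names and does not use the Kubernetes API itself.

```python
def reconcile(desired, actual):
    """Return the actions needed to move the actual state toward the desired one.

    Both arguments map a workload name to its replica count.
    """
    actions = []
    for name, replicas in desired.items():
        running = actual.get(name, 0)
        if running < replicas:
            actions.append(("start", name, replicas - running))
        elif running > replicas:
            actions.append(("stop", name, running - replicas))
    # Anything running that is no longer described should be removed.
    for name, running in actual.items():
        if name not in desired:
            actions.append(("stop", name, running))
    return actions


desired = {"web": 3, "worker": 2}
actual = {"web": 1, "worker": 2, "legacy": 1}
print(reconcile(desired, actual))
# [('start', 'web', 2), ('stop', 'legacy', 1)]
```

The developer only edits `desired`; the difference between the two states, not a hand-written procedure, determines what happens next.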

SDS HybridStack, built on Kubernetes, will soon be the cloud platform of choice for innovating teams within Samsung.

▶ The contents are protected by copyrights laws and the copyrights are owned by the creator and Samsung SDS.

▶ Re-use or reproduction as well as commercial use of the contents without prior consent is strictly prohibited.

Co-authors: Sam Briesemeister, Engineering Manager; David Watson, Sr. Staff Software Engineer; Matthew Farina, Sr. Staff Software Engineer

"Sam" joined the SDS Cloud Native Computing Team in 2017 to focus on driving innovation with software teams, using Kubernetes. He has been working with cloud technology teams to deliver products faster, improve processes and customer experience, for over 10 years. Sam is passionate about building open-source innovation cultures.

"David" started his career at IBM developing cluster filesystems (GPFS), and designing and automating the management of many large High Performance Computing (HPC) systems. He has also worked at Amazon on monitoring, predictive analysis, and visualization of hardware performance and reliability in AWS' worldwide Cloud infrastructure. Now at Samsung, he develops Open Source tools and processes to manage the emergent behavior of Cloud Native applications using Kubernetes.

"Matt" works on the Cloud Native Computing Team at Samsung SDS where he focuses on cloud native applications. He is an author, speaker, and regular contributor to open source. He is a maintainer for multiple open source projects and a leader in the Kubernetes community. Prior to joining Samsung, Matt worked for Hewlett-Packard R&D in the Advanced Technology Group where he was one of the leads for what is now HPE OneSphere. Matt has been developing software for over 25 years.
