Use cloud native technologies - A case of building a failure analysis system based on containers and ML technologies

- 2022-03-31

- Author: Lee Samseop

Cloud native technologies are needed to develop and run scalable cloud-based applications. They include containers, service meshes, microservices, immutable infrastructure, and declarative application programming interfaces (APIs). Because a cloud native architecture is designed to absorb rapid, large-scale changes in environment and code, these technologies are widely used both in business web applications and in the development and deployment of machine learning (ML) models. This article looks at a case in which a semiconductor failure analysis system was automated using cloud native technologies such as containers and APIs, and at the time this saved on environment configuration.

Concerns over machine learning (ML) model development and its application to work

More time is needed to build a new development environment due to the individual implementation of development platforms

Many languages, such as Python and R, and many libraries are available for analyzing data and for building and training ML models. When data scientists start a new development project, they often have to keep their existing individual development environment and build a new one alongside it. This takes time and delays the new project.

Increased burden on data scientists from API and container work on top of ML model development

In addition to their primary tasks of data extraction, analysis, model training, evaluation, and verification, data scientists must containerize ML models and provide them as APIs. This additional work involves trial and error and takes a lot of time.

Difficulties in real-time response including resource expansion for increased analysis data

As the workload grows with larger data volumes and real-time batch processing with ML models, business continuity becomes hard to ensure because resources cannot be scaled out or scaled up quickly.

[Wait!] How can we automate semiconductor failure analysis using AI?

We took three approaches to resolve these issues. As a result, analysis time was reduced from one day to five minutes, development environments that used to take two days to build are now available immediately, and resources are automatically scaled in or out using container technology.

The solution to automation of machine learning (ML) models

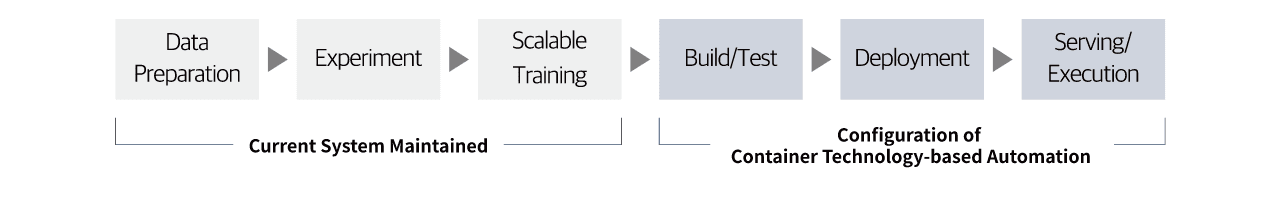

- Data Preparation (Maintain the current system)

- Experiment (Maintain the current system)

- Scalable Training (Maintain the current system)

- Build/Test (Configure automation based on container technologies)

- Deployment (Configure automation based on container technologies)

- Serving/Execution (Configure automation based on container technologies)

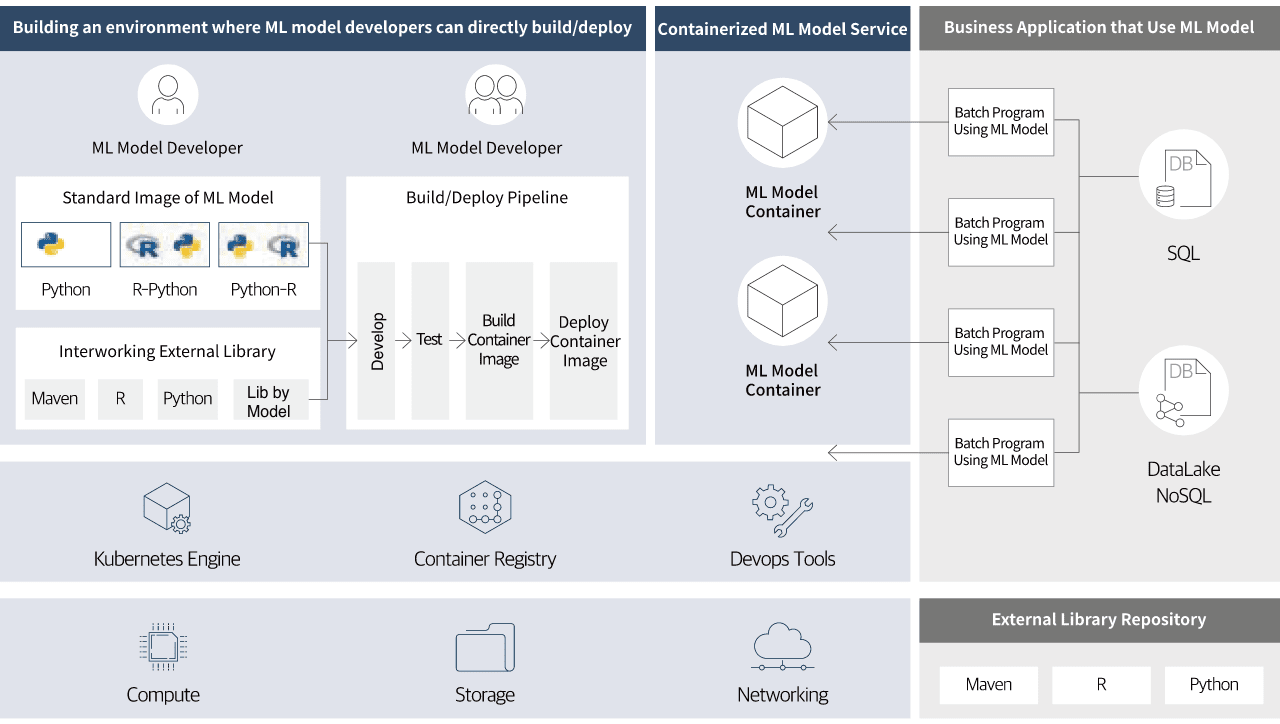

1) Provide standard container images

Container images have been standardized in two steps to facilitate ML model development and ML web services. First, standard container images that bundle popular ML development tools, such as Python, R, and their libraries, are provided so that a development environment is available quickly. Second, Python-based standard images using uWSGI, Nginx, and Flask, and R-based ones using Nginx and Plumber, have been created to automate the deployment of web services. In addition, the images are designed to ensure stability by supporting scaling and auto-healing within a Kubernetes environment.
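To make the web-service side of such an image more concrete, here is a minimal sketch of a model endpoint written as a plain WSGI callable, which is the interface uWSGI serves. It uses only the Python standard library instead of Flask so that it runs anywhere. The `predict` rule, the `/predict` and `/healthz` paths, and the JSON request shape are assumptions made for illustration, not details of the system described in this article.

```python
import json


def predict(features):
    """Placeholder model: swap in a real trained model's predict call."""
    # Hypothetical rule for illustration: flag a sample when the mean exceeds 0.5.
    score = sum(features) / len(features) if features else 0.0
    return {"score": score, "failure": score > 0.5}


def _respond(start_response, status, payload):
    """Serialize payload as JSON and send it with the given HTTP status."""
    body = json.dumps(payload).encode("utf-8")
    start_response(status, [("Content-Type", "application/json"),
                            ("Content-Length", str(len(body)))])
    return [body]


def application(environ, start_response):
    """WSGI entry point that a WSGI server such as uWSGI can import and serve."""
    path = environ.get("PATH_INFO", "")
    method = environ.get("REQUEST_METHOD", "GET")

    # Health endpoint that an orchestrator could poll (assumed path).
    if path == "/healthz" and method == "GET":
        return _respond(start_response, "200 OK", {"status": "ok"})

    # Prediction endpoint: expects a JSON body like {"features": [0.1, 0.9]}.
    if path == "/predict" and method == "POST":
        try:
            length = int(environ.get("CONTENT_LENGTH") or 0)
            request = json.loads(environ["wsgi.input"].read(length) or b"{}")
            features = [float(x) for x in request["features"]]
        except (KeyError, TypeError, ValueError):
            return _respond(start_response, "400 Bad Request",
                            {"error": "expected JSON body with a 'features' list"})
        return _respond(start_response, "200 OK", predict(features))

    return _respond(start_response, "404 Not Found", {"error": "not found"})
```

Because the model sits behind a single `application` callable, the same container image can serve different models by replacing only `predict`, which is roughly the benefit a standard image is meant to provide.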

2) Build an auto-deployment pipeline

Data scientists can use Python and R library repositories easily and safely within a private network with enhanced security. They can also use Jenkins to create container images, configure deployment environments, and deploy ML models to these containers.
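The gating behavior of such a pipeline can be sketched in a few lines: stages run in order, and a failure stops everything after it, so a model that fails its tests is never built into an image or deployed. This is only an illustration in plain Python, not a Jenkins configuration. The `Stage` type, `run_pipeline`, and the placeholder steps are hypothetical names introduced here.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class Stage:
    name: str
    run: Callable[[], bool]  # returns True on success


def run_pipeline(stages: List[Stage]) -> Tuple[bool, List[str]]:
    """Run stages in order and stop at the first failure."""
    completed = []
    for stage in stages:
        if not stage.run():
            return False, completed
        completed.append(stage.name)
    return True, completed


def build_stages(tests_pass: bool) -> List[Stage]:
    """Placeholder steps; a real pipeline would call a test runner,
    an image build, and a deployment tool here (not shown)."""
    return [
        Stage("test", lambda: tests_pass),
        Stage("container image build", lambda: True),
        Stage("container image deployment", lambda: True),
    ]


ok, done = run_pipeline(build_stages(tests_pass=True))
print(ok, done)
```

Calling `run_pipeline(build_stages(tests_pass=False))` returns `False` with an empty list of completed stages, because the build and deployment steps are never reached.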

3) Provide ML models using Kubernetes

Kubernetes for container orchestration, an image registry for storing container images, and DevOps tools for build and deployment have been configured as a container-based platform on VMs (IaaS). The result is a platform (PaaS) environment where developers can provide and manage ML models as services. It allows data scientists to focus on data analysis, ML model development, and training, which leads to stable development and operations.

- ML model developer

- ML model standard image: Python, R-Python, Python-R

- Link external libraries: Maven, R, Python, library of each model

- Build/Deployment pipeline: Development → test → container image builds → container image deployment

- ML Model Container

- SQL → Batch program using ML models → ML model container

- DataLake NoSQL → Batch program using ML models → ML model container

- Kubernetes Engine

- Container Registry

- DevOps Tools

- Compute

- Storage

- Networking

- Maven

- R

- Python

The sections above described how development was automated and how an operational environment was built so that data scientists can directly and easily provide failure analysis ML models as web services. In terms of development, an independent virtual execution environment is provided as a container for each model or data scientist, so that ML models can be easily modified and verified.

In terms of operation, container technology (i.e., Kubernetes container orchestration) is used for automatic scaling in and out, auto-healing, and rolling updates. This secures the availability of the ML model web services.
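As a rough illustration of how these three capabilities are declared in Kubernetes, the sketch below builds a Deployment (rolling update strategy plus a liveness probe for auto-healing) and a HorizontalPodAutoscaler (automatic scaling) as Python dictionaries and prints them as JSON. The name `failure-analysis`, the image reference, the port, the `/healthz` path, and all numeric values are assumptions for illustration, not the configuration used in this case. The `desired_replicas` helper follows the scaling rule given in the Kubernetes documentation and ignores its tolerance and stabilization details.

```python
import json
import math


def deployment(name, image, replicas=2, port=8000):
    """Deployment with a rolling update strategy and a liveness probe."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": labels},
            # Rolling update: start one new pod before removing an old one.
            "strategy": {
                "type": "RollingUpdate",
                "rollingUpdate": {"maxUnavailable": 0, "maxSurge": 1},
            },
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [{
                        "name": name,
                        "image": image,
                        "ports": [{"containerPort": port}],
                        # A CPU request is needed for utilization-based scaling.
                        "resources": {"requests": {"cpu": "250m"}},
                        # Auto-healing: restart the container if the probe fails.
                        "livenessProbe": {
                            "httpGet": {"path": "/healthz", "port": port},
                            "periodSeconds": 10,
                        },
                    }],
                },
            },
        },
    }


def autoscaler(name, min_replicas=2, max_replicas=10, cpu_percent=70):
    """HorizontalPodAutoscaler targeting average CPU utilization."""
    return {
        "apiVersion": "autoscaling/v2",
        "kind": "HorizontalPodAutoscaler",
        "metadata": {"name": name},
        "spec": {
            "scaleTargetRef": {"apiVersion": "apps/v1", "kind": "Deployment", "name": name},
            "minReplicas": min_replicas,
            "maxReplicas": max_replicas,
            "metrics": [{
                "type": "Resource",
                "resource": {
                    "name": "cpu",
                    "target": {"type": "Utilization", "averageUtilization": cpu_percent},
                },
            }],
        },
    }


def desired_replicas(current, current_metric, target_metric, min_replicas=2, max_replicas=10):
    """ceil(current * current_metric / target_metric), clamped to the allowed range."""
    wanted = math.ceil(current * current_metric / target_metric)
    return max(min_replicas, min(max_replicas, wanted))


manifests = [
    deployment("failure-analysis", "registry.example/failure-analysis:1.0"),
    autoscaler("failure-analysis"),
]
print(json.dumps(manifests, indent=2))
```

With these assumed values, four pods running at 90% average CPU against a 60% target would be scaled to `desired_replicas(4, 90, 60)`, that is, six pods.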

Machine learning (ML) platform and AI&MLOps platform services provided by Samsung SDS

Samsung SDS provides the AI&MLOps platform service, a Kubernetes-based open-source ML platform that supports automation of the complex ML workflow: model development, training, tuning, deployment/inference, and management. It lets users easily configure and use an environment. In addition, users can choose an RDB or NoSQL DB for data storage, or an API Gateway service to manage RESTful APIs and control access by IP address, for more effective development and configuration of operational environments.

- Professional, Lee Samseop / Samsung SDS

- Lee Samseop worked as a software architect on business solutions and SI projects before learning cloud-related technologies and becoming a cloud engineer providing technical support for containers and PaaS. He now focuses on Samsung Cloud Platform development.