Over the Coming Wave

- Part 2 Strategies for AI Risk Management

In Part 1, we examined situations in which AI risks arise and found that well-structured frameworks for perceiving and evaluating potential risks help identify those risks effectively and establish mitigation strategies.

Key Examples of AI Risk Assessment Frameworks

Now, we will take a closer look at representative AI risk assessment frameworks, categorized by the type of issuing organization. The following frameworks, the Algorithmic Impact Assessment (AIA), the AI Safety Index, and the Secure AI Framework, are examples from a government, a research institution, and an enterprise, respectively.

(Government) Canada Algorithmic Impact Assessment (AIA)

Canada has established a national AI strategy and is expanding AI adoption at the federal level while making significant investments in AI safety. [1] The federal government has introduced a tool designed to measure potential risks caused by AI systems, termed the “Algorithmic Impact Assessment (AIA) tool.” [2] Users from the public and private sectors can access the AIA tool provided by the federal government. When users input the anticipated risk factors and mitigation measures for an AI system early in its planning stage, the tool generates a final score that lets them estimate the system’s risk level.

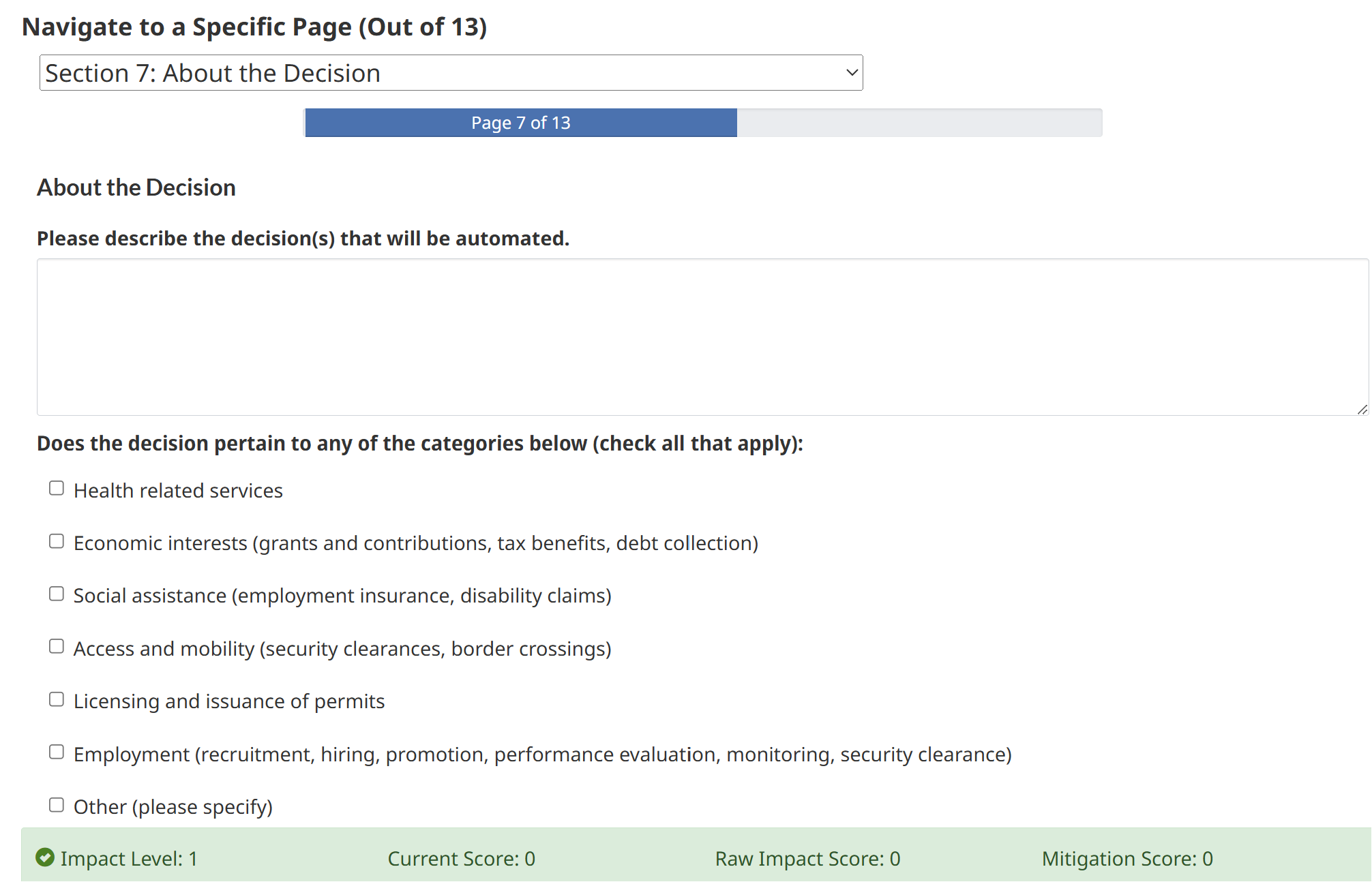

[Figure 1] Example Screenshot of AIA Tool [3]


Figure 1 illustrates the seventh of 13 evaluation categories, titled ‘Decision-Making,’ featured in the simulation interface provided by the federal government. At the top of the screen, users enter a description of the decisions automated by the AI system, while the lower portion allows users to select multiple impact areas affected by those decisions. The AIA requires users to respond to 51 risk assessment items and 34 corresponding mitigation measures. It then calculates the overall assessment score by adding and subtracting points based on their respective positive and negative impacts. The assessment is categorized into five key areas: project, system design, algorithm, decision type, and impact and data.

Using the AIA makes it possible to systematically identify the sources and potential outcomes of risks in the initial planning phase of an AI system and to incorporate them into the development process and subsequent management plans. This process helps minimize unforeseen risks and allows appropriate countermeasures for anticipated threats to be established proactively.

The AIA is a pioneering example in which a government provides guidance to prevent AI-related hazards in both the public and private sectors. However, because the government’s guidelines focus on generality and universality, they may not fully address the unique characteristics and specific risks of individual AI systems or services deployed by private companies. Nonetheless, the AIA undeniably helps AI system providers raise awareness of adverse impacts and promotes systematic risk assessments.
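The add-and-subtract scoring idea described above can be illustrated with a minimal sketch. Everything in it is an assumption made for illustration: the item names, the point values, the maximum score, and the level thresholds are hypothetical and are not taken from the actual AIA questionnaire or its published scoring rules.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Response:
    item: str    # hypothetical questionnaire item
    points: int  # hypothetical weight assigned to the item


def assessment_score(risks, mitigations):
    """Add points for risk items, subtract points for mitigations (floor at 0)."""
    raw = sum(r.points for r in risks)
    credit = sum(m.points for m in mitigations)
    return max(raw - credit, 0)


def risk_level(score, max_score):
    """Map a score to a coarse level using illustrative quartile thresholds."""
    ratio = score / max_score
    if ratio <= 0.25:
        return "low"
    if ratio <= 0.50:
        return "moderate"
    if ratio <= 0.75:
        return "high"
    return "very high"


# Hypothetical answers entered at the planning stage of an AI system.
risks = [
    Response("decision affects access to benefits", 4),
    Response("system processes personal data", 3),
    Response("no human review of outcomes", 3),
]
mitigations = [
    Response("documented appeal process", 2),
    Response("bias testing before launch", 2),
]

score = assessment_score(risks, mitigations)  # 10 risk points - 4 mitigation points
level = risk_level(score, max_score=10)       # max_score is assumed, not official
print(score, level)
```

Keeping risk items and mitigation items as separate lists mirrors the article's description of 51 risk items and 34 mitigation measures, and makes it easy to see how much each mitigation lowers the final score.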

(Research Institution) FLI AI Safety Index

The Future of Life Institute (FLI) is a U.S. nonprofit organization recognized for advocating a six-month pause on the development of AI systems surpassing OpenAI’s GPT-4. The FLI published the AI Safety Index 2024 in December 2024. The FLI AI Safety Index evaluates the AI risk management capabilities of companies developing general-purpose AI models based on 42 metrics across six assessment domains. Each metric aligns with recent policies and academic research and allows companies to be compared with one another on the basis of evidence (see Table 1).

<Table 1> Detailed Metrics of the FLI AI Safety Index [4]

| Risk Assessment | Current Harms | Safety Frameworks | Existential Safety | Governance & Accountability | Transparency & Communication |
|---|---|---|---|---|---|

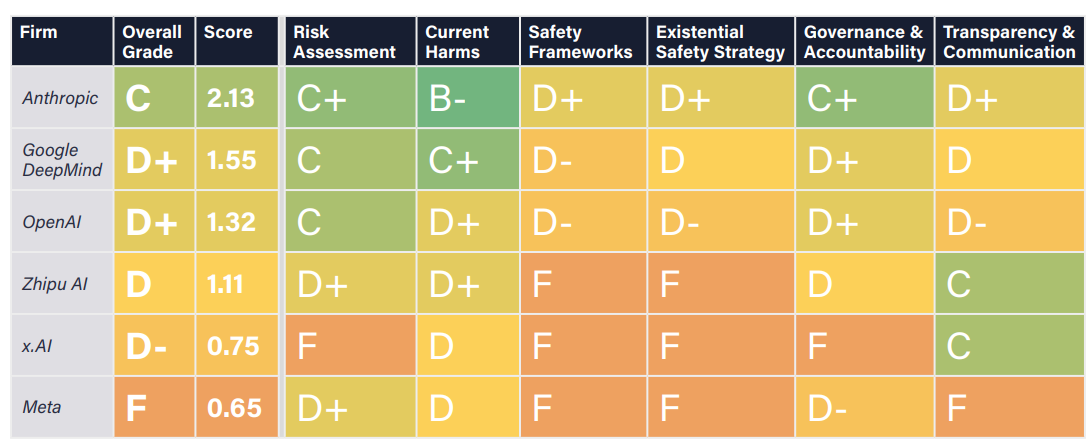

The Future of Life Institute (FLI) convened a panel of seven distinguished AI experts to evaluate six leading AI companies using the AI Safety Index. The assessment results were consolidated into five grade levels—A, B, C, D, and F—across each domain. An “A” grade indicates that a company has established safety measures or adopts a precautionary approach, whereas “D” or “F” grades reflect that a company either mitigates only some risks or demonstrates insufficient risk mitigation effectiveness.

[Figure 2] Example of FLI AI Safety Index assessment [4]


| Firm | Overall Grade | Score | Risk Assessment | Current Harms | Safety Frameworks | Existential Safety Strategy | Governance & Accountability | Transparency & Communication |
|---|---|---|---|---|---|---|---|---|
| Anthropic | C | 2.13 | C+ | B- | D+ | D+ | C+ | D+ |
| Google DeepMind | D+ | 1.55 | C | C+ | D- | D | D+ | D |
| OpenAI | D+ | 1.32 | C | D+ | D- | D- | D+ | D- |
| Zhipu AI | D | 1.11 | D+ | D+ | F | F | D | C |
| x.AI | D- | 0.75 | F | D | F | F | F | C |
| Meta | F | 0.65 | D+ | D | F | F | D- | F |

An analysis of the results presented in Figure 2 reveals that some companies established safety frameworks and conducted risk assessments early in their AI model development processes, while others lacked even basic risk mitigation measures. The AI experts agreed that all six companies require third-party verification for compliance with risk assessment and safety frameworks. [4] In contrast to the Algorithmic Impact Assessment (AIA), which focuses on risk assessment at the individual AI system planning stage, the AI Safety Index evaluates risks by considering broader corporate strategies, organizational structures, leadership, and communication frameworks. However, it has limitations in terms of practical applicability for AI system planners and developers.
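To make the relationship between the numeric scores and the overall letter grades in Figure 2 concrete, here is a small sketch. It assumes that grade boundaries follow the conventional U.S. GPA scale (D- from 0.7, D from 1.0, D+ from 1.3, and so on) and that a score receives the highest grade whose lower bound it reaches. This assumption is not stated in the article; it is simply consistent with the six score and grade pairs shown in Figure 2.

```python
# Lower bounds on a conventional U.S. GPA scale (an assumption, see above).
GRADE_FLOORS = [
    (4.0, "A"), (3.7, "A-"),
    (3.3, "B+"), (3.0, "B"), (2.7, "B-"),
    (2.3, "C+"), (2.0, "C"), (1.7, "C-"),
    (1.3, "D+"), (1.0, "D"), (0.7, "D-"),
]


def to_letter(score: float) -> str:
    """Return the highest letter grade whose lower bound the score reaches."""
    for floor, letter in GRADE_FLOORS:
        if score >= floor:
            return letter
    return "F"


# Overall scores as reported in Figure 2.
overall_scores = {
    "Anthropic": 2.13,
    "Google DeepMind": 1.55,
    "OpenAI": 1.32,
    "Zhipu AI": 1.11,
    "x.AI": 0.75,
    "Meta": 0.65,
}

grades = {firm: to_letter(score) for firm, score in overall_scores.items()}
for firm, grade in grades.items():
    print(f"{firm}: {grade}")
```

Under this assumed scale, a score of 1.55 stays at D+ because it has not reached the 1.7 lower bound for C-, which is why Google DeepMind and OpenAI share an overall grade despite different scores.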

(Enterprise) Google Secure AI Framework (SAIF) Assessment

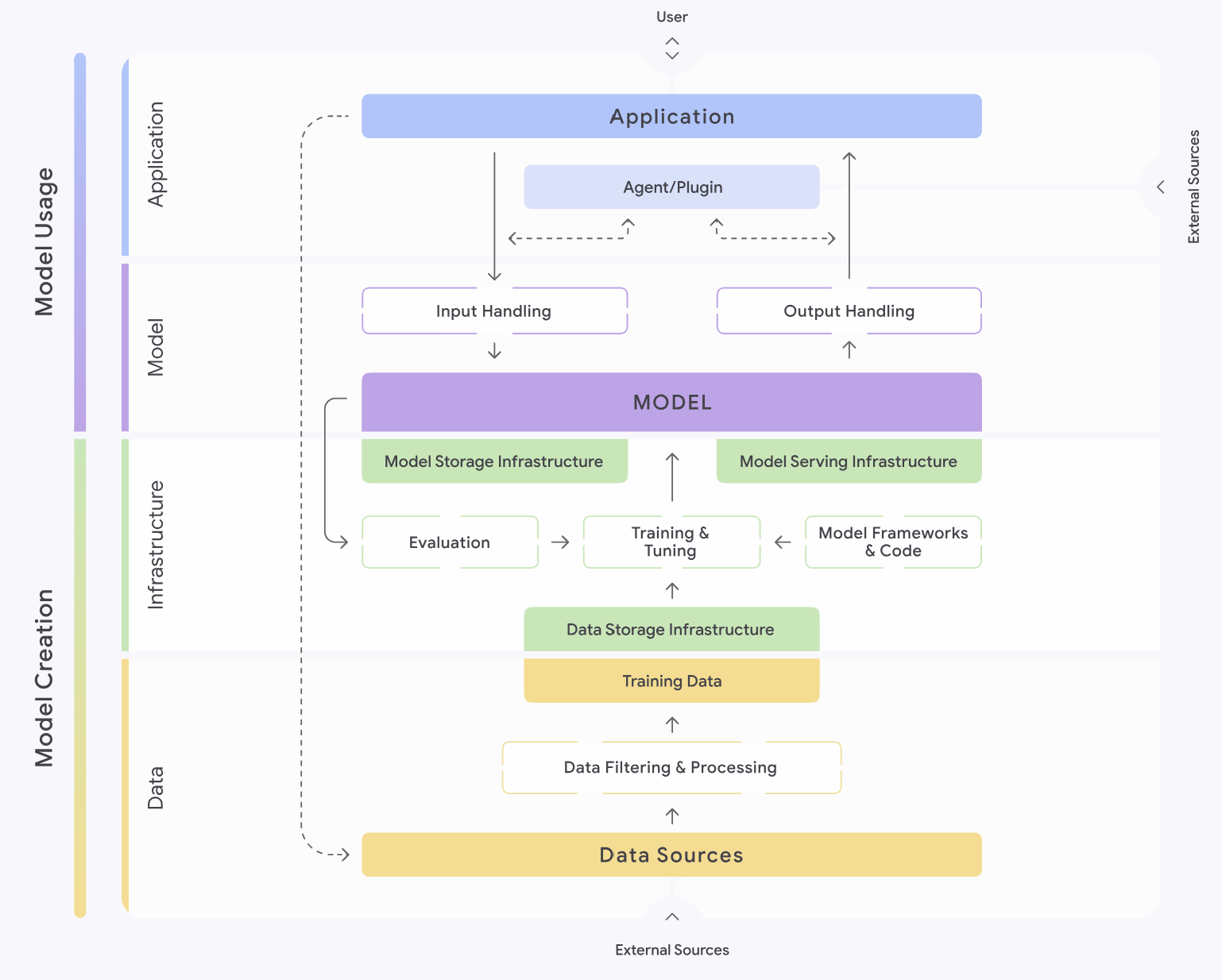

In 2018, Google announced AI principles aligned with its corporate mission and has since shared multiple actionable practices and techniques that support adherence to these principles. In 2023, Google introduced the Secure AI Framework (SAIF), which provides the SAIF map shown in Figure 3. The map allows users to preemptively identify and explore not only security risks but also potential risks across data, infrastructure, models, and applications within AI system development. [5]

[Figure 3] SAIF Map[5]


Model Usage

Application

- User (Outside) ←→ Application

- Application ←→ Agent/Plugin → Input Handling

- Application ← Agent/Plugin ← Output Handling

Model

- Input Handling → MODEL

- Output Handling ← MODEL

Model Creation

Infrastructure

- MODEL + Model Storage Infrastructure

- MODEL + Model Serving Infrastructure

- MODEL → Evaluation → Training & Tuning ← Model Frameworks & Code

- Data Storage Infrastructure → Training & Tuning → MODEL

Data

- Data Storage Infrastructure + Training Data

- Data Sources → Data Filtering & Processing → Training Data

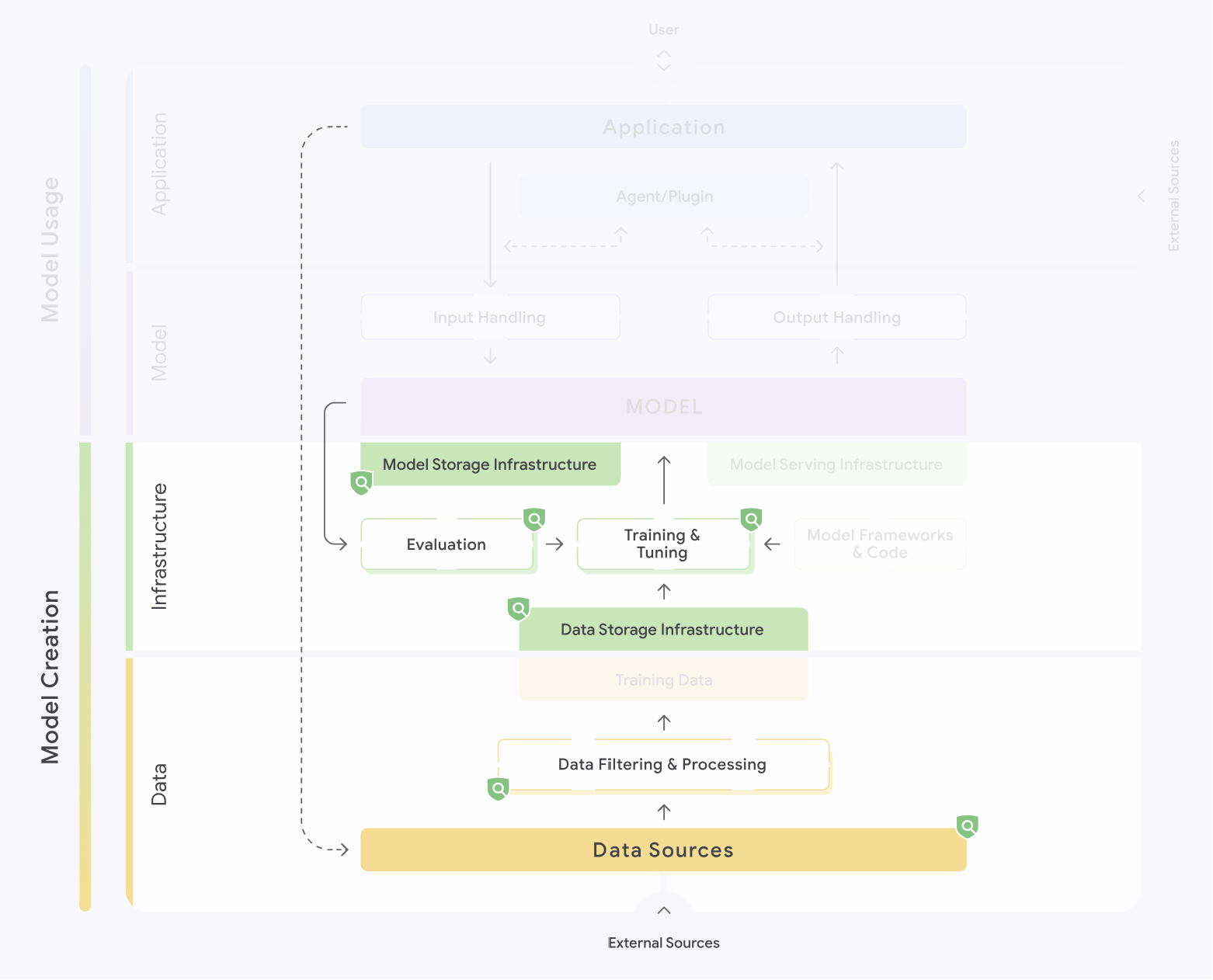

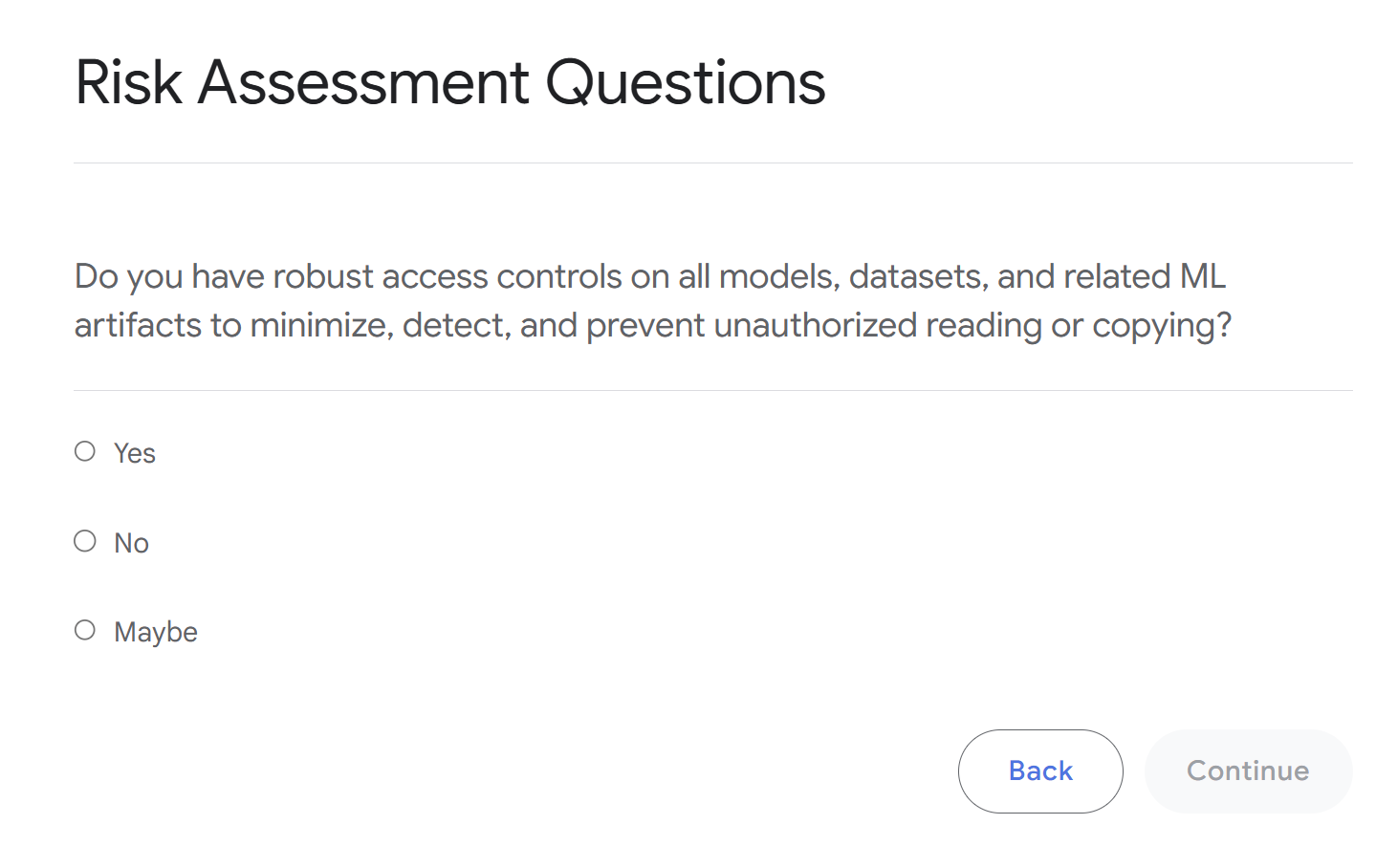

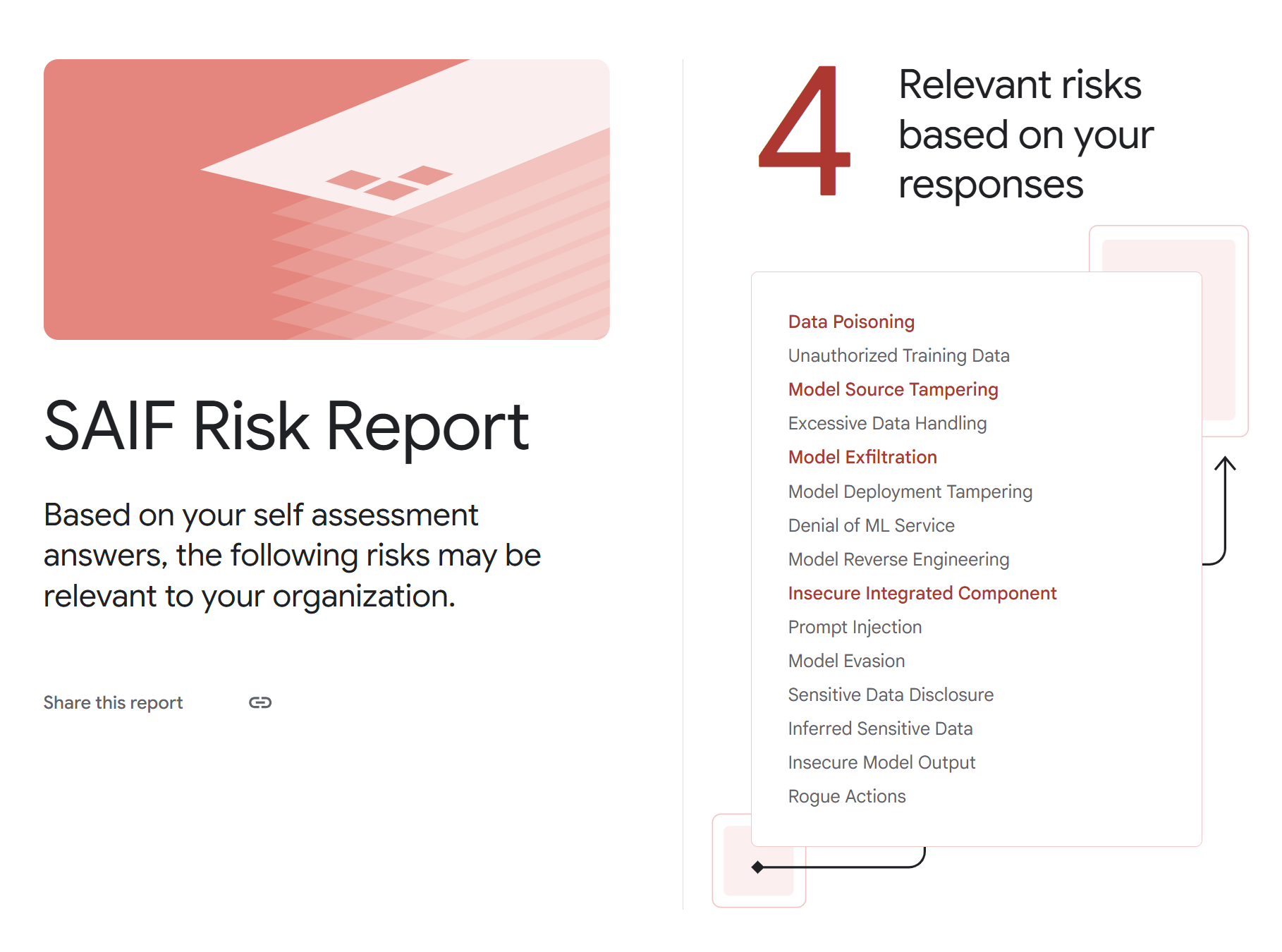

When users select an anticipated risk, the map highlights the areas where risk mitigations can be applied and suggests relevant mitigation strategies, as depicted in Figure 4. Risk measurement is conducted through a 12-item Risk Self-Assessment questionnaire (Figure 5). The responses generate a report (Figure 6) outlining anticipated risks and their corresponding predefined mitigation measures.

[Figure 4] SAIF Map – Mitigation Measures Areas[5]


Model Creation

Infrastructure

- MODEL + Model Storage Infrastructure

- MODEL → Evaluation → Training & Tuning

- Data Storage Infrastructure → Training & Tuning → MODEL

Data

- Data Sources → Data Filtering & Processing → Data Storage Infrastructure

[Figure 5] SAIF Risk Assessment Question [5]


Risk Assessment Questions

Do you have robust access controls on all models, datasets, and related ML artifacts to minimize, detect, and prevent unauthorized reading or copying?

- Yes

- No

- Maybe

[Figure 6] SAIF Risk Report [5]


SAIF Risk Report

Based on your self-assessment answers, the following risks may be relevant to your organization.

4. Relevant risks based on your responses

- Data Poisoning

- Unauthorized Training Data

- Model Source Tampering

- Excessive Data Handling

- Model Exfiltration

- Model Deployment Tampering

- Denial of ML Service

- Model Reverse Engineering

- Insecure Integrated Component

- Prompt Injection

- Model Evasion

- Sensitive Data Disclosure

- Inferred Sensitive Data

- Insecure Model Output

- Rogue Actions
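The flow from questionnaire answers to a risk report can be sketched as a simple lookup. This is an illustrative sketch only: the question identifiers and the question-to-risk mapping below are hypothetical (only the risk names come from Figure 6), and treating “Maybe” the same as “No” is an assumption rather than documented SAIF behavior.

```python
from enum import Enum


class Choice(Enum):
    YES = "yes"
    NO = "no"
    MAYBE = "maybe"


# Hypothetical mapping from self-assessment questions to the risks that
# become relevant when the control is missing or uncertain.
QUESTION_RISKS = {
    "access_controls": ["Model Exfiltration", "Model Source Tampering"],
    "training_data_vetting": ["Data Poisoning", "Unauthorized Training Data"],
    "input_output_validation": ["Prompt Injection", "Insecure Model Output"],
}


def relevant_risks(answers):
    """List risks for every question not answered with a clear 'Yes'."""
    flagged = []
    for question_id, choice in answers.items():
        if choice is Choice.YES:
            continue  # control is in place, nothing to flag
        for risk in QUESTION_RISKS.get(question_id, []):
            if risk not in flagged:  # keep order, avoid duplicates
                flagged.append(risk)
    return flagged


# Hypothetical answers from one organization.
answers = {
    "access_controls": Choice.NO,
    "training_data_vetting": Choice.YES,
    "input_output_validation": Choice.MAYBE,
}

report = relevant_risks(answers)
for risk in report:
    print("-", risk)
```

A table-driven mapping like this keeps the questionnaire and the risk catalog separate, so new questions or risks can be added without changing the reporting logic.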

Evaluation frameworks such as SAIF, which are designed for companies directly developing AI systems, are optimized for the specific ways those companies utilize AI technologies and manage their processes, distinguishing them from frameworks established by governments or research institutions. In addition, because they are more concrete and detailed, they offer the advantage of enabling rapid control and mitigation measures against AI risks. However, even when enterprises utilize in-house evaluation frameworks, continuous alignment with global AI regulations is required, necessitating ongoing updates through communication with external experts.

Risk-Based Differential Management of AI

So far, we have reviewed practical cases utilizing AI risk assessment frameworks to identify risks and derive mitigation measures. Ultimately, the purpose of risk assessment is to enable effective AI risk management, thereby preventing actual harm. As highlighted earlier, accurately anticipating potential risks and their severity based on assessment results facilitates the development of appropriate mitigation strategies and subsequent management plans. The following sections discuss how the EU and South Korea implement measures based on different levels of AI risk.

The EU’s Approach to AI Risk

As discussed in Part 1, the EU classifies AI risk levels through its regulatory law, the AI Act, and sets differentiated compliance requirements for operators based on these levels. [6] This approach ensures that AI systems with lower risk profiles are not subject to excessive regulation that could hinder innovation, and it serves as a precedent for other countries adopting similar strategies. [7] Table 2 summarizes compliance obligations by risk level. AI systems that undermine human dignity or fundamental EU values are prohibited outright. High-risk AI systems are required to obtain CE marking prior to market release. For AI systems with limited risk, transparency obligations are imposed to maintain flexibility.

<Table 2> Compliance Requirements by Risk Level under the EU AI Act [6]

| Risk Type | Targeted Systems and Operators | Compliance Requirements |
|---|---|---|
| Unacceptable Risk | AI systems violating EU fundamental values such as human dignity, freedom, equality, non-discrimination, democracy, and rule of law | Prohibited outright |
| High-Risk | AI systems used in biometrics, critical infrastructure, education, essential services, law enforcement, migration, and judiciary | CE marking required prior to market release |
| AI System with Limited Risk | AI systems interacting with humans that may cause issues like dehumanization, deception, or manipulation, such as deepfake technology | Transparency obligations |
| General-Purpose AI Model | AI models trained on large-scale data using self-supervision, capable of performing a variety of tasks and integration into diverse systems or applications | |

The EU AI Act requires global companies offering AI-powered products or services to proactively assess the risk level of the products or services. They are required to establish internal governance standards that comply with, or exceed, the requirements set forth in the EU AI Act. Furthermore, objective evaluation of AI risk levels is paramount, highlighting the need to designate third-party assessors or assessment bodies to conduct reasonable and regulatory-compliant assessments.
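As a rough illustration of how a company might encode this risk-based approach in an internal governance check, the sketch below maps a system to a tier and looks up the obligation summarized in this article. It is a simplified triage aid under stated assumptions, not a legal classification: the function names, the boolean inputs, and the domain labels are hypothetical, and the obligations are limited to the three that the article describes.

```python
from enum import Enum
from typing import Optional


class RiskTier(Enum):
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"


# Obligations as summarized in this article (not the full legal text).
OBLIGATIONS = {
    RiskTier.UNACCEPTABLE: "prohibited",
    RiskTier.HIGH: "CE marking required prior to market release",
    RiskTier.LIMITED: "transparency obligations",
}

# Domain labels paraphrased from Table 2; the exact strings are hypothetical.
HIGH_RISK_DOMAINS = {
    "biometrics", "critical infrastructure", "education",
    "essential services", "law enforcement", "migration", "judiciary",
}


def triage(domain: str, violates_fundamental_values: bool,
           interacts_with_humans: bool) -> Optional[RiskTier]:
    """Return a provisional tier, or None if none of the three tiers applies."""
    if violates_fundamental_values:
        return RiskTier.UNACCEPTABLE
    if domain in HIGH_RISK_DOMAINS:
        return RiskTier.HIGH
    if interacts_with_humans:
        return RiskTier.LIMITED
    return None


def obligation_for(tier: RiskTier) -> str:
    return OBLIGATIONS[tier]


# Hypothetical example: an AI tutoring product that talks to students.
tier = triage("education", violates_fundamental_values=False,
              interacts_with_humans=True)
print(tier.value, "->", obligation_for(tier))
```

The checks run from the strictest tier to the most lenient, so a system that falls under several descriptions is always assigned the more demanding obligation. A provisional result like this would still need review by a qualified assessor.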

Trends in Korea’s Risk-Based AI Management

South Korea's Basic Act on AI resembles the EU AI Act in that it classifies AI by risk level. However, its current definition of "high-impact" AI is somewhat ambiguous compared to the EU's "high-risk" classification, which has led some to argue that it may be harder to enforce than the EU AI Act. [8] Some obligations are nevertheless stated explicitly. High-impact AI and generative AI operators must ensure transparency and safety regarding AI usage. Additionally, such operators are required to establish and implement risk management measures and user protection measures, and these must be directly managed and supervised by humans.

Prior to the Basic Act's establishment, various government agencies published guidelines and manuals encouraging AI operators to self-govern. For instance, the Financial Services Commission issued an AI development and utilization guide emphasizing strict regulation of financial services, because they are highly likely to have a significant direct impact on consumers' property rights. Financial institutions using AI for high-risk services, those that may significantly affect individual rights, safety, or freedoms, must implement appropriate internal controls and approval procedures and designate approval authorities. [9] The rise of generative AI adoption has raised concerns about adverse effects comparable to those of high-risk AI. Government agencies and research institutions are actively developing new guidelines and manuals to address these issues. Companies can leverage these resources to update ethical standards, enhance safety measures, and reassess risk management so that they can act proactively against newly emerging risks.

Recommendations for Safe AI Business

Amid growing interest among domestic companies in securing AI safety and reliability, a growing number of them are establishing corporate-level AI governance frameworks. Financial institutions in particular, which leverage high-quality data to deliver diverse AI services that enhance consumer benefits, are advancing AI governance more proactively than other industries. Since the enactment of the Basic Act on AI, all organizations providing or utilizing AI services, not just financial firms, have been required to ensure the transparency and safety of AI systems. By establishing a risk framework tailored to its specific business domain, an organization can better perceive AI risks and implement effective management strategies aligned with the assessed risk levels.

Over the past several years, Samsung SDS has provided AI governance consulting, assisting organizations in establishing ethical frameworks, designing AI governance operational processes, defining roles and responsibilities (R&R) across teams, developing comprehensive risk management frameworks, and providing practitioners with tools and verification metrics. Recently, it has also developed various safety techniques necessary for using generative AI and implemented them on platforms for service provision. Businesses offering AI products and services can refer to these practices to build AI governance systems suited to their specific operational characteristics, and thereby develop risk management frameworks and mitigation measures. Moreover, engaging experienced consulting firms like Samsung SDS can help establish AI risk management strategies more rapidly and securely.

German sociologist Ulrich Beck, in his work “Risk Society,” explained that risks in the era of modernization differ from traditional natural disasters. The successful process of modernization has brought about profound political, economic, social, and technological transformation. Consequently, these extensive changes have given rise to new forms of risk. [10] Beck interpreted rapidly advancing science and technology as simultaneously constituting both problems and solutions. In other words, scientific and technological progress may unintentionally cause side effects, yet these same technologies can be harnessed to overcome those adverse effects in different contexts. AI technology will not be an exception. Preventing negative incidents related to AI is as crucial as advancing the technology itself and applying it appropriately.

The perception and assessment of AI risks, as discussed in Part 1 and Part 2, together with the management strategies aligned with them, ultimately serve as essential guidelines for both AI providers and users to ensure the safe utilization of AI technologies. By fostering greater transparency in AI systems, which can operate as a “black box,” we should minimize potential hazards while optimizing AI’s role in assisting humanity. Ultimately, AI risk management represents a milestone marking the coexistence of technology and humanity, standing at the crossroads of both nurturing and regulation.

References

[1] B. Attard-Frost et al. (2024). “The governance of artificial intelligence in Canada: Findings and opportunities from a review of 84 AI governance initiatives”

[2] Government of Canada (2024). Algorithmic Impact Assessment (AIA) tool

[3] https://open.canada.ca/aia-eia-js/?lang=en

[4] Future of Life Institute (2024). FLI AI Safety Index

[5] https://saif.google/secure-ai-framework

[6] European Parliament (2024). EU Artificial Intelligence Act

[7] Software Policy & Research Institute (2024). Key Contents and Implications of the EU Artificial Intelligence Act (EU AI Act) (in Korean)

[8] Bae, Kim & Lee LLC (2025). Significance of the Passage of the Basic Act on AI and Its Impact on Industry (in Korean)

[9] Financial Services Commission (2022). AI Development and Utilization Guide for the Financial Sector (in Korean)

[10] Ulrich Beck (1986). Risk Society

▶ This content is protected by the Copyright Act and is owned by the author or creator.

▶ Secondary processing and commercial use of the content without the author/creator’s permission is prohibited.

Financial Consulting Team at Samsung SDS
