Over the Coming Wave

- Part 1 AI Risk Perception and Assessment Framework

“Article 33 (Confirmation of High-Impact AI) AI business operators providing AI-based products or services must review in advance whether the AI in question qualifies as high-impact AI. If necessary, they may request confirmation from the Minister of Science and ICT as to whether the AI qualifies as high-impact.”

“Article 34 (High-Impact AI Business Operators' Responsibilities) AI business operators providing high-impact AI or AI-based products and services must implement the following measures as prescribed by presidential decree to ensure the safety and reliability of the high-impact AI.”

- Basic Act on the Development of Artificial Intelligence and the Establishment of Foundation for Trustworthiness

In December 2024, the Basic Act on the Development of Artificial Intelligence and the Establishment of Foundation for Trustworthiness (“AI Basic Act”) was passed at the plenary session of the National Assembly. This has been interpreted as placing emphasis on the advancement of the artificial intelligence industry, while also providing minimum measures to safeguard users. [1]

DeepMind co-founder Mustafa Suleyman compared the advancement of AI technology to a kind of historical "wave." He pointed out that AI has given humanity new power but can also cause unprecedented disruption and instability. Suleyman also noted that while we increasingly rely on AI technologies, we simultaneously face growing threats. It is now time to consider how we should confront the unstoppable coming wave. The following incidents show how these threats appear in everyday life.

Example from the AI Business Perspective

DeepSeek astonished the world with its release in January 2025, much as ChatGPT had before it, but suspended its service in Korea on February 15, less than a month after launch. With this, Korea became the fifth country in the world to restrict the use of DeepSeek, following Italy, Taiwan, Australia, and the United States. The Personal Information Protection Commission (PIPC) sent DeepSeek, which is suspected of excessively collecting users’ personal information, an official inquiry regarding its collection and processing of personal data, and recommended improvements in accordance with the Personal Information Protection Act. DeepSeek admitted that it had paid insufficient attention to offshore regulations. [2]

Example from the AI User Perspective

Privacy is not the only potential risk that can arise from AI. Recently, Morgan & Morgan, the largest personal injury law firm in the United States, faced controversy after citing fabricated court rulings generated by ChatGPT in a filing. Eight of the nine precedents cited by its lawyers turned out to be fabricated and could not be found in legal databases. [3] The use of generative AI without proper verification raised doubts about the reliability of both the law firm and AI. The incident recurred even after a similar case, involving passengers on an Avianca Airlines flight, became a major issue in New York State in 2023. This demonstrates that the risks of using AI in the highly specialized field of law do not easily disappear.

Given the repeated harm caused by AI, laws and policies that encourage businesses to voluntarily ensure the safety and reliability of their AI systems can be understood as establishing minimum safeguards for users without hindering the development and use of the technology.

This article shifts the focus from advancing and applying AI technologies to examining the risks associated with AI, impacts on businesses leveraging AI, and preparations they should make.

What AI Risks Should We Focus On?

AI risk is a function of the probability and the severity of potential harm caused by systems utilizing AI technology. AI systems can have positive or negative impacts, and the outcomes can present opportunities or threats to individuals and society. [4] When the impacts are negative and pose a threat, we generally consider them high risk.
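The definition above can be made concrete with a small sketch. The multiplicative form, the 1 to 5 rating scales, and the level thresholds are illustrative assumptions, not part of the definition cited in [4]; they show only one common way a probability-severity function is put into practice.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Harm:
    """A potential harm with hypothetical 1-5 ordinal ratings."""
    name: str
    probability: int  # 1 (rare) .. 5 (almost certain)
    severity: int     # 1 (negligible) .. 5 (critical)


def risk_score(harm: Harm) -> int:
    """Combine probability and severity; the product is an assumed form."""
    for value in (harm.probability, harm.severity):
        if not 1 <= value <= 5:
            raise ValueError("ratings must be between 1 and 5")
    return harm.probability * harm.severity


def risk_level(score: int) -> str:
    """Map a score (1..25) to a label using illustrative thresholds."""
    if score >= 15:
        return "high"
    if score >= 6:
        return "medium"
    return "low"


if __name__ == "__main__":
    harms = [
        Harm("fabricated legal citation", probability=3, severity=5),
        Harm("minor formatting error", probability=4, severity=1),
    ]
    for harm in harms:
        score = risk_score(harm)
        print(f"{harm.name}: score={score}, level={risk_level(score)}")
```

In practice the scales and thresholds would be set by each organization's own risk appetite; the point is only that a stated function makes the assessment repeatable.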

AI risks require a different approach from those of other information technologies. AI systems are designed to operate by mimicking human decision-making, and at times their behavior can be difficult to distinguish from that of humans. As a result, ethical issues arise that previously applied only to humans. Technically, there is a black-box problem because AI algorithms are not as transparently observable as the code or components of traditional information technology. These characteristics can lead to negative effects on our daily lives and, in some cases, serious harm to the environment, energy, and society.

Both the EU AI Act and the Colorado AI Act, which were drafted before Korea’s AI Basic Act, categorize AI that poses a significant risk of harm as “High-Risk” AI. Korea’s AI Basic Act, however, uses the less familiar term “High-Impact” AI. “High-impact AI” is a comprehensive and neutral term that covers not only immediate risks but also potential future impacts, encouraging AI business operators to responsibly manage both negative impacts, such as high risks, and positive effects with significant social impact. [6] Furthermore, the AI Basic Act specifies obligations for business operators of high-impact AI and generative AI. Even if enforcement is weak, operators of high-impact AI are required to conduct a prior review and implement appropriate safety measures. Accordingly, AI risk assessment, which evaluates potential risk levels, has become a crucial task.

AI Risk Assessment Approaches

Can the AI risk function actually be derived? If the risk level is determined through assessment, what actions can businesses take? To answer these questions, we explore various approaches to risk perception, risk assessment frameworks, and management strategies.

AI Risk Perception

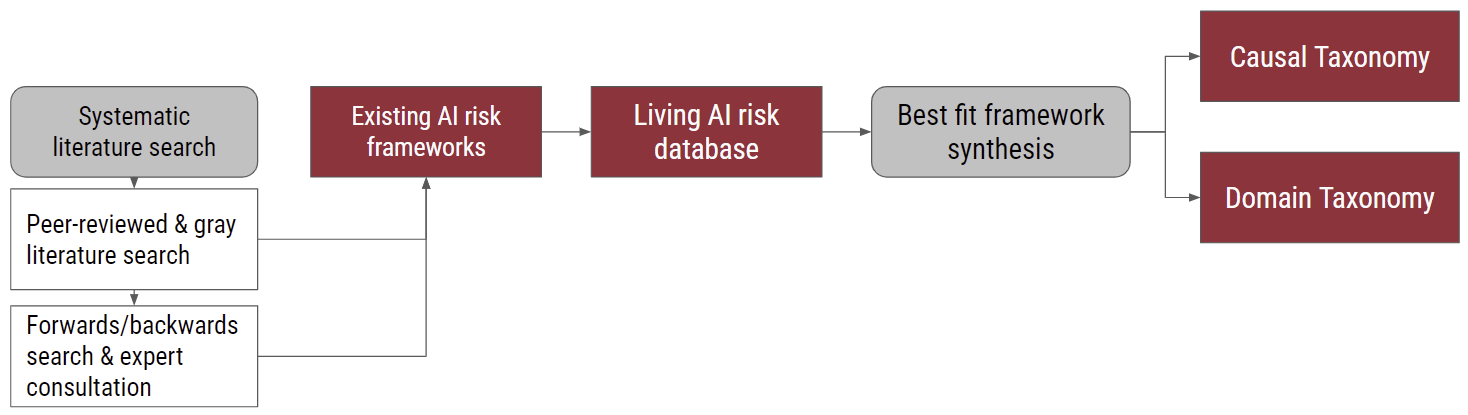

We have discussed the need for a preliminary review to determine whether an AI business falls under the “High-Impact” or “High-Risk” category, as well as the need to prepare appropriate safety measures. So how are such risks identified? One study systematically analyzed prior research and expert opinions on methods for measuring risk and concluded that there are two perspectives on risk perception. [7] One focuses on the causes and origins of a risk, while the other centers on the consequences or damage it causes (see Figure 1).

[Figure 1] Method for Perceiving AI Risks [7]

(Figure elements: systematic literature search covering peer-reviewed and gray literature, forwards/backwards search, and expert consultation; existing AI risk frameworks; best-fit framework synthesis; living AI risk database; Causal Taxonomy; Domain Taxonomy.)

When focusing on causes, AI risks can be broken down as follows.

- What? (Entity): Whether the risk is caused by humans, AI, or other entities

- Why? (Intent): Whether the risk occurs intentionally or unintentionally

- When? (Timing): Whether the risk occurs before or after deployment and operation
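The three questions above can be sketched as a small data structure for labeling risks. The class names, the `count_by` helper, and the three example risks are hypothetical illustrations and are not taken from the study cited in [7].

```python
from collections import Counter
from dataclasses import dataclass
from enum import Enum


class Entity(Enum):
    HUMAN = "human"
    AI = "ai"
    OTHER = "other"


class Intent(Enum):
    INTENTIONAL = "intentional"
    UNINTENTIONAL = "unintentional"


class Timing(Enum):
    PRE_DEPLOYMENT = "pre-deployment"
    POST_DEPLOYMENT = "post-deployment"


@dataclass(frozen=True)
class ClassifiedRisk:
    """One risk labeled along the three causal dimensions."""
    description: str
    entity: Entity
    intent: Intent
    timing: Timing


def count_by(risks, attribute):
    """Count risks per value of one dimension ('entity', 'intent', 'timing')."""
    return Counter(getattr(risk, attribute) for risk in risks)


# Hypothetical example risks, labeled for illustration only.
EXAMPLES = [
    ClassifiedRisk("developers train on unrepresentative data",
                   Entity.HUMAN, Intent.UNINTENTIONAL, Timing.PRE_DEPLOYMENT),
    ClassifiedRisk("model outputs fabricated citations",
                   Entity.AI, Intent.UNINTENTIONAL, Timing.POST_DEPLOYMENT),
    ClassifiedRisk("user prompts model to write phishing emails",
                   Entity.HUMAN, Intent.INTENTIONAL, Timing.POST_DEPLOYMENT),
]

if __name__ == "__main__":
    for dimension in ("entity", "intent", "timing"):
        print(dimension, dict(count_by(EXAMPLES, dimension)))
```

Counting along each dimension shows where risks concentrate, for example whether most arise after deployment, which is the kind of structural diagnosis the causal view is meant to support.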

By structurally analyzing how risks occur, we can diagnose their causes more accurately and implement feasible improvements in the future. [7]

The other approach categorizes risks by domain: discrimination, privacy and security, misinformation, malicious actors and misuse, human-computer interaction, socio-economic and environmental harm, and AI system safety, failures, and limitations. [7] Perceiving risks this way enables a quick assessment of the specific harm caused to individuals, society, or companies and of the scale of that harm. It also makes it easier to establish proactive measures to prevent such harm. Organizations using AI can use risk perception frameworks as a foundation for comprehensively assessing their risk exposure and management, which in turn helps determine actions for risk mitigation. [7]
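A domain-based view lends itself to a simple risk register. The sketch below groups entries by domain and reports which domains have no entry yet; the seven-way grouping follows the repository cited in [7], while the function names and register entries are hypothetical.

```python
from collections import defaultdict
from enum import Enum


class Domain(Enum):
    DISCRIMINATION = "discrimination"
    PRIVACY_SECURITY = "privacy and security"
    MISINFORMATION = "misinformation"
    MALICIOUS_USE = "malicious actors and misuse"
    HUMAN_COMPUTER_INTERACTION = "human-computer interaction"
    SOCIOECONOMIC_ENVIRONMENTAL = "socio-economic and environmental harm"
    SYSTEM_SAFETY = "AI system safety, failures, and limitations"


def group_by_domain(register):
    """Group (description, domain) pairs into {domain: [descriptions]}."""
    grouped = defaultdict(list)
    for description, domain in register:
        grouped[domain].append(description)
    return dict(grouped)


def unreviewed_domains(grouped):
    """Return domains with no recorded risk, i.e. candidates for review."""
    return [domain for domain in Domain if domain not in grouped]


# Hypothetical register entries, for illustration only.
REGISTER = [
    ("chatbot cites non-existent precedents", Domain.MISINFORMATION),
    ("app collects more personal data than needed", Domain.PRIVACY_SECURITY),
    ("summary tool invents quotations", Domain.MISINFORMATION),
]

if __name__ == "__main__":
    grouped = group_by_domain(REGISTER)
    for domain, items in grouped.items():
        print(f"{domain.value}: {len(items)} risk(s)")
    print("not yet reviewed:", [d.value for d in unreviewed_domains(grouped)])
```

An empty domain does not mean the risk is absent; it only flags an area the organization has not yet examined.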

AI Risk Assessment Frameworks

Discussion continues on frameworks for the shared perception and assessment of AI risks. In particular, governments, NGOs, and academia in major countries across North America, Europe, and Asia have released AI risk management frameworks (see Table 1). A common element among these frameworks is that AI is considered autonomous and adaptable, similar to humans, so responsibility is placed on the people who create AI: AI systems should be developed and used for the benefit of humans, society, and the environment. This is referred to as the Responsible AI (RAI) principle. [8]

<Table 1> AI Risk Assessment Frameworks [8]

| Framework | Country/Region | Year | Institution |
|---|---|---|---|
| Algorithmic Impact Assessment tool (AIA) | Canada | 2019 | Government of Canada |
| Secure AI Framework (SAIF) | U.S.A. | 2023 | Google |
| MAS Veritas Framework | Singapore | 2019 | Monetary Authority of Singapore |
| FLI AI Safety Index | U.S.A. | 2024 | Future of Life Institute |
| Fundamental rights and algorithm impact assessment (FRAIA) | The Netherlands | 2022 | Ministry of the Interior and Kingdom Relations (BZK) |
| NSW artificial intelligence assurance framework | Australia | 2022 | NSW Government |
| Model rules on impact assessment of algorithmic decision-making systems used by public administration | EU | 2022 | European Law Institute (ELI) |
| Artificial intelligence for children toolkit | International | 2022 | World Economic Forum (WEF) |
| Responsible AI impact assessment template | U.S.A. | 2022 | Microsoft |
| Algorithmic impact assessments (AIAs) in healthcare | UK | 2022 | Ada Lovelace Institute |
| AI Risk Management Framework (AI RMF) | U.S.A. | 2022 | National Institute of Standards and Technology (NIST) |

AI risk assessment frameworks help identify risks and formulate appropriate mitigation measures, but they have limitations. In striving for general applicability, they often fail to cover industry- or application-specific issues, which limits their scope. [8] Some frameworks, such as Concrete and Connected AI Risk Assessment (C2AIRA), suggest mitigation strategies by risk type, [8] but most leave the responsibility for specific measures to users.

Despite such limitations, the frameworks can serve as useful and complementary tools if their pros and cons are understood. In addition, users and companies can gain well-structured reference models for risk assessment and management through the frameworks. Here are some cases.

Singapore MAS Veritas Framework

In Singapore, the Monetary Authority of Singapore (MAS) launched a consortium called Veritas, involving financial institutions, consulting firms, and big tech companies. Through a three-phase project, Veritas published 14 principles on FEAT (Fairness, Ethics, Accountability, Transparency), which are essential to AI and data analytics, along with evaluation methods, toolkits, and application cases. [9]

In the Veritas framework, the first stage of risk management assesses an AI system on complexity, materiality, and impact and assigns it a risk tier, which serves as its risk level. The FEAT measurement and management methodologies can then be customized according to this tier. The official documents provide checklists for measuring each FEAT principle and action plans for identified risks.
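The tiering step can be illustrated with a short sketch. The three-level rating scale, the "highest rating wins" rule, and the review depths below are assumptions made for illustration; the Veritas documents define their own criteria, which are not reproduced here.

```python
# Hypothetical ordinal scale for each of the three dimensions.
RATING = {"low": 1, "medium": 2, "high": 3}
TIER_BY_SCORE = {1: "low", 2: "medium", 3: "high"}

# Hypothetical mapping from risk tier to the depth of FEAT review.
REVIEW_DEPTH = {
    "low": "self-assessment against the FEAT checklist",
    "medium": "FEAT checklist plus documented action plan",
    "high": "FEAT checklist, action plan, and independent review",
}


def risk_tier(complexity: str, materiality: str, impact: str) -> str:
    """Assign a tier from three ratings; the highest rating decides (assumed rule)."""
    ratings = (complexity, materiality, impact)
    for rating in ratings:
        if rating not in RATING:
            raise ValueError(f"unknown rating: {rating!r}")
    return TIER_BY_SCORE[max(RATING[rating] for rating in ratings)]


if __name__ == "__main__":
    tier = risk_tier(complexity="medium", materiality="high", impact="low")
    print(f"tier={tier}; review={REVIEW_DEPTH[tier]}")
```

Taking the maximum is a conservative choice: a system that is simple but highly material still receives the deepest review. A weighted average would be an alternative if an organization wanted dimensions to offset each other.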

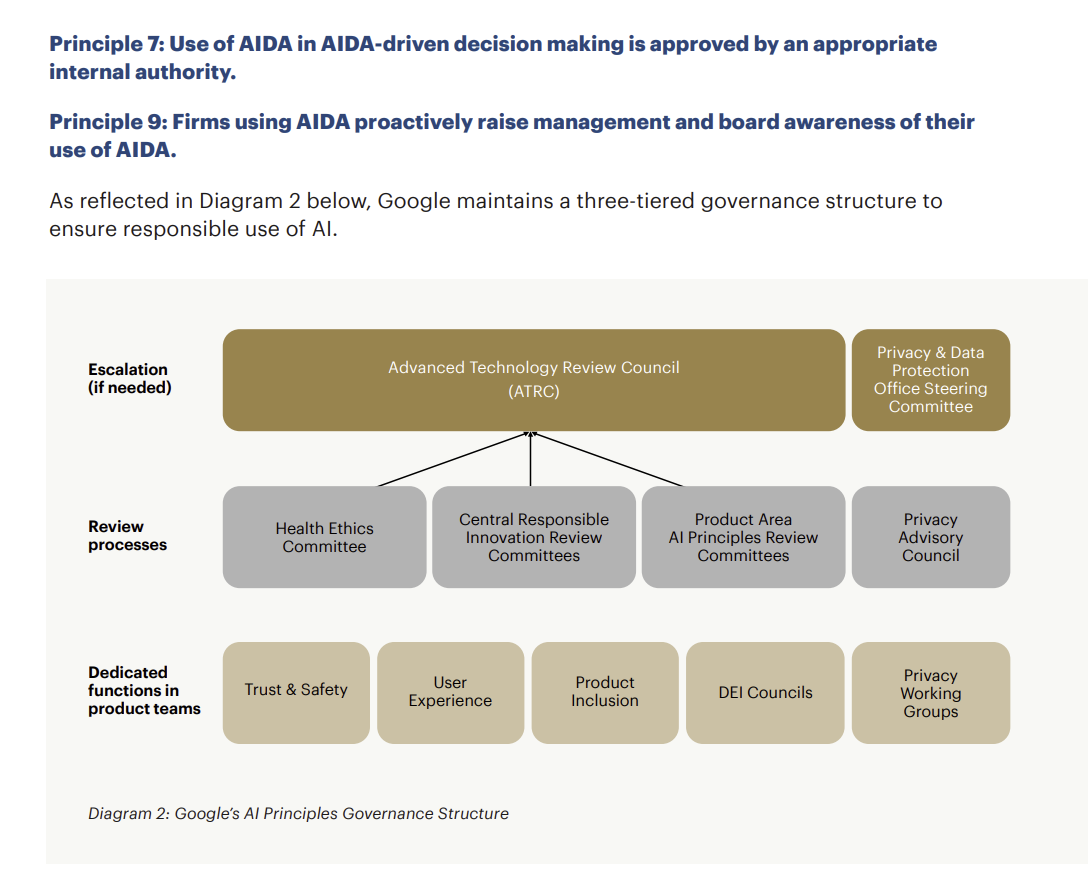

The FEAT methodology was applied to Google Pay’s fraud detection case in India. [10] As shown in Figure 2, Google’s three-tier AI governance structure is mapped to Principle 7 (“Use of AIDA (Artificial Intelligence and Data Analytics) in AIDA-driven decision-making is approved by an appropriate internal authority”) and Principle 9 (“Firms using AIDA proactively raise management and Board awareness of their use of AIDA”).

[Figure 2] Google Pay’s Three-Tier AI Governance Structure and FEAT Principles [10]

Diagram 2: Google's AI Principles Governance Structure

- Escalation (if needed): Advanced Technology Review Council (ATRC)

- Review processes: Health Ethics Committee, Central Responsible Innovation Review Committees, Product Area AI Principles Review Committees, Privacy Advisory Council, Privacy & Data Protection Office Steering Committee

- Dedicated functions in product teams: Trust & Safety, User Experience, Product Inclusion, DEI Councils, Privacy Working Groups

The bottom of Figure 2 shows the dedicated functions within Google’s product teams, which are responsible for user experience, privacy, and trust and safety. Above them, dedicated committees run review processes. This structure provides evidence that Principles 7 and 9 are met. The significance of the Veritas Framework lies in providing financial institutions with verification methods for AI and data analytics solutions that are driven by both the government and the private sector. Furthermore, by sharing real-world cases, it encourages wider adoption of fairness assessment methodologies.

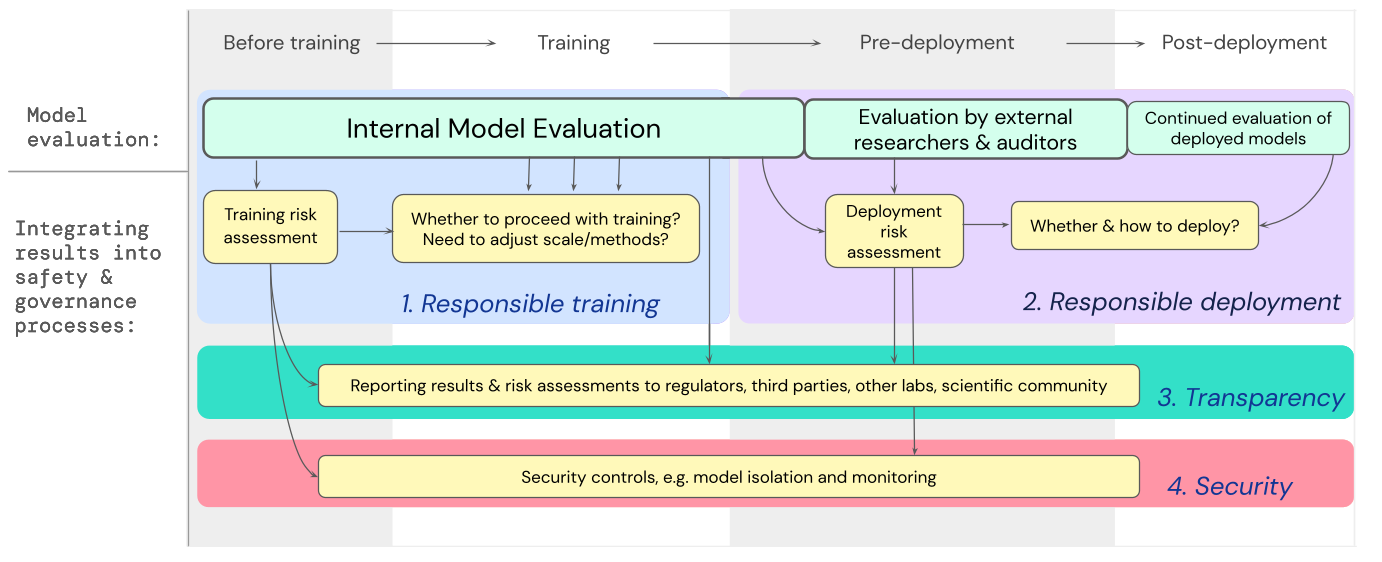

Google AI Safety & Governance Process

Google’s AI Safety & Governance Process includes assessment methods for “extremely” risky models such as generative models. [11] Generative models have the characteristics of general-purpose models: they create images, text, and sound, are not limited to a specific service, and can be applied across diverse industries and services. The potential damage from general-purpose models can therefore be enormous, and a misused or misaligned general-purpose model can become an extremely risky model. [11] Accordingly, various attempts have been made to evaluate such risks. For example, the Alignment Research Center, founded by a former OpenAI researcher, has been developing a method to measure the self-replication capability of language models. [12] In addition, the GPT-4 red team tested GPT-4’s cybersecurity capabilities. [13]

As shown in Figure 3, Google’s process for assessing extremely risky models is divided into three broad steps. Its distinguishing feature is that independent external verification teams repeatedly check reliability from the early stages of development. Internal developers, who are most familiar with the model’s context, perform the internal model evaluation while reporting their plans to independent external monitoring teams from the training-plan stage onward to ensure transparency. [14]

[Figure 3] Google AI Safety & Governance Process [14]

| Activity | Before training | Training | Pre-deployment | Post-deployment |
|---|---|---|---|---|
| Model evaluation | | Internal model evaluation | Evaluation by external researchers & auditors | Continued evaluation of deployed models |
| Integrating results into safety & governance processes | Training risk assessment: whether to proceed with training? Need to adjust scale/method? | | Deployment risk assessment: whether & how to deploy? | |
| Reporting results & risk assessment to regulators, third parties, other labs, scientific community | (all stages) | | | |
| Security controls, e.g. model isolation and monitoring | (all stages) | | | |

After development and before deployment, external researchers or auditors (the “third party”) conduct a model evaluation. The third party may access the model via an API [15] and act as a red team. Finally, external auditors assess the model and make the final judgment on deployment safety, which enhances objectivity and transparency. [16] This approach is notable because it addresses potential risks at every stage of model development, enabling risk management to begin at the planning stage.
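The staged process described above can be read as a sequence of gates, each requiring certain evaluations before work continues. The sketch below is a hypothetical illustration of that idea; the gate names, the required evaluators, and the decision strings are assumptions and do not describe Google's actual tooling.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Evaluation:
    """Outcome of one model evaluation."""
    evaluator: str  # "internal" or "external"
    passed: bool    # True if no unacceptable risk was found


# Hypothetical rule: training needs an internal evaluation,
# deployment needs both internal and external evaluations.
REQUIRED_EVALUATORS = {
    "training": {"internal"},
    "deployment": {"internal", "external"},
}


def gate_decision(gate: str, evaluations: list) -> str:
    """Decide whether to pass a gate given the evaluations collected so far."""
    present = {evaluation.evaluator for evaluation in evaluations}
    missing = REQUIRED_EVALUATORS[gate] - present
    if missing:
        return "hold: missing " + ", ".join(sorted(missing)) + " evaluation"
    if not all(evaluation.passed for evaluation in evaluations):
        return "do not proceed: address findings and re-evaluate"
    return "proceed"


if __name__ == "__main__":
    internal_only = [Evaluation("internal", True)]
    both = internal_only + [Evaluation("external", True)]
    print("training:", gate_decision("training", internal_only))
    print("deployment (internal only):", gate_decision("deployment", internal_only))
    print("deployment (both):", gate_decision("deployment", both))
```

Making the external evaluation a hard requirement at the deployment gate mirrors the emphasis on independent verification: an internal pass alone is never enough to deploy in this sketch.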

So far, we have examined why assessing AI’s potential risks has become increasingly important and explored approaches to risk perception and assessment. We also saw that international efforts are underway to establish socially accepted and standardized risk assessment methods via frameworks. Additionally, real-world applications, such as the Veritas Framework and Google’s Safety & Governance Process, demonstrate how frameworks are used during AI planning and development.

In Part 2, we will explore specific cases of AI risk assessment in practice and the AI risk management measures currently being implemented or discussed in the EU and Korea.

References

- Shin & Kim (2024). National Assembly passes the AI Basic Act.

- PIPC (2025). DeepSeek Temporarily Suspends Its Application Service in Korea.

- NIST (2023). AI Risk Management Framework.

- Colorado General Assembly (2024). Consumer Protections for Artificial Intelligence: Concerning consumer protections in interactions with artificial intelligence systems.

- P. Slattery et al. (2024). The AI Risk Repository: A Comprehensive Meta-Review, Database, and Taxonomy of Risks From Artificial Intelligence.

- B. Xia et al. (2023). Towards Concrete and Connected AI Risk Assessment (C2AIRA): A Systematic Mapping Study.

- Monetary Authority of Singapore (2023). MAS Veritas Framework.

- Monetary Authority of Singapore (2023). FEAT Principles Assessment Case Studies.

- Google DeepMind (2023). Model evaluation for extreme risks.

- OpenAI (2023). GPT-4 system card.

- D. Raji et al. (2020). Closing the AI accountability gap: Defining an End-to-End Framework for internal algorithmic auditing.

- E. Bluemke et al. (2023). Exploring the relevance of data Privacy-Enhancing technologies for AI governance use cases.

- J. Mökander et al. (2023). Auditing large language models: a three-layered approach.

▶ This content is protected by the Copyright Act and is owned by the author or creator.

▶ Secondary processing and commercial use of the content without the author/creator’s permission is prohibited.

Financial Consulting Team at Samsung SDS