Background of Trustworthy AI Systems [1]

As AI systems take over decisions and tasks traditionally handled by humans, public interest and controversy have grown over whether human ethics can be applied to AI. AI systems with relatively easy-to-understand reasoning processes that assist experts in making decisions and taking action, such as expert systems, have mainly been used internally, and the focus has been on improving their performance and expanding their applications.

* AI system: Software developed with machine learning, logic- and knowledge-based, statistical, or similar approaches that, for human-defined purposes, produces outputs such as content, predictions, recommendations, and decisions that influence the environments it interacts with (AI, H., 2019)

However, 5G wireless networks that spread information quickly, GPUs with greatly improved numerical computation speed, and the AI learning algorithms commonly referred to as deep learning have accelerated the development and spread of new AI systems that deal directly with general consumers in place of humans.

At the same time, these systems have become so complex that most people cannot understand the reasoning behind their decisions (the so-called black box problem), which increases distrust of AI. AI also has to meet changing social norms that emphasize fairness and transparency.

Leaders in AI technology, such as the EU and the United States, have put legal accountability for AI on the social agenda. Korea is following, with new laws and guidelines announced by the Ministry of Science and ICT, the Korea Communications Commission, and the Financial Services Commission. The era of AI ethics regulation has begun in earnest.

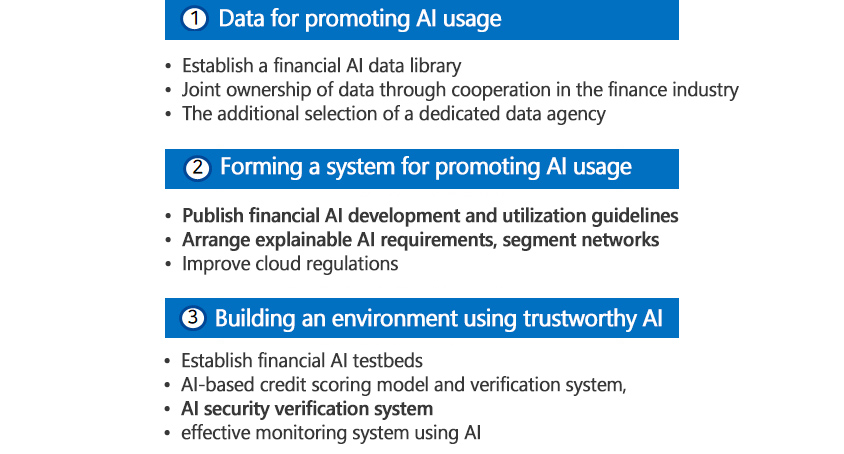

- Establish a financial AI data library, joint ownership of data through cooperation in the finance industry, and the additional selection of a dedicated data agency

- Publish financial AI development and utilization guidelines, arrange explainable AI requirements, segment networks, and improve cloud regulations

- Establish financial AI testbeds, an AI-based credit scoring model and verification system, an AI security verification system, and an effective monitoring system using AI

AI system and ESG management

As circumstances change at home and abroad, conglomerates and big tech companies that provide AI-based services to general consumers are preparing AI ethics principles and developing AI systems based on them. This is closely related to the ESG management that many companies pursue.

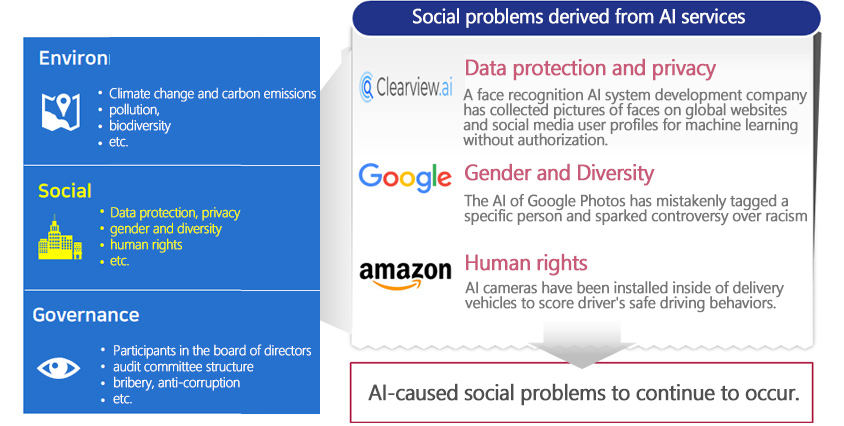

As the examples in [Figure 2] show, the “social” pillar of ESG management covers a company's responsibility to protect individuals' privacy and to prohibit unfair discrimination based on the groups an individual belongs to. AI services seriously violate these principles if they discriminate against individuals on grounds such as gender or country of origin.

- Environmental - Climate change and carbon emissions, pollution, biodiversity, etc.

- Social - Data protection, privacy, gender and diversity, human rights, etc.

- Governance - Participants in the board of directors, audit committee structure, bribery, anti-corruption, etc.

- Clearview.ai - Data protection and privacy: The facial recognition AI developer collected face images from websites and social media user profiles worldwide for machine learning without authorization.

- Google - Gender and diversity: The AI in Google Photos mistakenly tagged a specific person, sparking controversy over racism.

- Amazon - Human rights: AI cameras were installed inside delivery vehicles to score drivers' safe-driving behavior.

- → AI-caused social problems continue to occur, highlighting the importance of AI ethics.

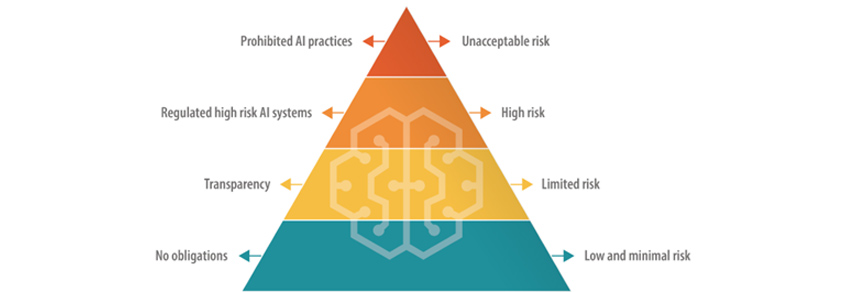

Today, most leading digital companies recommend products and services based on individuals’ preferences, and the range of AI applications keeps expanding: from chatbots to AI voice consultants, from loan reviews to abnormal transaction detection, and from facial recognition to autonomous driving. Problems in some of these systems can seriously affect an individual's privacy and even life. The EU has therefore set additional regulations for such high-risk AI systems. [Figure 3]

- Unacceptable risk: prohibited AI practices

- High risk: regulated high-risk AI systems

- Limited risk: transparency obligations

- Low and minimal risk: no obligations

In particular, the EU established strong regulations through the AI Act [4] under the consent procedure for its member states. The act states that high-risk AI systems, which may negatively affect human health, safety, or fundamental rights, are banned from release, import, or distribution if their providers, importers, or distributors do not comply with the regulations.

What happens when companies fail to recognize and prepare for the risks in their AI systems? A well-known example is the chatbot ethics problem at a Korean startup in December 2020.

The startup released a chatbot service that offered private conversations to general consumers. While the service was running, private information, including addresses, was leaked. The chatbot also produced hate speech against minority groups, which caused social controversy. As a result, the service was shut down, and the company faced fines and lawsuits from victims for failing in its duty to protect private information. The incident drew widespread attention and prompted a fresh review of Korean legal systems with respect to the trustworthiness of AI services. The startup later updated its AI ethics guidelines, ethics checklist, and privacy statement to provide an improved service. [5]

Related concept on trustworthy AI

It is not easy to define abstract notions such as “trustworthy” or “ethical” in detail for AI systems. As a result, terms such as trustworthy AI, responsible AI, and ethical AI are used with similar frequency and toward a similar goal: protecting customers.

The growing body of regulations and academic research on AI systems is helping researchers define AI ethics. Through a meta-analysis, Jobin et al. (2019) examined how AI ethics and similar concepts are used in 84 documents containing AI ethics guidelines published since 2016. [Table 1]

The study found that transparency and related concepts were discussed most often (73 of 84 documents), followed by justice and fairness (68 of 84).

| Ethical principle | Number of documents | Included codes |
|---|---|---|
| Transparency | 73/84 | Transparency, explainability, explicability, understandability, interpretability, communication, disclosure, showing |
| Justice & fairness | 68/84 | Justice, fairness, consistency, inclusion, equality, equity, (non-)bias, (non-)discrimination, diversity, plurality, accessibility, reversibility, remedy, redress, challenge, access and distribution |
| Non-maleficence | 60/84 | Non-maleficence, security, safety, harm, protection, precaution, prevention, integrity (bodily or mental), non-subversion |
| Responsibility | 60/84 | Responsibility, accountability, liability, acting with integrity |
| Privacy | 47/84 | Privacy, personal or private information |
| Beneficence | 41/84 | Benefits, beneficence, well-being, peace, social good, common good |
| Freedom & autonomy | 34/84 | Freedom, autonomy, consent, choice, self-determination, liberty, empowerment |
| Trust | 28/84 | Trust |
| Sustainability | 14/84 | Sustainability, environment (nature), energy, resources (energy) |
| Dignity | 13/84 | Dignity |
| Solidarity | 6/84 | Solidarity, social security, cohesion |

Responsibility and ethics are generally ascribed to human beings, while trust is used for technologies and tools, where it is relatively easy to define and to measure with the many measurement tools already developed. AI trustworthiness therefore lends itself to a more scientific discussion than AI ethics. However, this article does not distinguish between the two and emphasizes AI ethics, in order to treat AI systems as entities and link them to regulations.

Global standardization and technical status

Some developed countries have moved quickly to introduce bills on AI system ethics. This has spurred the growth of international standards and of the technology and solution market, for example in establishing metrics that standardize risk prevention for AI services and in monitoring services for companies.

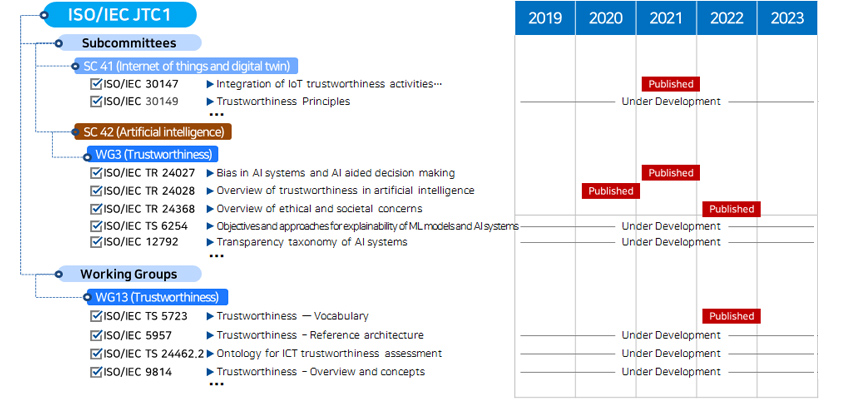

First, consider standardization trends. ISO/IEC has been developing standards for trustworthy AI and publishing them as they are completed. [Figure 4] Companies can prepare for future verification schemes or inspections by external authorities by using these standards to set the working standards needed to develop and manage AI systems.

- Subcommittees
  - SC 41 (Internet of things and digital twin)
    - ISO/IEC 30147: Integration of IoT trustworthiness activities... (published 2021)
    - ISO/IEC 30149: Trustworthiness principles (under development, 2019-23)
  - SC 42 (Artificial intelligence)
    - WG3 (Trustworthiness)
      - ISO/IEC TR 24027: Bias in AI systems and AI aided decision making (published 2021)
      - ISO/IEC TR 24028: Overview of trustworthiness in artificial intelligence (published 2020)
      - ISO/IEC TR 24368: Overview of ethical and societal concerns (published 2022)
      - ISO/IEC TS 6254: Objectives and approaches for explainability of ML models and AI systems (under development, 2019-23)
      - ISO/IEC 12792: Transparency taxonomy of AI systems (under development, 2019-23)
- Working Groups
  - WG13 (Trustworthiness)
    - ISO/IEC TS 5723: Trustworthiness – Vocabulary (published 2022)
    - ISO/IEC 5957: Trustworthiness – Reference architecture (under development, 2019-23)
    - ISO/IEC TS 24462.2: Ontology for ICT trustworthiness assessment (under development, 2019-23)
    - ISO/IEC 9814: Trustworthiness – Overview and concepts (under development, 2019-23)

There are two types of technologies for AI ethics.

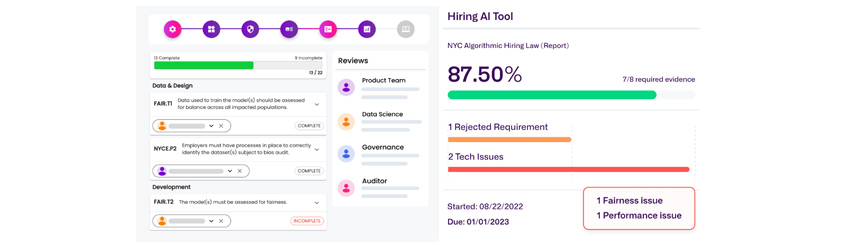

The first is measurement technology. It helps quantitatively evaluate AI ethics compliance at each stage of data collection, learning, and evaluation, checking whether an AI system meets requirements for fairness, performance, robustness, and privacy protection. Integrating it with MLOps can minimize system risks, and many companies abroad already offer such products. For example, the startup Credo AI has released Python-based source code as an open-source project called Credo Lens and provides an AI governance platform service that connects with CI/CD and monitoring apps. [Figure 5]

[Figure 5] AI ethics monitoring screen on Credo AI [8]
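To make the idea of quantitative measurement concrete, the following is a minimal sketch of how a performance metric (accuracy) and a fairness metric (demographic parity difference) could be computed and compared against a threshold in a pipeline. It does not use or reproduce the Credo Lens API. The data, the function names, and the `MAX_PARITY_GAP` threshold are all hypothetical and chosen only for illustration.

```python
from collections import defaultdict


def selection_rates(predictions, groups):
    """Return the share of positive (1) predictions for each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += pred
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_difference(predictions, groups):
    """Gap between the highest and lowest group selection rates (0 means parity)."""
    rates = selection_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())


def accuracy(predictions, labels):
    """Share of predictions that match the true labels."""
    return sum(p == y for p, y in zip(predictions, labels)) / len(labels)


# Hypothetical loan-approval outputs (1 = approved) for two groups.
labels = [1, 0, 1, 1, 0, 1, 0, 0]
predictions = [1, 0, 1, 1, 0, 0, 0, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

report = {
    "accuracy": accuracy(predictions, labels),
    "demographic_parity_difference": demographic_parity_difference(predictions, groups),
}

# Hypothetical policy threshold; a real value would come from a company's own governance rules.
MAX_PARITY_GAP = 0.2
report["fairness_check_passed"] = report["demographic_parity_difference"] <= MAX_PARITY_GAP
print(report)
```

In this toy data the model is fairly accurate but approves no one in group B, so the fairness check fails even though the performance metric looks acceptable. A check of this kind could run as one step of a CI/CD pipeline, which is the sort of integration the paragraph above describes.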


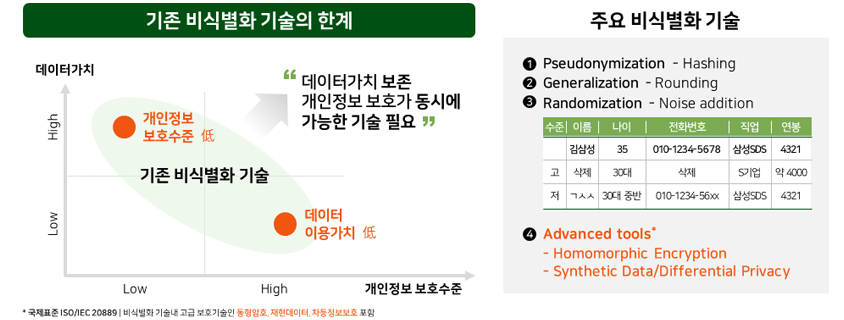

The second is enabling technology that helps provide ethical AI services. Examples include XAI (Explainable AI), which improves transparency in learning by opening up AI systems that otherwise function as a hard-to-understand black box, and homomorphic encryption, differential privacy, and synthetic data, which strengthen data security for protecting private information [Figure 6]. Applying these technologies in the learning process helps create ethical AI models and establish a response system for user inquiries and claims to protect customer rights.

- High data value, privacy protection still required: technology that preserves data value and protects private information at the same time is needed

- Medium data value and medium privacy protection: existing de-identification technology

- High privacy protection, data value still required: technology that preserves data value and protects private information at the same time is needed

- International standard ISO/IEC 20889: includes homomorphic encryption, synthetic data, differential privacy, and other advanced protection technologies among de-identification technologies
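As an illustration of how one of these technologies trades data value against privacy protection, the sketch below applies the Laplace mechanism, a standard way to achieve differential privacy for a counting query. The customer records and function names are hypothetical, and the fixed random seed is there only to make the sketch reproducible. A production system would need a vetted library rather than this simplified floating-point sampler.

```python
import random


def laplace_noise(scale, rng):
    """Sample Laplace(0, scale) noise as the difference of two exponential draws."""
    return scale * (rng.expovariate(1.0) - rng.expovariate(1.0))


def private_count(records, predicate, epsilon, rng):
    """Release a count with epsilon-differential privacy.

    A counting query changes by at most 1 when one record is added or
    removed, so its sensitivity is 1 and the noise scale is 1 / epsilon.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    true_count = sum(1 for r in records if predicate(r))
    return true_count + laplace_noise(1.0 / epsilon, rng)


# Hypothetical customer records: (age, has_overdue_loan)
records = [(34, True), (51, False), (29, True), (45, False), (62, True)]
rng = random.Random(0)  # fixed seed only to make the sketch reproducible

for epsilon in (0.1, 1.0, 10.0):
    noisy = private_count(records, lambda r: r[1], epsilon, rng)
    print(f"epsilon={epsilon}: noisy overdue count = {noisy:.2f} (true count is 3)")
```

A smaller `epsilon` adds more noise, which protects individuals more strongly but reduces the value of the released statistic. A larger `epsilon` does the opposite. This is the same tension between data value and privacy protection level that the figure describes.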

AI governance for social responsibility

Lastly, let’s discuss what companies should do to provide trustworthy and ethical AI services as part of ESG management. Abroad, major international platform companies such as Google and Meta first announce AI ethics principles and then manage the risks of AI systems through an internal control framework. In Korea, leading companies and financial firms have announced AI ethics guidelines and plan to develop AI services and protect consumers based on them. [Figure 7]

[Figure 7] AI ethics charter (Samsung SDS) [9]


Companies do not have to start from square one. Many large conglomerates and SMEs that provide services directly to consumers, and financial companies that handle a wide range of customer data, including MyData, already have internal control systems and undergo regular inspections by external authorities.

However, separate AI governance is required because of the technical characteristics of AI systems. For example, quality and security tests for conventional applications are based on code inspection, whereas AI inference happens inside the model, in the black box. Existing methods alone therefore cannot verify AI systems sufficiently.
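One way to verify a model whose internals cannot be inspected is to test its behavior from the outside. The sketch below is a minimal, assumption-laden illustration of that idea: it treats a model as an opaque function and measures how often its prediction flips when inputs are slightly perturbed (a robustness probe) or when only a protected attribute is swapped (a fairness probe). The `black_box_model` here is a hypothetical stand-in with a deliberately built-in bias, not a real service.

```python
import random


def black_box_model(features):
    """Stand-in for an opaque model; a tester would observe only its outputs."""
    income, debt, gender = features
    # Deliberate, hypothetical bias so the fairness probe has something to find.
    score = 0.6 * income - 0.8 * debt + (0.3 if gender == "M" else 0.0)
    return 1 if score > 0.5 else 0


def flip_rate(model, inputs, perturb):
    """Share of inputs whose prediction changes after applying `perturb`."""
    changed = sum(model(x) != model(perturb(x)) for x in inputs)
    return changed / len(inputs)


rng = random.Random(42)
inputs = [(rng.uniform(0, 2), rng.uniform(0, 1), rng.choice("MF")) for _ in range(1000)]


def jitter(x):
    """Robustness probe: add small noise to the numeric features."""
    income, debt, gender = x
    return (income + rng.uniform(-0.01, 0.01), debt + rng.uniform(-0.01, 0.01), gender)


def swap_gender(x):
    """Fairness probe: change only the protected attribute."""
    income, debt, gender = x
    return (income, debt, "F" if gender == "M" else "M")


print("flip rate under small noise:", flip_rate(black_box_model, inputs, jitter))
print("flip rate when gender is swapped:", flip_rate(black_box_model, inputs, swap_gender))
```

Neither probe reads the model's code or weights. A nonzero flip rate under the gender swap signals that the protected attribute influences decisions, which code inspection of the surrounding application would not reveal.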

Therefore, once AI ethical principles are established, companies need a thorough diagnosis to check whether their products and services that use AI technologies comply with the fairness, performance, robustness, and privacy (differential privacy) standards discussed above. They should also check whether anything has been overlooked because of technical specifics and whether additional metrics or monitoring services are needed in light of the AI ethical principles.

However, excessively strict internal regulations must not undermine companies’ development of innovative services with AI technologies. That is why the EU published guidelines, rather than relying only on the material reviewed for establishing the AI Act and on strict regulatory measures from relevant government agencies, to encourage companies to create their own standards and principles for self-regulation.

AI governance should be established so that a company's intellectual property is protected from leaks through verified technology, so that it suits the characteristics of the business, and so that it does not harm the competitiveness of other companies. The technologies and global standards introduced above are a good starting point for addressing these concerns, protecting consumers, and preparing for AI ethics management.

References

1. AI, H. (2019). High-level expert group on artificial intelligence. Ethics guidelines for trustworthy AI, 6.

2. 『Methods to Encourage the Use of Artificial Intelligence and Secure Trust』, Financial Services Commission press release, 2022. 8.4

3. Artificial intelligence act (europa.eu)

4. Kop, M. (2021, September). EU Artificial Intelligence Act: The European Approach to AI. Stanford-Vienna Transatlantic Technology Law Forum, Transatlantic Antitrust and IPR Developments, Stanford University, Issue.

5. Scatter Lab. Announce AI Chatbot Ethics Check List - ZDNet Korea

6. Jobin, A., Ienca, M., & Vayena, E. (2019). The global landscape of AI ethics guidelines. Nature Machine Intelligence, 1(9), 389-399.

7. SC 42 - JTC 1 (jtc1info.org)

8. https://www.credo.ai/solutions

9. https://www.samsungsds.com/kr/digital_responsibility/ai_ethics.html

▶ The content is protected by the copyright law and the copyright belongs to the author.

▶ The content is prohibited to copy or quote without the author's permission.
