AI's New Frontiers – Attention, Emergence, and Agentic AI

– Review of the Keynote Speech at the Korea Information Processing Society’s 2025 IT21 Global Conference

Young-june Gwon, head of Samsung SDS Research, delivered a keynote speech titled "From Attention to Language Models: Toward Agentic AI" at the 2025 IT21 Global Conference, organized by the Korea Information Processing Society.

Have you ever heard of the term "emergence"? In his keynote speech, Young-june Gwon explained the concept of emergence and its profound impact on the evolution of AI technology. He also shared an intriguing story about how the concept of agents appeared in a book written nearly 40 years ago.

Let’s take a closer look at the key highlights of his speech!

The Attention Mechanism and the Foundation of AI Technology

The 2025 IT21 Global Conference of the Korea Information Processing Society, themed "Everything for AI, AI for Everything," was held at the Magellan Hall of Samsung SDS West Building from June 30 (Monday) to July 3 (Thursday).

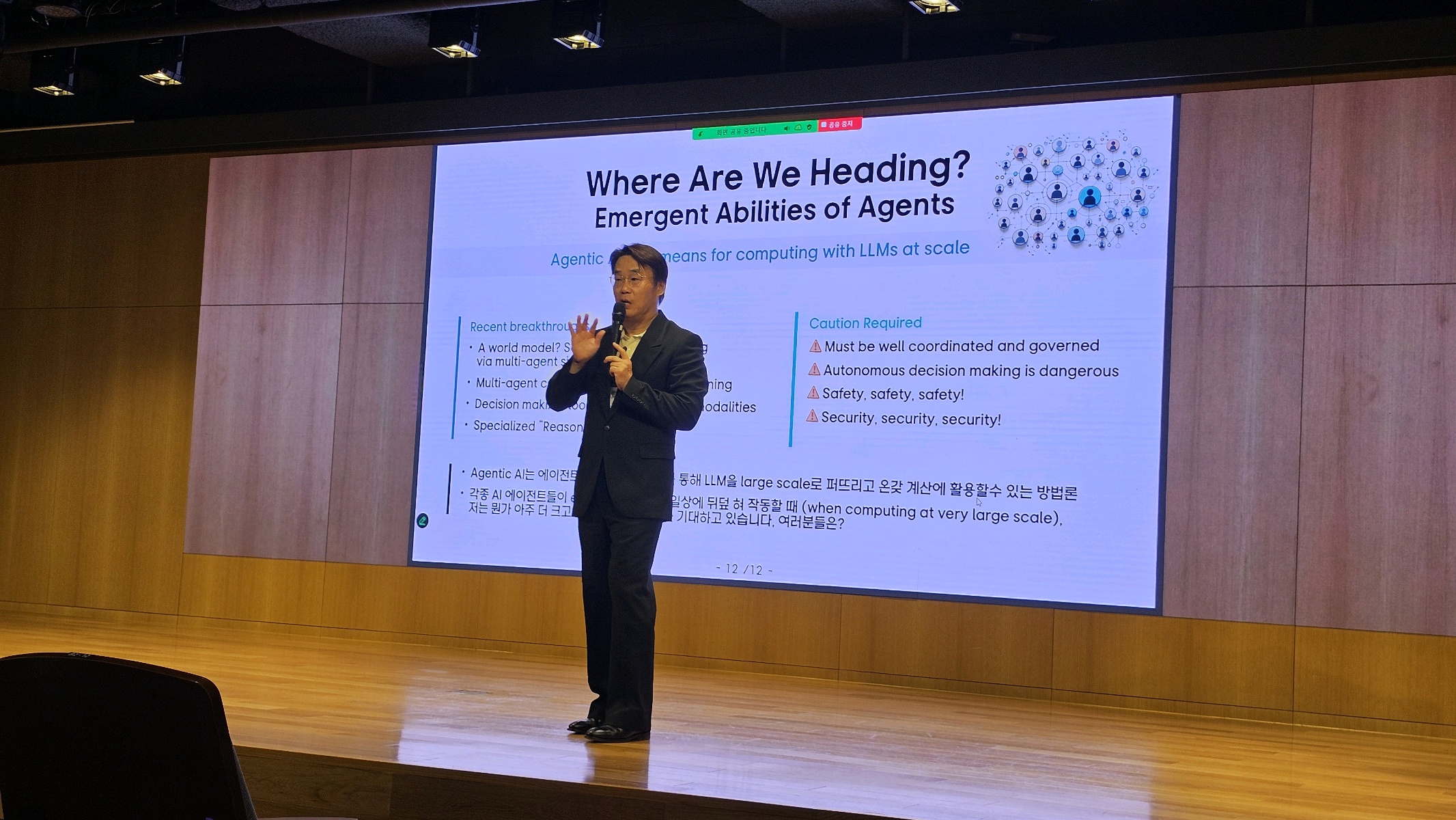

Young-june Gwon delivered his keynote, "From Attention to Language Models: Toward Agentic AI," on July 1, offering attendees valuable insights into advancements in AI technology.

[Photo 1] Greeting the audience at the beginning of his keynote speech

The keynote began with the concept of attention. Attention is a mechanism that computes a relevance score for every pair of words (tokens) in the input, so that each word's representation can draw on the other words most relevant to it. This mechanism enabled AI language models not only to learn from the vast amount of information available online but also to extend their understanding beyond it. In addition, major advances in computing power were pivotal in delivering generative AI solutions such as large language models (LLMs). Even with substantial computing power, however, the successful application of AI did not happen immediately.
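
As a hedged, minimal illustration of "scoring every pair of input words," the pure-Python sketch below implements scaled dot-product self-attention as used in the Transformer: each query vector is scored against every key vector, the scores are scaled by √d_k and normalized with a softmax, and the output is the weighted average of the value vectors. The three-token embeddings are made up, and the learned projection matrices (W_Q, W_K, W_V) and multiple heads of a real model are deliberately omitted.

```python
import math
from typing import List, Tuple

Vector = List[float]

def softmax(xs: Vector) -> Vector:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))

def attention(queries: List[Vector], keys: List[Vector],
              values: List[Vector]) -> Tuple[List[Vector], List[Vector]]:
    """Scaled dot-product attention; returns outputs and the weight matrix."""
    d_k = len(keys[0])
    outputs, weights = [], []
    for q in queries:
        # One score per (query, key) pair, i.e. per pair of tokens
        scores = [dot(q, k) / math.sqrt(d_k) for k in keys]
        w = softmax(scores)
        weights.append(w)
        # Each output is the attention-weighted average of the value vectors
        outputs.append([sum(wi * v[j] for wi, v in zip(w, values))
                        for j in range(len(values[0]))])
    return outputs, weights

# Toy "sentence" of three tokens with made-up 2-d embeddings (Q = K = V = X)
X = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
out, w = attention(X, X, X)
for row in w:
    print([round(x, 3) for x in row])
```

The 3 × 3 weight matrix holds one entry per token pair, which is also why standard attention's cost grows quadratically with sequence length.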

The Power of 'Emergence'

[Photo 2] The presenter explaining the concept of Emergence

[Photo 3] The audience listens intently

So what made the successful application of AI possible? Young-june Gwon's answer was emergence. Emergence refers to phenomena or characteristics that arise in ways that could not be predicted from their individual components or lower-level elements. In other words, AI did not advance simply because models gained more parameters. Rather, unexpected capabilities such as reasoning, coding, mathematical problem solving, and creativity emerged without being explicitly present in the training data, bringing about a paradigm shift! The move from simple pattern matching to true understanding and reasoning, from task-specific AI to general-purpose artificial intelligence, and from AI as a mere tool to AI as a collaborative partner: this is precisely how emergence has manifested in AI technology. It is through this power of emergence that AI has been put to successful use.

Marvin Minsky's Foresight on Agents?

In his keynote speech, Young-june Gwon defined 'Agentic AI' as a methodology that leverages agents to scale the deployment of LLMs and utilize them for diverse computational tasks. He explained that these agents facilitate capabilities such as decision-making, tool usage, and multi-modality* processing. Additionally, he highlighted the potential to enhance reasoning through collaboration among multiple agents.

*Modality: In AI, text, images, voice, and other types of data are each referred to as a modality.
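
The talk did not present code, but the loop below is a minimal sketch, under stated assumptions, of the decision-making and tool-usage capabilities described above. A hypothetical `stub_policy` stands in for the LLM call that decides the next step, and a toy `calculator` is the only tool; real agent frameworks add prompting, output parsing, error handling, and richer memory.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional

@dataclass
class Action:
    tool: Optional[str]  # name of the tool to call, or None to finish
    argument: str        # tool input, or the final answer when tool is None

def calculator(expr: str) -> str:
    # Toy tool: supports only "a + b" and "a * b"
    a, op, b = expr.split()
    x, y = float(a), float(b)
    return str(x + y if op == "+" else x * y)

TOOLS: Dict[str, Callable[[str], str]] = {"calculator": calculator}

def stub_policy(task: str, history: List[str]) -> Action:
    """Hypothetical stand-in for an LLM deciding the next step."""
    if not history:
        return Action(tool="calculator", argument=task)
    return Action(tool=None, argument=f"The answer is {history[-1]}")

def run_agent(task: str, policy=stub_policy, max_steps: int = 5) -> str:
    history: List[str] = []
    for _ in range(max_steps):
        action = policy(task, history)             # decision-making
        if action.tool is None:
            return action.argument                 # final answer
        observation = TOOLS[action.tool](action.argument)  # tool usage
        history.append(observation)                # short-term memory
    return "Stopped: step limit reached"

print(run_agent("12 * 7"))  # prints: The answer is 84.0
```

The step limit is a design choice worth keeping even in a sketch: it guarantees that a policy which never decides to finish cannot loop forever.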

Interestingly, the concept of agents dates back to Marvin Minsky's book The Society of Mind (1986). Minsky described the human brain as a network of specialized agents, each with its own function, and argued that intelligence arises from the interactions among them. Young-june Gwon also pointed out that Minsky had already outlined concepts such as communication, memory, and learning in The Society of Mind, areas that remain underdeveloped in today's LLMs.

The Emergent Capabilities of Agents and the New Future

[Photo 4] Asking questions to the audience

In the final part of his keynote, Young-june Gwon stated that significant advancements would follow once LLMs spread worldwide in the form of individual agents. He was optimistic that remarkable outcomes would emerge when these individual agents move on to agent-to-agent collaboration, working together as cooperative agents. He also emphasized that such collaboration must take place in a secure and safe environment.
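
The keynote did not specify a protocol for agent-to-agent collaboration. As a hedged illustration of specialized agents checking each other's work over an authenticated channel, the sketch below pairs a hypothetical solver agent with a hypothetical reviewer agent and signs every message with HMAC-SHA256 under an assumed pre-shared key. The task (factoring a small integer), the agent names, and the key are all assumptions chosen so the reviewer can verify cheaply what the solver found by search.

```python
import hashlib
import hmac
import math
from dataclasses import dataclass

SHARED_KEY = b"demo-key-not-for-production"  # hypothetical pre-shared key

@dataclass
class Message:
    sender: str
    content: str
    tag: str  # HMAC-SHA256 over "sender|content"

def _mac(sender: str, content: str, key: bytes) -> str:
    return hmac.new(key, f"{sender}|{content}".encode(), hashlib.sha256).hexdigest()

def send(sender: str, content: str, key: bytes = SHARED_KEY) -> Message:
    return Message(sender, content, _mac(sender, content, key))

def verify(msg: Message, key: bytes = SHARED_KEY) -> bool:
    # compare_digest avoids leaking information through timing differences
    return hmac.compare_digest(_mac(msg.sender, msg.content, key), msg.tag)

def solver_agent(n: int) -> Message:
    # Specialist 1 (hypothetical): searches for a factorization p * q of n
    for p in range(2, math.isqrt(n) + 1):
        if n % p == 0:
            return send("solver", f"{p} {n // p}")
    return send("solver", f"1 {n}")  # no nontrivial factor found

def reviewer_agent(n: int, msg: Message) -> Message:
    # Specialist 2 (hypothetical): authenticates the message, then checks the
    # claim by a single multiplication instead of redoing the search
    if not verify(msg):
        return send("reviewer", "REJECT: bad signature")
    p, q = (int(s) for s in msg.content.split())
    ok = p * q == n and 1 < p < n
    return send("reviewer", "ACCEPT" if ok else "REJECT: no nontrivial factor")

def collaborate(n: int):
    proposal = solver_agent(n)
    verdict = reviewer_agent(n, proposal)
    return proposal.content, verdict.content

print(collaborate(91))  # ('7 13', 'ACCEPT')
```

A tampered or forged message is rejected before its content is even parsed, which is one simple way to read "collaboration in a secure environment"; production multi-agent systems would also need key management, authorization, and sandboxed tool access.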

Just as the concept of emergence discussed earlier revealed new capabilities in LLMs, he suggested that a next-level emergence beyond LLMs would arise when diverse agents collaborate seamlessly across expansive computing environments, deeply embedded in both business operations and daily life. He concluded his keynote by posing a thought-provoking question to the audience: 'What do you think?'

[One more piece of news! Session Presentations by Samsung SDS Researchers]

At the 2025 IT21 Global Conference, four researchers from Samsung SDS gave presentations during the session 'The Future of Computing: Already Here' (July 2).

(AI Research Team)

< Improving LLM Response Quality through Training Data Self-Curation >

He presented several studies on improving the quality of the training data used to fine-tune LLMs, and introduced methods such as Self-Curation, which automatically selects training data for preference optimization techniques such as reinforcement learning or Direct Preference Optimization (DPO).
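
To show where curated preference data ends up, the sketch below computes the standard per-pair DPO loss, −log σ(β · [(log π_θ(y_w|x) − log π_ref(y_w|x)) − (log π_θ(y_l|x) − log π_ref(y_l|x))]), from sequence log-probabilities. The `curate` function and its score-gap rule are purely hypothetical placeholders, not the Self-Curation method from the presentation; they only illustrate that selection happens before the loss is applied. β = 0.1 is an assumed default.

```python
import math
from dataclasses import dataclass
from typing import List

@dataclass
class PreferencePair:
    prompt: str
    chosen: str
    rejected: str
    chosen_score: float    # hypothetical quality score (e.g. from a judge model)
    rejected_score: float

def curate(pairs: List[PreferencePair], min_gap: float) -> List[PreferencePair]:
    # Hypothetical selection rule, NOT the presenter's method: keep only pairs
    # where the chosen response is clearly better than the rejected one
    return [p for p in pairs if p.chosen_score - p.rejected_score >= min_gap]

def dpo_loss(logp_chosen: float, logp_rejected: float,
             ref_logp_chosen: float, ref_logp_rejected: float,
             beta: float = 0.1) -> float:
    """Per-pair DPO loss: -log sigmoid(beta * margin)."""
    # How much more the policy, relative to the frozen reference model,
    # favors the chosen response over the rejected one (in log-probability)
    margin = (logp_chosen - ref_logp_chosen) - (logp_rejected - ref_logp_rejected)
    z = beta * margin
    # -log(sigmoid(z)) == softplus(-z), computed in a numerically stable form
    return max(-z, 0.0) + math.log1p(math.exp(-abs(z)))
```

When the policy and reference agree (margin 0) the loss is log 2; it falls below log 2 as the policy shifts probability toward the chosen response.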

(Cloud Research Team)

< PAISE: A PIM (Processing-in-Memory) Based LLM Inference Acceleration Scheduling Engine >

He presented findings demonstrating how PIM technology can enhance the inference speed of transformer-based LLMs by up to 48.3%. Additionally, he outlined methods that significantly improve the efficiency of AI application services, optimizing both performance and resource utilization.

(Security Research Team)

< Research and Industrial Application of AI-Based Test Auto-Generation Technology >

He discussed the latest research and development on EvoFuzz, which uses large language models to improve the readability of automatically generated unit tests and supports JDK 11 and JDK 17 for practical use in industrial environments.

(Security Research Team)

< Privacy-Enhancing Technologies and Use Cases >

He presented the concepts and operational principles of PSI (Private Set Intersection) and PIR (Private Information Retrieval) protocols, along with the latest research findings. Additionally, he highlighted real-world applications of these protocols, including Microsoft's Password Monitoring, Google's Password Checkup, and Apple's CSAM detection.
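
As a hedged illustration of the two textbook ideas, the toy Python sketch below simulates both parties in one process. The first function is a Diffie-Hellman-style PSI: each side blinds hashed items with a secret exponent, and because (h^a)^b = (h^b)^a, matching items can be compared without revealing non-matching ones. The second is the classic two-server XOR PIR: the client learns one record while each non-colluding server sees only a uniformly random subset of indices. The modulus, function names, and parameters are assumptions chosen for readability, and the production systems mentioned above are considerably more elaborate.

```python
import hashlib
import math
import secrets

# Toy parameter: the Mersenne prime 2^127 - 1. NOT a secure choice for real PSI.
P = 2**127 - 1

def hash_to_group(item: str) -> int:
    h = int.from_bytes(hashlib.sha256(item.encode()).digest(), "big") % P
    return h or 1  # 0 is not in the multiplicative group mod P

def random_exponent() -> int:
    # gcd(e, P - 1) == 1 makes x -> x^e a bijection, so distinct items stay distinct
    while True:
        e = secrets.randbelow(P - 3) + 2
        if math.gcd(e, P - 1) == 1:
            return e

def dh_psi(client_set, server_set):
    """Diffie-Hellman-style PSI; the client learns the intersection."""
    a, b = random_exponent(), random_exponent()  # client's and server's secrets
    client_items = sorted(client_set)
    # Client -> server: blinded items H(x)^a
    blinded = [pow(hash_to_group(x), a, P) for x in client_items]
    # Server -> client: H(x)^(ab) in the same order, plus its own items H(y)^b
    double_blinded = [pow(v, b, P) for v in blinded]
    server_blinded = [pow(hash_to_group(y), b, P) for y in server_set]
    # Client: (H(y)^b)^a equals H(x)^(ab) exactly when H(x) == H(y)
    server_double = {pow(v, a, P) for v in server_blinded}
    return {x for x, v in zip(client_items, double_blinded) if v in server_double}

def pir_two_server(database, index):
    """Two-server XOR PIR over a list of ints; servers must not collude."""
    n = len(database)
    s1 = {i for i in range(n) if secrets.randbits(1)}  # uniformly random subset
    s2 = s1 ^ {index}  # same subset with the wanted index toggled

    def server_answer(subset):
        acc = 0
        for i in subset:
            acc ^= database[i]
        return acc

    # Every record except database[index] appears in both answers and cancels
    return server_answer(s1) ^ server_answer(s2)

print(dh_psi({"alice", "bob", "carol"}, {"bob", "carol", "dave"}))
print(pir_two_server([5, 17, 42, 99], 2))  # 42
```

Even in this toy form, the PSI exchange reveals set sizes to both parties, and the PIR scheme's privacy holds only if the two servers never share the subsets they received.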