GPU Optimization and PIM Technology Driving Next-Generation Cloud Innovation

Key Points Summary

- Samsung SDS Cloud Research Team - Comprising three labs dedicated to computing systems, networks, and high-performance computing, the team is at the forefront of developing cloud technologies tailored for the AI era.

- GPU Efficiency and Next-Generation AI Infrastructure - The FireQ project, designed to optimize AI workloads, and ongoing research into next-generation AI accelerators enable the delivery of cost-effective and efficient computing resources.

- Technological Advancement through Global Collaboration - Through a strategic partnership with UC Berkeley's Sky Computing Lab, the team is working to make cloud and AI services more accessible to the public.

With over 25 years of experience navigating shifts in technology paradigms across five corporate research institutes, Minsung Jang, leader of the Cloud Research Team, returned to Samsung after 13 years to take on new challenges. In response to NVIDIA's roughly 90% share of the RDMA (Remote Direct Memory Access) market, his team developed CORN (Cloud-optimized RDMA Networking). Through collaborations with UC Berkeley, the team is also working to democratize AI and push the boundaries of cloud technology. Here, Minsung Jang, who leads the Cloud Research Team at Samsung SDS, shares his vision for the future of cloud innovation.

“I sensed the arrival of the cloud era through diverse experiences”

What experiences have you had before joining Samsung SDS’s Cloud Research Team?

I joined Samsung SDS in late August 2021. Before that, I worked at Samsung Electronics and Samsung Advanced Institute of Technology, and after earning my Ph.D. in the United States, I was at AT&T Labs Research. I seem to have a strong connection with Samsung, having worked at Samsung Electronics and later returning to Samsung SDS.

I joined Samsung Electronics in 2003 and contributed to system software development. It was a period of rapid growth for Samsung Electronics, and I had the privilege of witnessing the mobile phone business rise to become the world’s second largest.

However, after 2007, the paradigm of mobile products shifted toward more personalized computing. Eager to experience this global transformation firsthand, I decided to study abroad in the U.S., bringing my family along.

How did your experience studying in the U.S. influence your career?

Witnessing and participating in the shift of technological paradigms was a profound learning experience for me. During my Ph.D. studies at Georgia Tech around 2012-2013, the concept of SDN (Software-Defined Networking) began to take shape. Coincidentally, the company I was working for at the time was actively integrating SDN technology into its next-generation communication infrastructure. I was involved in research and development efforts to transform existing communication equipment into software that operates in the cloud, right at the forefront of this technological paradigm shift.

These experiences gradually led me to believe that “computing resources will increasingly converge and grow infinitely.” This conviction ultimately guided me to focus my career on cloud technology.

Minsung Jang giving an interview


“Triad of computing systems, network, and high-performance computing”

What is the structure of Samsung SDS Cloud Research Team?

Our Cloud Research Team is structured around the three core components of cloud infrastructure. Computing Systems Research Lab oversees GPUaaS services and storage technologies on our SCP (Samsung Cloud Platform). It manages large-scale GPU resources essential for AI research and services, develops GPU platforms for AI engineers, operates clusters, and advances automation technologies for AI training workload management.

Network Research Lab focuses on networking, the second pillar of cloud infrastructure. It conducts research on both general cloud networks and high-performance networks that use RDMA technology.

What is RDMA?

RDMA (Remote Direct Memory Access) is a high-performance networking technology that enables direct memory access and data transfer between different systems over a network, bypassing the CPU and operating system. Traditional data transmission methods involve multiple memory copies and kernel-level interventions on both the sender and receiver sides, leading to increased latency and inefficient use of computational resources. RDMA eliminates these inefficiencies by enabling zero-copy memory-to-memory communication, resulting in significantly reduced latency and enhanced throughput.
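The difference between copy-based transfer and zero-copy access can be illustrated in miniature. The Python sketch below is only an analogy and performs no RDMA: a `bytearray` stands in for a registered memory region, each `bytearray(...)` call models one intermediate copy in a traditional send path, and a `memoryview` models zero-copy access to the same memory. The function names `copy_path` and `zero_copy_path` are hypothetical.

```python
def copy_path(buffer: bytearray) -> bytearray:
    """Copy-based style: every hop materializes a fresh copy of the payload."""
    kernel_buf = bytearray(buffer)       # copy 1: sender buffer -> "kernel" buffer
    receive_buf = bytearray(kernel_buf)  # copy 2: "kernel" buffer -> receiver buffer
    return receive_buf


def zero_copy_path(buffer: bytearray) -> memoryview:
    """Zero-copy style: the receiver gets a view of the same memory, no copy."""
    return memoryview(buffer)


payload = bytearray(b"gradient-chunk")
copied = copy_path(payload)
viewed = zero_copy_path(payload)

# The sender overwrites the first 8 bytes in place (same length, so no resize).
payload[0:8] = b"GRADIENT"

print(bytes(copied))  # b'gradient-chunk'  (the copies are now stale)
print(bytes(viewed))  # b'GRADIENT-chunk'  (the view sees the same memory)
```

Real RDMA goes further than this analogy: the network adapter reads and writes registered memory directly, so neither the remote CPU nor the kernel handles the payload at all.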

Lastly, High-Performance Computing Lab is dedicated to research on architecture-aware computing, a field that develops software optimized for new hardware components in cloud environments. The primary objective is to ensure that software meets customer performance expectations while minimizing resource consumption and operational costs, ultimately achieving efficient computing solutions.

What is Architecture-Aware Computing?

Architecture-Aware Computing refers to a computing paradigm where software and algorithms are designed to understand and exploit the structure and characteristics of the underlying hardware architecture. Rather than simply executing code, this approach maximizes performance by taking into account factors such as CPU, GPU, memory hierarchy, cache, network bandwidth, parallelism, and data movement costs. It has become increasingly critical in heterogeneous computing environments, edge-cloud collaboration, and high-performance computing (HPC).
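A classic example of architecture-aware code is loop tiling (cache blocking) for matrix multiplication: the loops are restructured so that small tiles of the inputs are reused while they are still in cache. The Python sketch below shows the loop structure only; the tile size of 8 is an assumed placeholder (in practice it is tuned so the tiles fit in the target cache), and the speedup itself appears in compiled languages, not in pure Python.

```python
def matmul_naive(a, b):
    """Reference triple loop: C = A @ B."""
    n, k, m = len(a), len(b), len(b[0])
    c = [[0.0] * m for _ in range(n)]
    for i in range(n):
        for j in range(m):
            s = 0.0
            for p in range(k):
                s += a[i][p] * b[p][j]
            c[i][j] = s
    return c


def matmul_tiled(a, b, tile=32):
    """Same result, but iterates over tile x tile blocks so each block of A, B,
    and C is reused many times while it is (ideally) resident in cache."""
    n, k, m = len(a), len(b), len(b[0])
    c = [[0.0] * m for _ in range(n)]
    for i0 in range(0, n, tile):
        for p0 in range(0, k, tile):
            for j0 in range(0, m, tile):
                for i in range(i0, min(i0 + tile, n)):
                    row_a, row_c = a[i], c[i]
                    for p in range(p0, min(p0 + tile, k)):
                        a_ip, row_b = row_a[p], b[p]
                        for j in range(j0, min(j0 + tile, m)):
                            row_c[j] += a_ip * row_b[j]
    return c


a = [[(i * 3 + j) % 7 for j in range(50)] for i in range(40)]
b = [[(i + 2 * j) % 5 for j in range(30)] for i in range(50)]
c = matmul_tiled(a, b, tile=8)
```

Both functions compute the same product; only the order of memory accesses changes, which is exactly the kind of hardware-driven decision architecture-aware computing is about.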


Minsung Jang engaging in a discussion about cloud technology with the lab leaders (From left) Jong-sung Kim (Network Research Lab), Seung-hoon Ha (Computing System Research Lab), Minsung Jang (Cloud Research Team Leader), and Ki-hyo Moon (High-Performance Computing Research Lab).

“Both FireQ and CORN aim for ‘GPU optimization’”

What are some of the key research projects undertaken by Cloud Research Team?

One notable project is FireQ (written with a capital Q). It aims to process customers' AI workloads efficiently and cost-effectively by analyzing those workloads to optimize GPU scheduling, so that GPUs can handle more workloads without compromising performance.

What is FireQ?

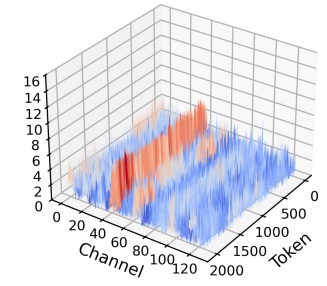

FireQ is a PTQ (Post-Training Quantization) framework developed by Samsung SDS’s Cloud Research Team for accelerating LLM inference. It incorporates an efficient GEMM (General Matrix Multiplication) kernel that uses a hybrid precision approach, combining INT4 (4-bit integer data format) and FP8 (8-bit floating-point data format) for matrix computations. A key innovation is a 3-stage pipeline that optimizes the prefill stage of FlashAttention-3 (a technique that minimizes memory reads and writes to speed up attention operations on GPUs), significantly reducing token processing latency. FireQ also uses channel-aware and RoPE (Rotary Positional Embedding)-aware scaling methods to mitigate the accuracy loss caused by quantization.

Image from FireQ: Fast INT4-FP8 Kernel and RoPE-aware Quantization for LLM Inference Acceleration

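To make the idea of post-training INT4 quantization concrete, here is a minimal sketch of symmetric per-output-channel INT4 weight quantization followed by a matrix-vector product. It is a generic technique, not FireQ's kernel: it assumes INT4 is applied to the weights, it keeps activations in full precision instead of FP8, and it omits FireQ's RoPE-aware scaling. The function names are hypothetical.

```python
def quantize_int4_per_channel(weights):
    """Symmetric INT4 quantization with one scale per output channel (row)."""
    q_rows, scales = [], []
    for row in weights:
        max_abs = max(abs(w) for w in row)
        scale = max_abs / 7 if max_abs > 0 else 1.0  # largest |w| maps to 7
        q = [max(-8, min(7, round(w / scale))) for w in row]  # clamp to INT4 range
        q_rows.append(q)
        scales.append(scale)
    return q_rows, scales


def int4_gemv(q_rows, scales, x):
    """y = W @ x with INT4 weights: accumulate per row, then apply its scale once."""
    return [scale * sum(qw * xv for qw, xv in zip(q, x))
            for q, scale in zip(q_rows, scales)]


W = [[0.12, -0.50, 0.33, 0.05],
     [1.40, 0.70, -2.10, 0.00]]
x = [1.0, 2.0, -1.0, 0.5]

q, s = quantize_int4_per_channel(W)
exact = [sum(w * xv for w, xv in zip(row, x)) for row in W]
approx = int4_gemv(q, s, x)
print(q)              # [[2, -7, 5, 1], [5, 2, -7, 0]]
print(exact, approx)  # approx is close to, but not equal to, exact
```

Per-channel scales keep one large weight from crushing the resolution of every other row; the remaining gap between `exact` and `approx` is the kind of accuracy loss that techniques like FireQ's channel-aware and RoPE-aware scaling aim to reduce.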

Another notable project is CORN, short for Cloud-optimized RDMA Networking, an acronym chosen to spell out the word “corn.” It is a networking solution designed to interconnect GPUs efficiently. With NVIDIA’s InfiniBand holding about 90% of the RDMA market, CORN was developed to give Samsung SDS its own distinct, tailored alternative.

What is CORN?

CORN (Cloud-optimized RDMA Networking) is an RDMA networking technology developed by Samsung SDS and optimized for cloud environments. It builds on existing RoCE (RDMA over Converged Ethernet) to better meet the demands of the cloud. CORN is engineered to substantially reduce network latency and CPU load in GPU-accelerated large-scale AI model training and distributed processing, while remaining compatible with essential cloud requirements such as multi-tenancy, virtualization, and security. In short, it lets cloud-native environments take advantage of RDMA's ultra-low latency and high bandwidth. We expect CORN to significantly improve training speeds when GPUaaS (GPU as a Service) is used for AI model training. A full transition to CORN across the entire infrastructure is not yet possible, but initial small-scale tests are already showing promising results, and we are optimistic about achieving favorable outcomes in the near future.


Minsung Jang explaining GPU optimization and next-generation cloud technology to the lab leaders (From left) Seung-hoon Ha (Computing System Research Lab), Minsung Jang, Ki-hyo Moon (High-Performance Computing Research Lab), Jong-sung Kim (Network Research Lab)

“Addressing GPU bottlenecks with PIM technology”

Could you elaborate on the recent PIM research that has been garnering attention?

PIM, or Processing In Memory, is a technology that embeds computing units directly within memory. In the conventional von Neumann architecture, data must be moved from memory to the processor before it can be computed on, which is inefficient. For GPUs, one of the key challenges is the bottleneck that occurs when data is transferred from memory to the compute units.

PIM addresses this issue by integrating computing units into the memory itself, so that some computation happens where the data already resides instead of after it has been moved. When data is read from memory, PIM can take on part of the work, reducing the GPU's computational load and distributing the workload more efficiently.
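A back-of-the-envelope calculation shows why this matters for memory-bound operations such as the matrix-vector products common in LLM inference. The sketch below assumes FP16 values (2 bytes each) and an idealized PIM device in which the weight matrix never leaves memory; it ignores real constraints such as bank-level data layout and the limited compute inside memory, so the ratio is an upper bound rather than a measured result.

```python
def bytes_moved_gemv(rows, cols, bytes_per_elem=2):
    """Rough bytes crossing the memory bus for y = W @ x, with W of shape rows x cols.

    Conventional: W and x are read out to the processor and y is written back.
    Idealized PIM: W stays inside memory; only x goes in and y comes out.
    """
    w = rows * cols * bytes_per_elem
    x = cols * bytes_per_elem
    y = rows * bytes_per_elem
    conventional = w + x + y
    pim = x + y
    return conventional, pim


conv, pim = bytes_moved_gemv(4096, 4096)
print(conv, pim, conv // pim)  # 33570816 16384 2049
```

In this idealized model, almost all of the traffic is the weight matrix itself, which is exactly the data movement PIM is designed to avoid.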

Our research on PAISE (PIM-Accelerated Inference Scheduling Engine), presented at HPCA 2025 (a leading forum for new ideas and research in computer architecture), follows this approach. To address bottlenecks in the attention mechanisms of transformer-based LLMs, we proposed an integrated scheduling framework that optimally divides tasks between GPUs and PIM. This improved LLM inference performance by up to 48.3% and reduced power consumption by 11.5%.
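One simple way to reason about splitting work between a GPU and PIM is arithmetic intensity (FLOPs per byte of memory traffic): operations below the GPU's roofline ridge point are memory-bound and are natural PIM candidates. The sketch below is a hypothetical heuristic for illustration, not PAISE's scheduling algorithm; the ridge point of 100 FLOPs/byte and the model dimensions are assumed values.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Op:
    name: str
    flops: float        # floating-point operations performed
    bytes_moved: float  # bytes read from / written to memory

    @property
    def intensity(self) -> float:
        """Arithmetic intensity in FLOPs per byte."""
        return self.flops / self.bytes_moved


def place_ops(ops, ridge_point):
    """Send memory-bound ops (intensity below the ridge point) to PIM, the rest to the GPU."""
    return {op.name: ("PIM" if op.intensity < ridge_point else "GPU") for op in ops}


FP16 = 2  # bytes per element (assumed)
seq_len, head_dim, tokens, hidden = 2048, 128, 2048, 4096  # assumed sizes

ops = [
    # Decode step, one head: q . K^T reads every cached key exactly once.
    Op("decode_attention_scores",
       flops=2 * seq_len * head_dim,
       bytes_moved=seq_len * head_dim * FP16),
    # Prefill projection: (tokens x hidden) @ (hidden x hidden) reuses weights across tokens.
    Op("prefill_projection",
       flops=2 * tokens * hidden * hidden,
       bytes_moved=(tokens * hidden + hidden * hidden + tokens * hidden) * FP16),
]

placement = place_ops(ops, ridge_point=100.0)  # hypothetical ridge point, FLOPs/byte
print(placement)  # {'decode_attention_scores': 'PIM', 'prefill_projection': 'GPU'}
```

Decode-time attention lands at about 1 FLOP/byte while the prefill projection reaches about 1,024, which is why attention during generation is the usual target for PIM offloading.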

What is PIM (Processing-In-Memory)?

PIM (Processing-In-Memory) is an innovative computing architecture that integrates computational capabilities directly into memory, eliminating the need to transfer data from memory to the processor for computation. In traditional systems, large volumes of data must be continuously transferred from memory to the processor, causing the ‘memory bottleneck’ and high energy consumption. By contrast, PIM performs computations within the memory itself, offering a promising way to address these issues at their root.

Minsung Jang giving an interview


“Researching cloud technology for humanity.”

How did the collaboration between Samsung SDS and UC Berkeley’s Sky Computing Lab, one of the most promising research groups in the computing field, begin?

The partnership with UC Berkeley’s Sky Computing Lab originated from a shared vision with Professor Ion Stoica, who champions the philosophy of "innovation through open collaboration." A co-founder of Databricks and a pioneer in cloud technology, Professor Stoica has developed groundbreaking data processing frameworks. His Sky Computing Lab is dedicated to creating accessible technologies that enable everyone to use cloud computing and AI.

Because cloud and AI technologies have such a profound impact on humanity, no single research institution or tech giant can drive transformative change alone. Sky Computing Lab therefore actively collaborates with organizations that share its mission. Samsung SDS, too, believes its cloud platform and AI services can play a pivotal role in fostering this collaborative innovation and advancing humanity’s progress. This shared commitment led Samsung SDS to join as a founding member.

UC Berkeley Sky Computing Lab’s Official Website


Through this collaboration, two significant outcomes have been realized: vLLM and SkyPilot. Today, vLLM serves as a key software solution for AI services, adopted by leading companies such as Samsung SDS, Microsoft, and Meta. SkyPilot, on the other hand, is a tool designed to streamline the use of multi-cloud environments. Furthermore, we have completed integration work for Samsung Cloud Platform (SCP), enabling users to seamlessly utilize SkyPilot upon installation.
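Part of what a multi-cloud tool automates is deciding where a job should run. The toy sketch below captures only the basic idea of choosing the cheapest offer that satisfies a GPU requirement across several clouds. It is not SkyPilot's API or optimizer: the `Offer` type, the cloud and region names, and the prices are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Offer:
    cloud: str
    region: str
    accelerator: str
    count: int            # accelerators per instance
    hourly_price: float   # hypothetical price per hour


def cheapest_offer(offers, accelerator, count):
    """Return the lowest-priced offer with at least `count` of the requested accelerator."""
    candidates = [o for o in offers if o.accelerator == accelerator and o.count >= count]
    if not candidates:
        raise LookupError(f"no offer with {count}x {accelerator}")
    return min(candidates, key=lambda o: o.hourly_price)


catalog = [  # illustrative entries only, not real price data
    Offer("cloud-a", "region-1", "A100", 8, 30.0),
    Offer("cloud-b", "region-2", "A100", 8, 27.5),
    Offer("cloud-c", "region-3", "H100", 8, 45.0),
    Offer("cloud-b", "region-4", "A100", 4, 14.0),
]

best = cheapest_offer(catalog, "A100", 8)
print(best.cloud, best.region, best.hourly_price)  # cloud-b region-2 27.5
```

A real system also has to handle availability, quotas, data location, and failover when capacity runs out, which is why delegating this choice to a dedicated tool is attractive.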

What are vLLM and SkyPilot?

vLLM is a high-performance LLM inference library developed by the Sky Computing Lab at UC Berkeley. It manages the KV cache with PagedAttention (a key technology for improving LLM inference efficiency) and uses FlashAttention and CUDA graphs to achieve up to 24 times higher throughput than HuggingFace Transformers. SkyPilot, on the other hand, is an open-source, cloud-agnostic framework for running and deploying workloads. It makes vLLM-based services easier to scale by offering automatic resource discovery, cost optimization, and support for multi-region and multi-cloud environments. Together, these tools let research labs and enterprises rapidly deploy and operate cost-effective, highly available LLM services without the complexity of managing infrastructure.
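The core idea behind PagedAttention's KV caching is borrowed from virtual memory: each sequence's keys and values are stored in fixed-size physical blocks that are allocated on demand and tracked through a per-sequence block table, instead of reserving contiguous memory for the maximum sequence length. The class below is a simplified sketch of that bookkeeping only; `PagedKVCache` and its methods are hypothetical names, not vLLM's API, and no tensors are stored.

```python
from __future__ import annotations


class PagedKVCache:
    """Toy block-table allocator in the spirit of PagedAttention (not vLLM's API)."""

    def __init__(self, num_blocks: int, block_size: int):
        self.block_size = block_size
        self.free_blocks = list(range(num_blocks))  # physical block ids
        self.block_tables = {}  # seq_id -> list of physical block ids, in logical order
        self.lengths = {}       # seq_id -> number of tokens stored

    def append_token(self, seq_id: int) -> tuple[int, int]:
        """Reserve a KV slot for one new token; return (physical_block, offset)."""
        table = self.block_tables.setdefault(seq_id, [])
        length = self.lengths.get(seq_id, 0)
        if length % self.block_size == 0:  # no block yet, or the last one is full
            if not self.free_blocks:
                raise MemoryError("KV cache out of blocks")
            table.append(self.free_blocks.pop(0))
        self.lengths[seq_id] = length + 1
        return table[-1], length % self.block_size

    def free(self, seq_id: int) -> None:
        """Return all of a finished sequence's blocks to the free pool."""
        self.free_blocks.extend(self.block_tables.pop(seq_id, []))
        self.lengths.pop(seq_id, None)


cache = PagedKVCache(num_blocks=8, block_size=4)
slots = [cache.append_token(seq_id=0) for _ in range(6)]
print(slots)  # [(0, 0), (0, 1), (0, 2), (0, 3), (1, 0), (1, 1)]
other = cache.append_token(seq_id=1)  # a second sequence gets its own block
table_before_free = list(cache.block_tables[0])
cache.free(0)
```

Because memory is committed one small block at a time, at most one partially filled block per sequence is wasted, which leaves room to batch more concurrent requests.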

List of Collaborating Companies introduced on the UC Berkeley Sky Computing Lab’s Official Website


“Archive storage, a product directly commercialized by Samsung SDS Research”

Can you share a project from Cloud Research Team that you personally remember?

The archive storage commercialization project stands out. Samsung SDS Research typically develops core technologies and transfers them to Business Divisions for commercialization, but this project was an exception: Samsung SDS Research took an active role in product planning, a departure from the usual process.

The project brought together a diverse team, including members from the Cloud Research Team, and the company’s Cloud Service Business Division’s business team, technical team, and operations team. We collaborated daily, functioning as a cohesive unit to develop the product.

Samsung SDS Research took on work beyond its traditional scope, including pricing, system operation setup, and customer sales strategy. It felt much like working at a startup. Despite numerous challenges, collaborating closely to bring the product to market quickly remains one of my most memorable experiences.

What is Samsung SDS Archive Storage service?

Archive Storage is a cost-efficient object storage solution tailored for long-term retention of large-scale data. It offers a lifecycle management feature that automatically migrates data exceeding user-defined criteria from Object Storage to Archive Storage. Furthermore, it allows seamless restoration of data back to Object Storage when required.
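A lifecycle rule of this kind boils down to a periodic check that moves objects meeting a criterion to a cheaper tier. The sketch below assumes an age-based rule (objects not modified for a given number of days) purely for illustration; `StoredObject`, `apply_lifecycle`, and `restore` are hypothetical names, not the Samsung SDS Archive Storage API, and the restore step simply flips the tier without modeling how long a real restore takes.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class StoredObject:
    key: str
    last_modified: datetime
    tier: str = "object"  # "object" (Object Storage) or "archive" (Archive Storage)


def apply_lifecycle(objects, archive_after_days, now):
    """Move objects older than `archive_after_days` from the object tier to the archive tier."""
    cutoff = now - timedelta(days=archive_after_days)
    moved = []
    for obj in objects:
        if obj.tier == "object" and obj.last_modified < cutoff:
            obj.tier = "archive"
            moved.append(obj.key)
    return moved


def restore(obj):
    """Bring an archived object back to the object tier."""
    if obj.tier == "archive":
        obj.tier = "object"


objects = [
    StoredObject("logs/2024-01.gz", datetime(2024, 1, 31)),
    StoredObject("reports/q4.pdf", datetime(2025, 1, 20)),
]
moved = apply_lifecycle(objects, archive_after_days=90, now=datetime(2025, 1, 31))
tiers_after_lifecycle = [o.tier for o in objects]
print(moved)  # ['logs/2024-01.gz']
restore(objects[0])
```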

Samsung SDS Archive Storage Product Page


“Storage services that will be more naturally connected”

What do you envision the future of cloud technology to be in 10 years?

The history of computing reveals a recurring cycle of centralization and personalization. Initially, we relied on terminals connected to large mainframe computers that occupied entire buildings. This was followed by the rise of personal computers. Today, we are in the cloud era, where centralization is once again dominant, and AI services are provided through cloud environments.

Looking ahead, I anticipate that in the next decade, centralized cloud, edge computing, and mobile-based computing will coexist harmoniously. Cloud computing will remain indispensable for large-scale data processing and resource-intensive tasks, while mobile computing will handle personalized processing needs.

The most transformative change will be the seamless integration of these technologies from the user’s perspective. Users will no longer need to manually choose between cloud, edge, or mobile resources. Instead, AI-driven agents will autonomously make decisions and manage tasks across cloud and mobile environments. This evolution will lead to a computing ecosystem where the boundaries between cloud and mobile dissolve, enabling a naturally connected experience.

“Where research meets reality: Samsung SDS’s Cloud Research Team”

What values do you prioritize in leading a corporate research team?

Conducting research in a corporate setting inherently involves navigating the delicate balance between two seemingly conflicting priorities. On one hand, research must ultimately contribute to the company’s profitability. On the other hand, there is the intrinsic value of pursuing innovation. This creates a dilemma: acknowledging the importance of forward-looking research while ensuring it aligns with the company’s strategic goals.

Since beginning my career in a corporate research lab in 1999, I’ve come to firmly believe that achieving this balance is the cornerstone of corporate research. Take archive storage technology as an example: without proactive preparation of foundational technologies, delays in commercialization would have been unavoidable. However, thanks to Samsung SDS’s foresight and readiness, we were able to respond swiftly to seize market opportunities.

Innovative research must therefore be rooted in the intersection of business value and technological advancement. This is the essence of corporate research’s purpose. Additionally, maintaining technological superiority in core business areas is crucial. Falling behind in technological competitiveness can hinder long-term advantages in pricing, cost efficiency, and quality, ultimately making it challenging to lead the market. This is the philosophy I uphold.

Finally, what would you like to say to researchers interested in joining Samsung SDS’s Cloud Research Team?

The key strength of Samsung SDS’s Cloud Research Team is the environment where research outcomes can immediately be applied to business and real-world solutions. We go beyond publishing innovative technologies in papers; we also engage in the full process of developing and implementing tangible products into business operations. I believe this ability to bridge the gap between research and practical application is a defining feature of Samsung SDS Research.

Moreover, our primary focus is on addressing complex challenges faced by large enterprises. For researchers and engineers who thrive on solving intricate problems, this aspect of our work can be particularly appealing and rewarding.

Minsung Jang envisioning a future of continuously advancing cloud technologies.


In addition, at Samsung SDS Research, the Cloud Research Team collaborates closely with the AI Research Team and the Security Research Team, fostering an environment where researchers can learn and experience diverse IT technologies. In today’s interconnected era of AI and cloud, such cross-disciplinary experience is essential. Samsung SDS’s Cloud Research Team is dedicated to driving innovation by developing cloud technologies that will continue to evolve and grow.

The vision of Samsung SDS’s Cloud Research Team goes beyond traditional infrastructure. Using GPUs, a critical resource in the AI age, efficiently is not only a technical challenge but also a step toward democratizing AI. For more insights, visit the Samsung SDS Technology Blog.